We’ve all been there: you ask Siri a question, and it responds with the ever-frustrating “Sorry I didn’t understand that”. It could be an accent or dialect problem, the fact that Siri isn’t trained on the vast volume of data that Google’s AI is trained on, or just that Apple absolutely dropped the ball on Siri. Siri debuted as an app almost 13 years ago, yet even after more than a decade it still feels noticeably dumb and unhelpful. Google’s voice AI seems to be overwhelmingly the most popular choice nowadays, but there’s a new kid on the block that’s absolutely eating Google’s lunch, at least in the search department.

Unveiled less than a year ago, ChatGPT from OpenAI took the world by storm for its incredible natural language processing capabilities, hitting a million users in just 5 days, and 100 million users in just two months (that’s faster than the growth seen by tech giants like Facebook, Google, and even Snapchat). ChatGPT’s intelligent and human-like responses make it the perfect AI chatbot, especially given that it understands natural sentences much better than most other AI tools, and it’s more likely to respond with a helpful answer than with an apology. Developer Mate Marschalko saw this as a brilliant opportunity to bring that intelligence to Siri, turning it into a much more helpful voice AI. With a little bit of hackery (which took him just about an hour), Marschalko combined Siri’s voice features with GPT-3’s NLP intelligence using Apple’s Shortcuts feature. The result? A much better voice AI that fetches better search results, offers more meaningful conversations, and even lets you control your smart home in a much more ‘human-friendly’ way… almost rivaling Tony Stark’s JARVIS in terms of usability. The best part? You can do it too!

Marschalko lists out his entire procedure in a Medium blog post that I definitely recommend checking out if you want to build your own ‘SiriGPT’ too, with an approach that required absolutely no coding experience. “I asked GPT-3 to pretend to be the smart brain of my house, carefully explained what it can access around the house and how to respond to my requests,” he said. “I explained all this in plain English with no programme code involved.”

The video above demonstrates exactly how Marschalko’s ‘SiriGPT’ works. His home is filled with dozens of lights, thermostats, underfloor heating, a ventilation unit, cameras, and a lot more, making it the perfect testing ground for possibly every use case. Marschalko starts by splitting up his tasks into four distinct request types: Command, Query, Answer, and Clarify. Each request type has its own process that GPT-3 follows to determine what needs to be done.
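To make the idea of a plain-English prompt more concrete, here is a minimal Python sketch of how such a prompt might be assembled. It is not Marschalko’s actual prompt: the device inventory in `HOUSE`, the one-line descriptions in `REQUEST_TYPES`, the `build_prompt` helper, and the instruction to reply with a JSON object are all assumptions made for illustration.

```python
# Hypothetical device inventory; the real house has far more devices.
HOUSE = {
    "office": ["ceiling light"],
    "driveway": ["outside lights"],
    "kitchen": ["oven", "thermostat"],
}

# The four request types named in the article, with assumed descriptions.
REQUEST_TYPES = {
    "command": "change the state of a device",
    "query": "report the state of a device",
    "answer": "respond to a general question",
    "clarify": "ask the user to rephrase an unclear request",
}


def build_prompt(user_request: str) -> str:
    """Describe the house and the request types in plain English."""
    lines = [
        "You are the smart brain of my house.",
        "Devices you can access:",
    ]
    for room, devices in HOUSE.items():
        lines.append(f"- {room}: {', '.join(devices)}")
    lines.append("Classify each request as one of:")
    for name, meaning in REQUEST_TYPES.items():
        lines.append(f"- {name}: {meaning}")
    # Assumed output format so the reply is easy to process afterwards.
    lines.append("Reply with a single JSON object containing the key 'action'.")
    lines.append(f"Request: {user_request}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_prompt("I'm recording this video in the dark, in the office."))
```

The resulting text would then be sent to the language model along with each spoken request, which is also why every request ends up being fairly long.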

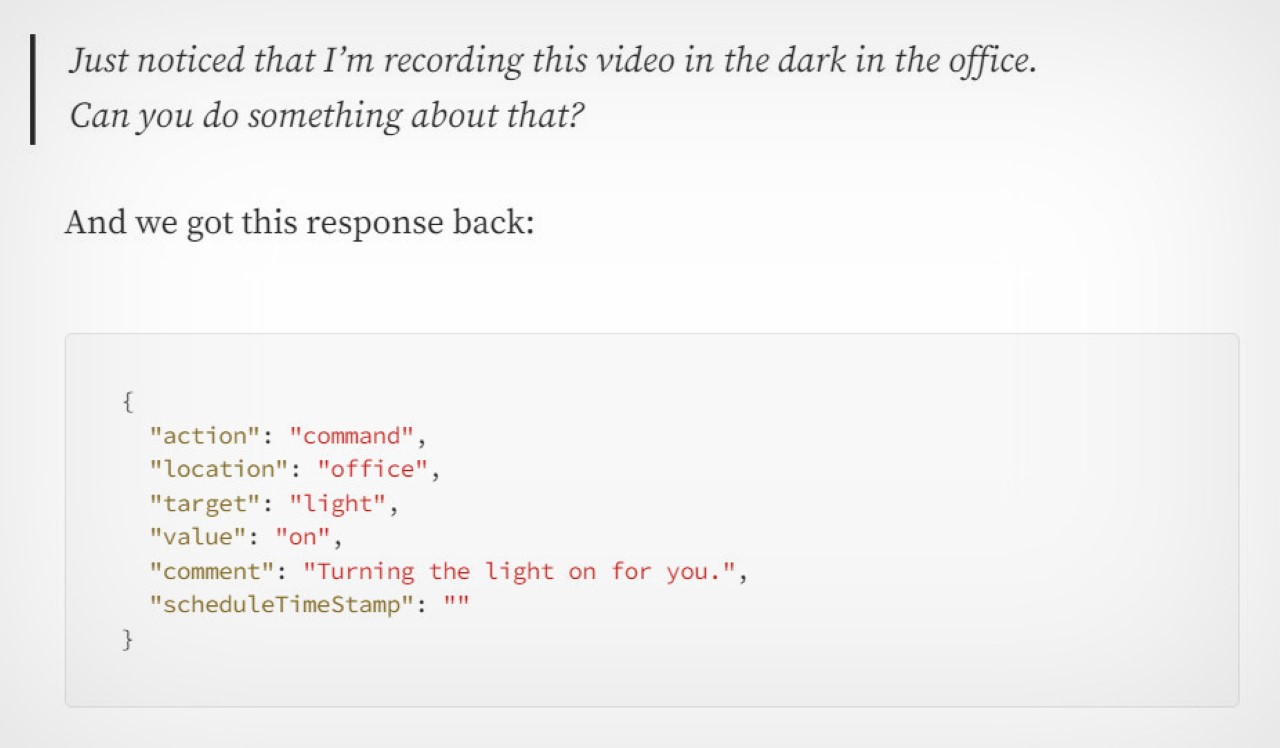

Marschalko’s AI is significantly better at processing indirectly worded commands.

Where the magic really unfolds is in how even indirect requests from Marschalko are understood and translated into meaningful actions by the assistant. While Siri and other AI assistants only respond to direct requests like “turn the light on”, or “open the garage door”, GPT-3 allows for more nuanced conversations. In one example, Marschalko says “Notice that I’m recording this video in the dark, in the office. Can you do something about that,” and the assistant promptly turns on the light while replying with an AI-generated response instead of a template reply. In another example, he says “my wife is on the way driving home, and will be here in 15 minutes. Switch lights on for her outside just before she parks up”, to which the assistant responds with “The lights should be turned on by the time your guest arrives!”, demonstrating two powerful things: A. the ability to grasp a concept as complex as ‘switch a specific light on after a delay of several minutes’, and B. the ability to respond in a natural manner that conveys that it understood exactly what you wanted done.

Marschalko hooked all this into a shortcut called Okay Smart Home. To trigger it, all he has to do is activate Siri, say the name of the shortcut (in this case “Okay Smart Home”), and then begin talking to his assistant. The four request types allow Marschalko to cover all kinds of scenarios, from controlling smart home appliances with the Command request to asking for the status of an appliance (like the temperature of a room or the oven) with the Query request. The Answer request covers more chat-centric queries like asking the AI for recommendations, suggestions, or general information from across the web, and the final Clarify request allows the AI to ask you to repeat or rephrase your question if it is unable to match it to any of the three previous request types.
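The routing between the four request types can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it supposes the model replies with a JSON object whose `action` field names the request type, and the `device`, `value`, and `text` fields as well as the `handle_reply` function are hypothetical names, not taken from Marschalko’s shortcut. Anything that cannot be parsed or recognised falls back to Clarify.

```python
import json

FALLBACK = "clarify: could you rephrase that?"


def handle_reply(raw_reply: str) -> str:
    """Route an assumed JSON reply to one of the four request types."""
    try:
        reply = json.loads(raw_reply)
    except json.JSONDecodeError:
        # The model did not return valid JSON, so ask the user again.
        return FALLBACK
    if not isinstance(reply, dict):
        return FALLBACK

    action = reply.get("action")
    if action == "command":
        # A real setup would call the smart home platform here.
        return f"command: set {reply.get('device')} to {reply.get('value')}"
    if action == "query":
        return f"query: read state of {reply.get('device')}"
    if action == "answer":
        return f"answer: {reply.get('text', '')}"
    # Unknown or missing action: treat it as a Clarify request.
    return FALLBACK


if __name__ == "__main__":
    print(handle_reply('{"action": "command", "device": "office light", "value": "on"}'))
    print(handle_reply("the model rambled instead of returning JSON"))
```

Treating every unrecognised reply as Clarify keeps the assistant from acting on a guess, which matters when the action is something like opening a garage door.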

Although this GPT-powered assistant absolutely runs circles around the visibly dumber Siri, it doesn’t come for free. You have to set up an OpenAI account and pay for the tokens you use through its API. “Using the API will cost around $0.014 per request, so you could perform over 70 requests for $1,” Marschalko says. “Bear in mind that this is considered expensive because our request is very long, so with shorter ones you will pay proportionally less.”
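The arithmetic behind that quote is easy to check. The short Python sketch below uses the $0.014 figure Marschalko cites; the 20-requests-a-day usage and the 30-day month are assumptions picked purely for illustration, as are the function names.

```python
import math

COST_PER_REQUEST_USD = 0.014  # figure quoted by Marschalko


def requests_per_budget(budget_usd: float,
                        cost_per_request: float = COST_PER_REQUEST_USD) -> int:
    """Whole number of requests that fit into a given budget."""
    return math.floor(budget_usd / cost_per_request)


def monthly_cost(requests_per_day: int,
                 days: int = 30,
                 cost_per_request: float = COST_PER_REQUEST_USD) -> float:
    """Estimated spend for a month of steady use, rounded to cents."""
    return round(requests_per_day * days * cost_per_request, 2)


if __name__ == "__main__":
    print(requests_per_budget(1.0))  # 71, matching "over 70 requests for $1"
    print(monthly_cost(20))          # 8.4 for an assumed 20 requests a day
```

Because the price scales with the length of the request, a shorter prompt would lower `COST_PER_REQUEST_USD` and stretch the same budget further.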

The entire process is listed in this Medium blog post if you want to learn how to build out your own assistant with its distinct features. If you’ve got an OpenAI account and want to use the AI that Marschalko built in the video above, the Okay Smart Home shortcut is available to download and use with your own API keys.

The post Integrate ChatGPT into Siri to make your Apple voice assistant 100x smarter first appeared on Yanko Design.
