Voice recognition usually serves communication only in the most utilitarian sense, whether it's translating on the spot or keeping your hands on the wheel while you send a text message. Samsung has just been granted a US patent for a technique that would convey how we're truly feeling through visuals instead of leaving it to interpretation of audio or text. An avatar could change its eyes, mouth and other facial traits to reflect the speaker's emotional state based on how words are pronounced: sound exasperated or brimming with joy, and the consonants or vowels could produce a furrowed brow or a smile. The technique could be weighted against direct lip syncing so that the facial cues stay active mid-speech. If Samsung puts the patent to use, it won't be quite as expressive as direct facial mapping, but it could be a boon for more realistic facial behavior in video games and computer-animated movies. It could also signal whether there was any emotional subtext in a speech-to-text conversion, so try not to give away any sarcasm.


Samsung patent ties emotional states to virtual faces through voice, shows when we're cracking up originally appeared on Engadget on Tue, 06 Nov 2012 11:41:00 EDT.

USPTO

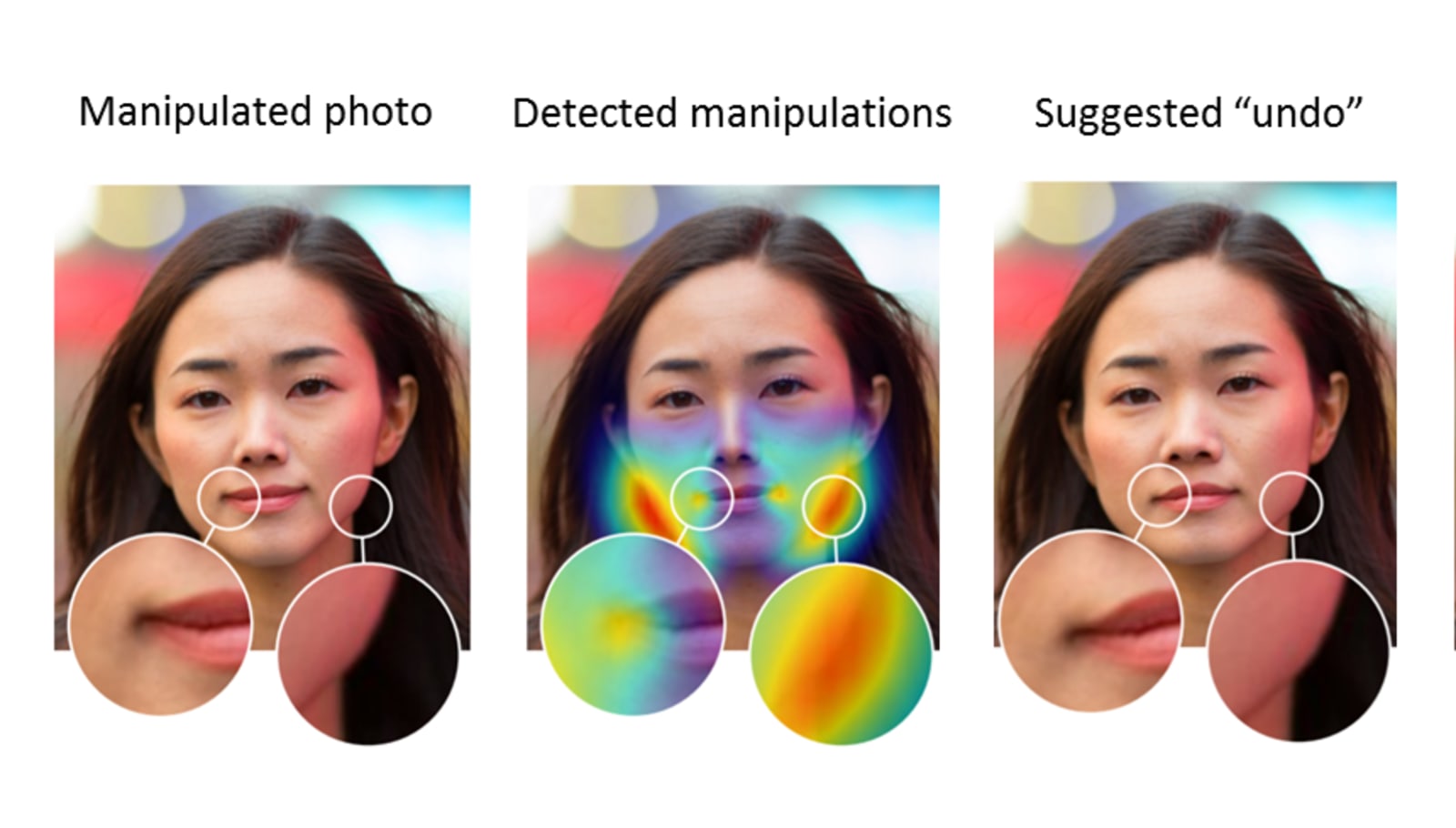

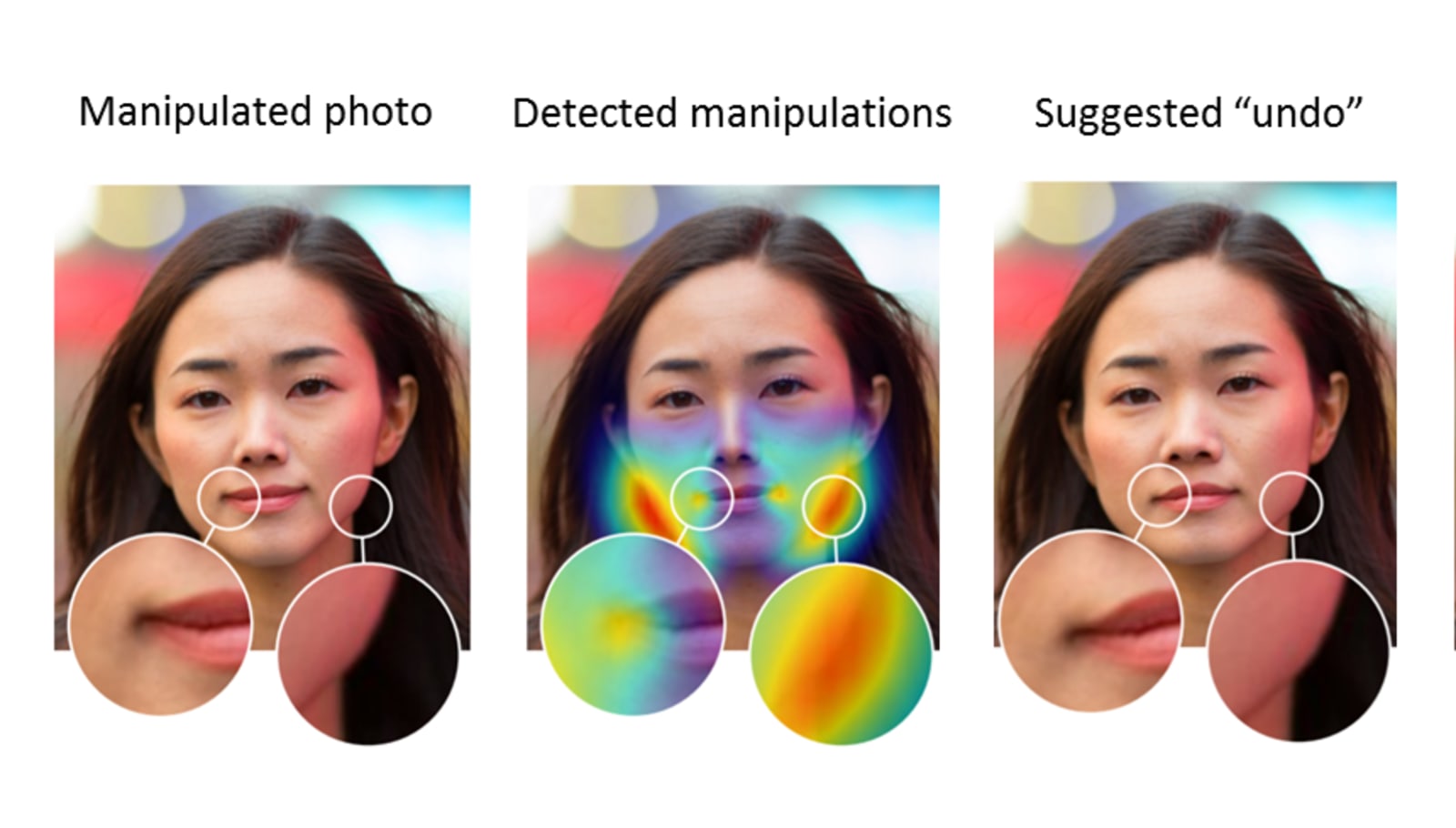
