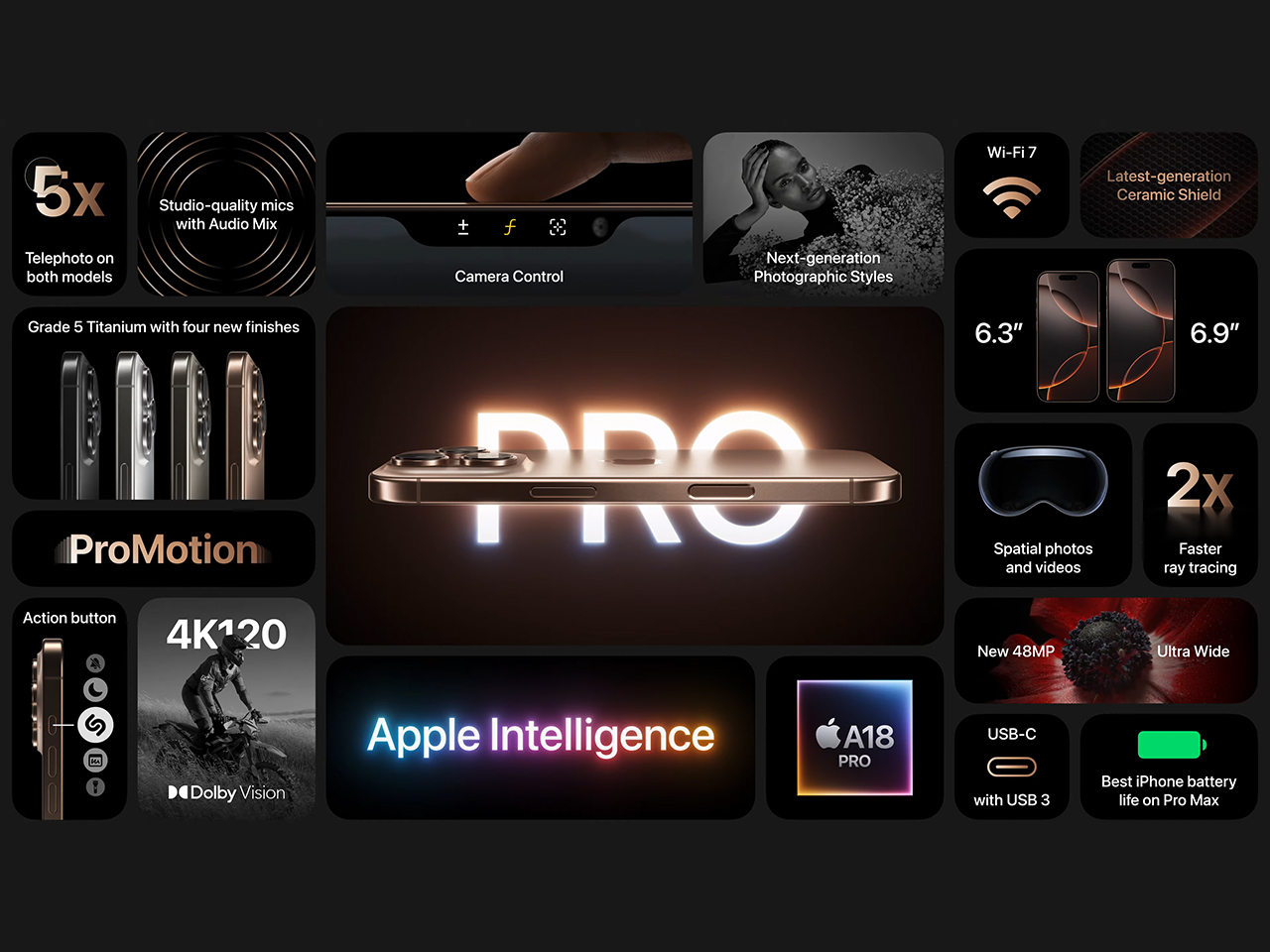

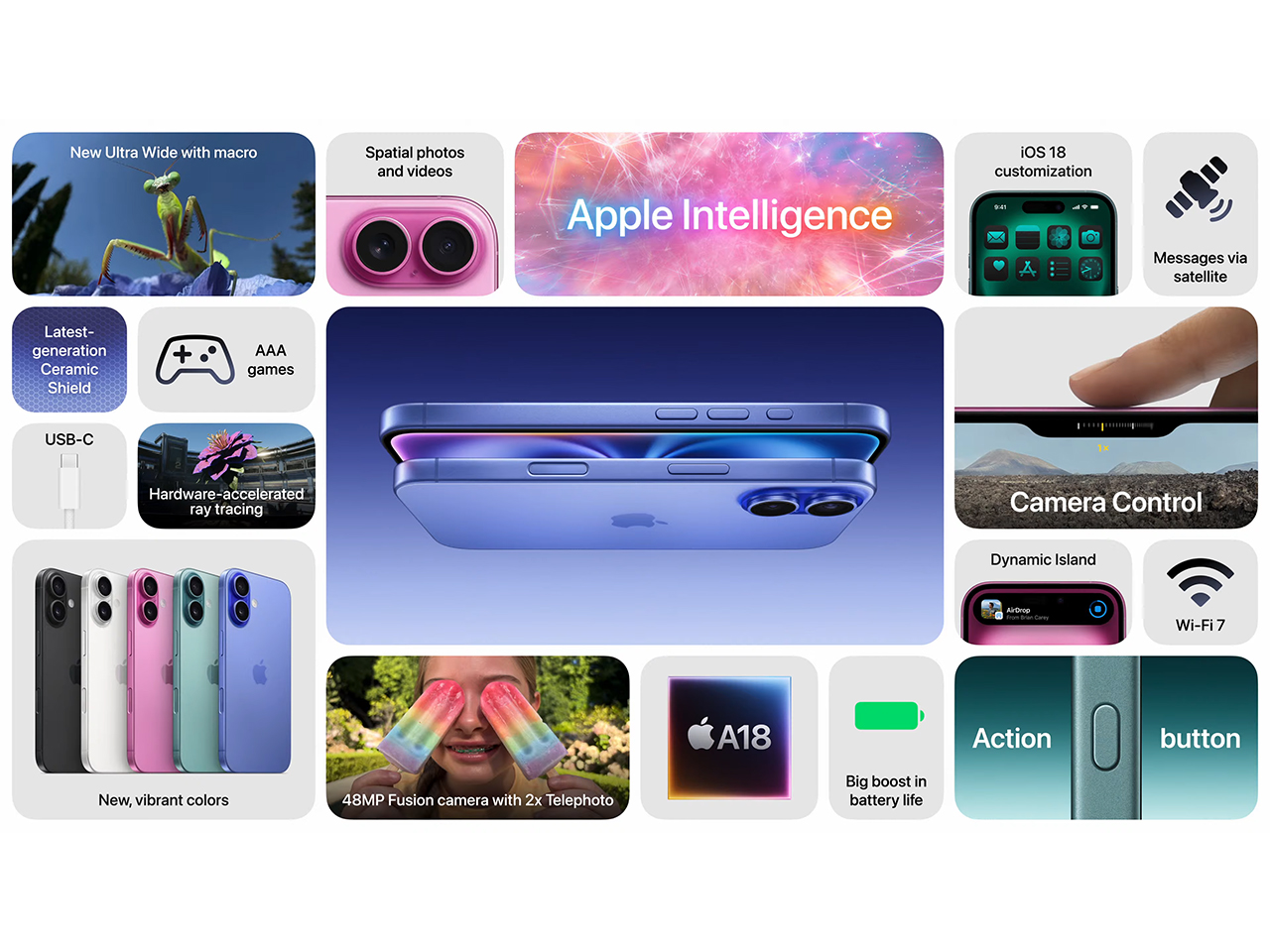

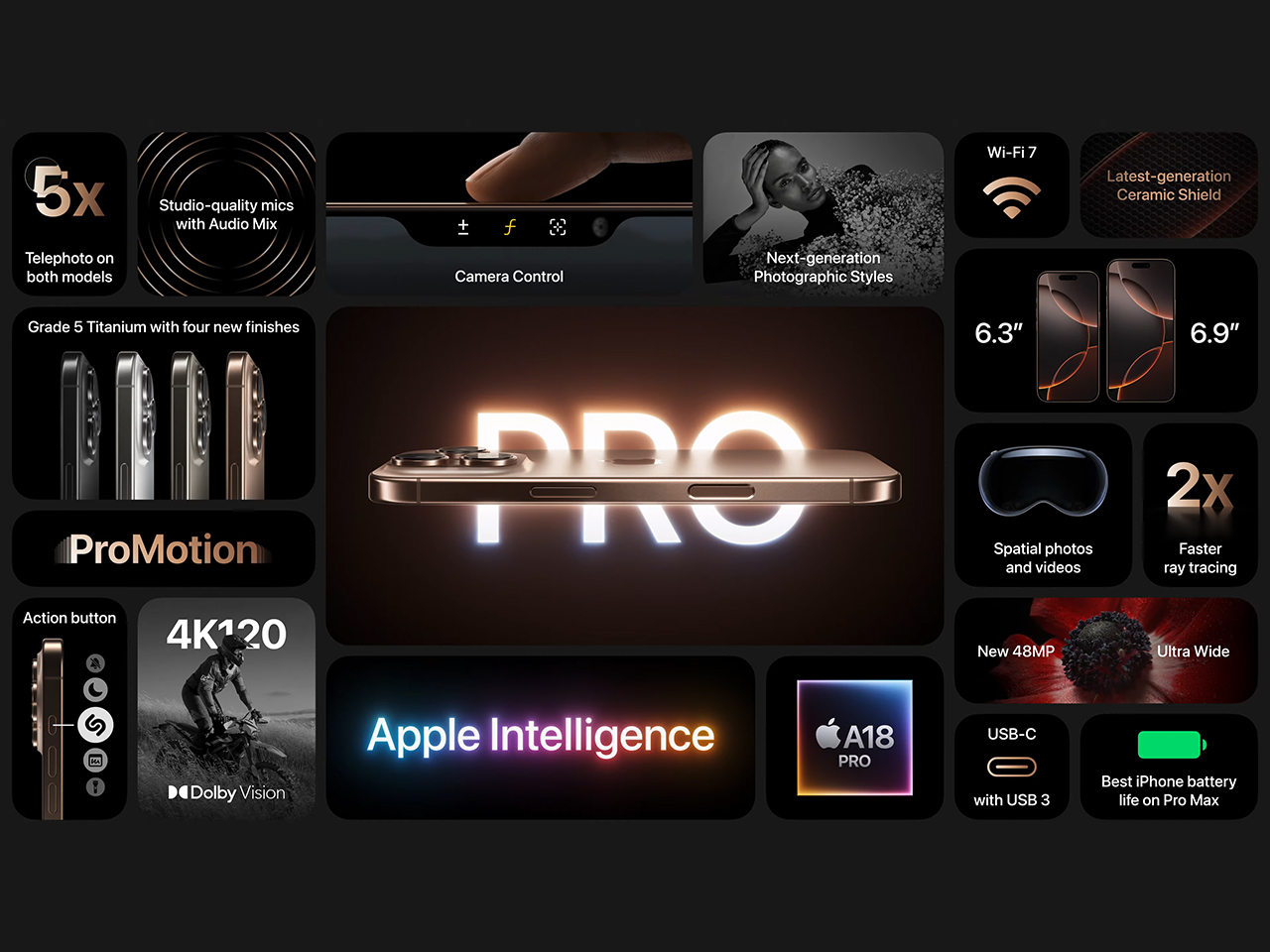

Apple has introduced the iPhone 16 Pro, bringing significant updates to both its design and performance. The iPhone 16 Pro and iPhone 16 Pro Max now feature larger displays, 6.3 inches and 6.9 inches respectively, making them the biggest iPhone screens yet. Despite the larger sizes, Apple has minimized the bezels, giving the phones a sleek, nearly edge-to-edge look. Adaptive 120Hz ProMotion technology provides smooth scrolling, while the Always-On display gives users quick access to key information.

Designer: Apple

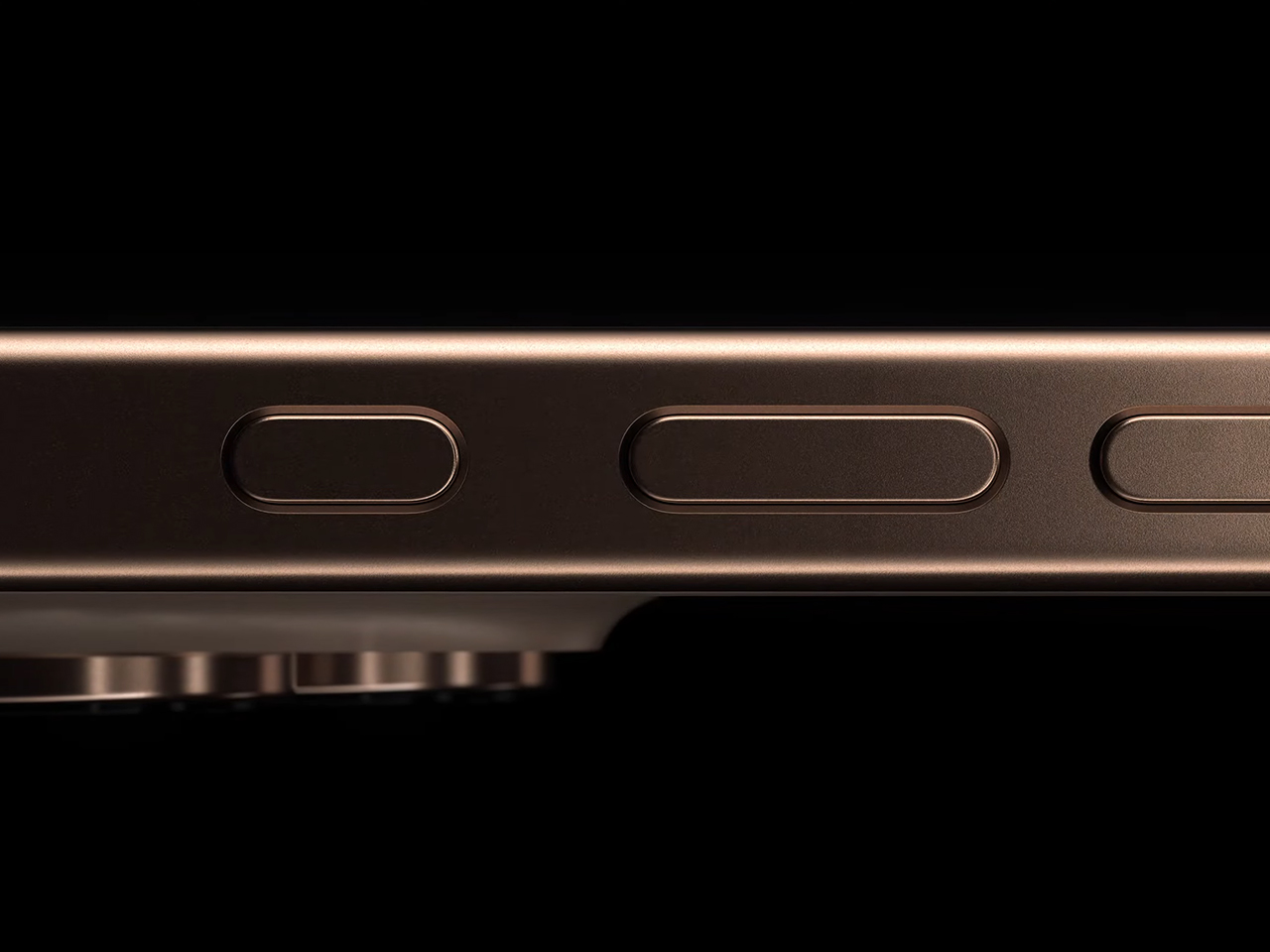

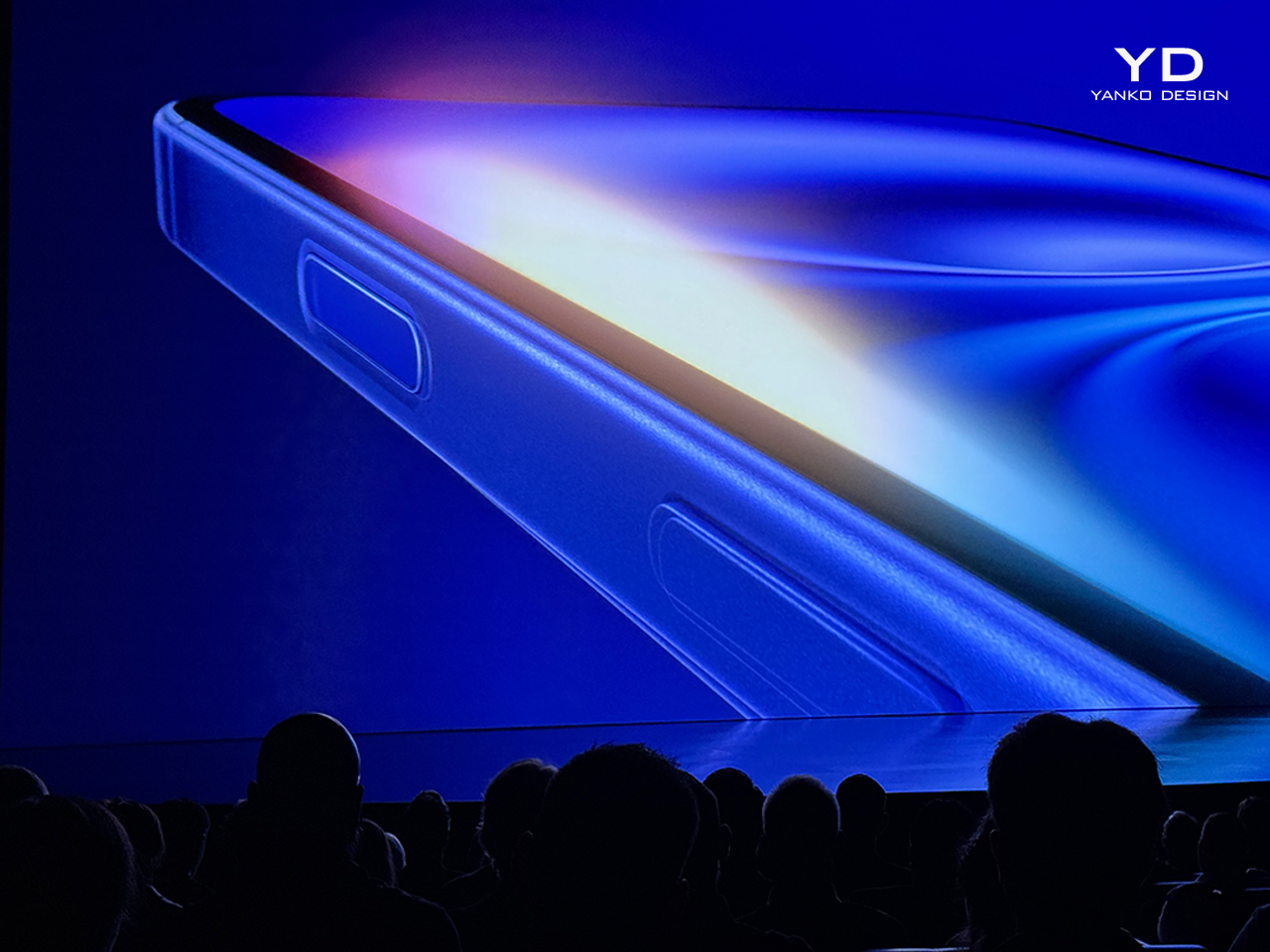

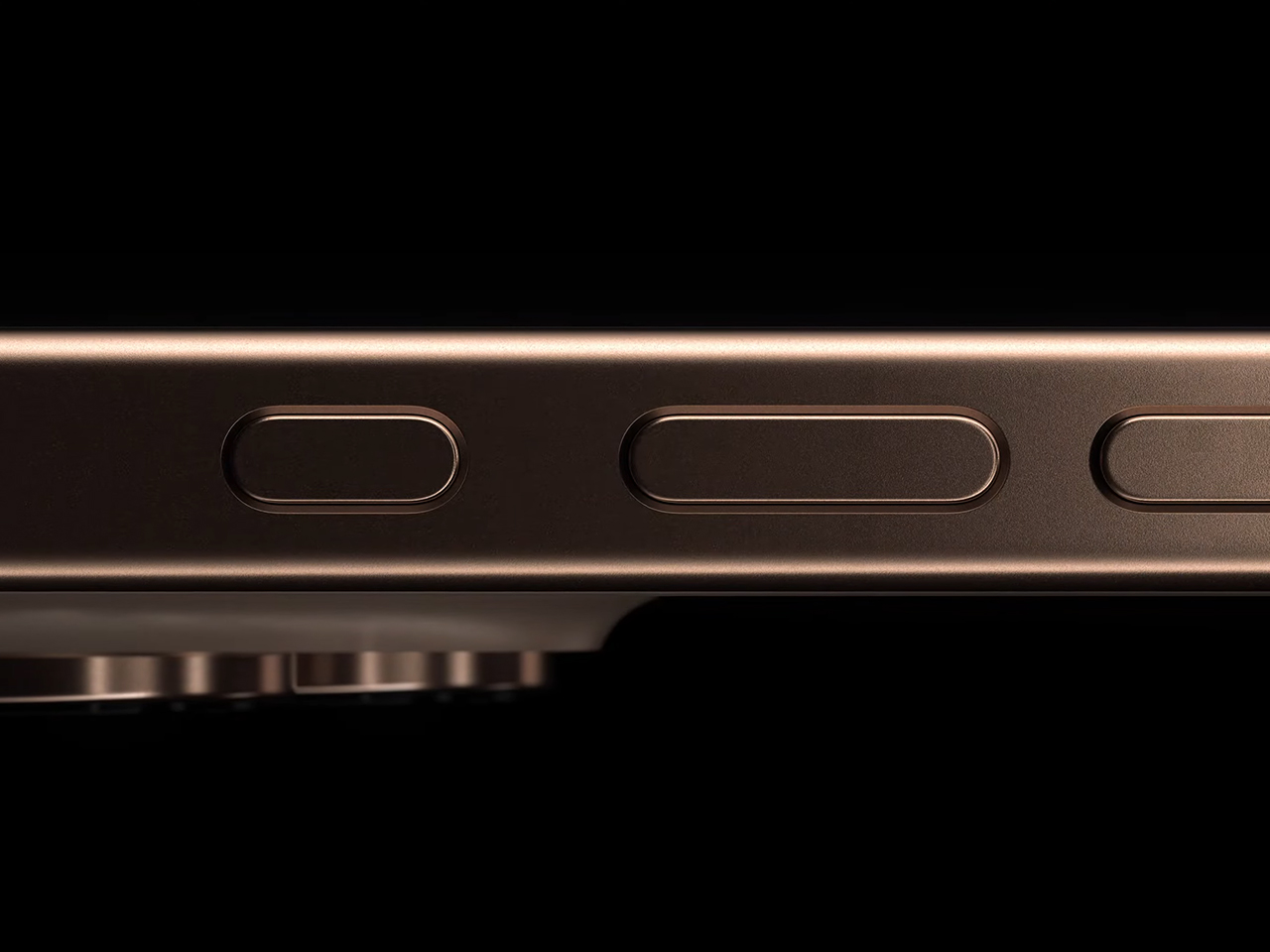

The iPhone 16 Pro is built from aerospace-grade titanium, which makes it both durable and light. The titanium body comes in four finishes: Black Titanium, White Titanium, Natural Titanium, and Desert Titanium. The device also incorporates a new thermal architecture that improves sustained performance while keeping the phone cool during heavy use. The Pro models are also water- and dust-resistant, helping them hold up in a wide range of environments.

Photography: Real-Time Control and Customization

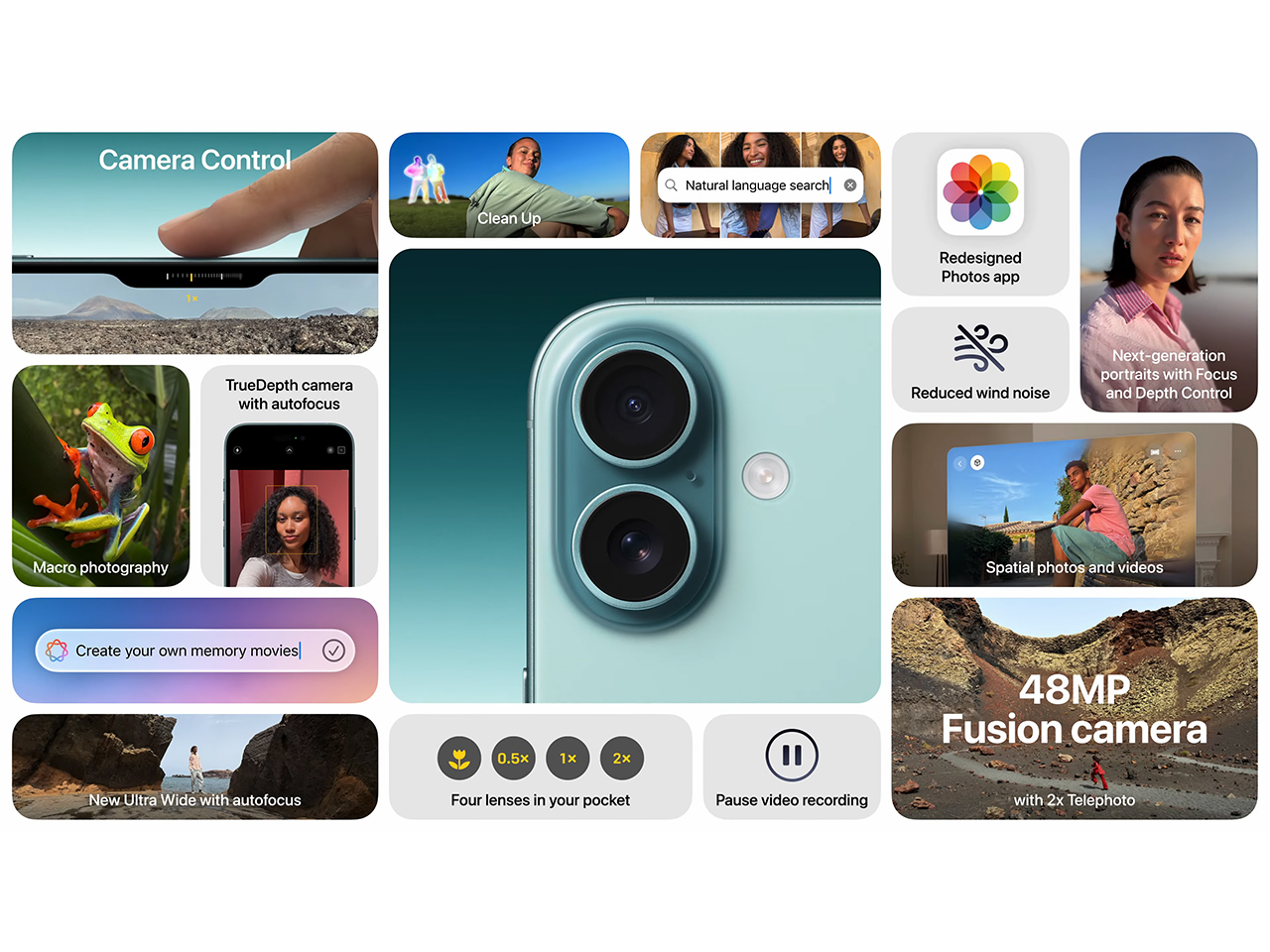

The iPhone 16 Pro empowers creativity through its 48-megapixel Fusion camera, which uses a second-generation quad-pixel sensor that reads data twice as fast, enabling zero shutter lag. Whether capturing fast-moving subjects or subtle details, the system preserves full resolution and detail. The sensor’s high-speed data transfer to the A18 Pro chip lets users capture 48-megapixel ProRAW and HEIF photos effortlessly.

A new 48-megapixel Ultra Wide camera complements the Fusion camera, offering high-resolution shots with autofocus. It excels at capturing wider scenes and detailed macro shots, delivering the sharpness and clarity creative users rely on. The 5x Telephoto camera, which has Apple’s longest focal length, brings distant subjects up close, while its tetraprism design improves optical performance for more detailed, high-quality images.

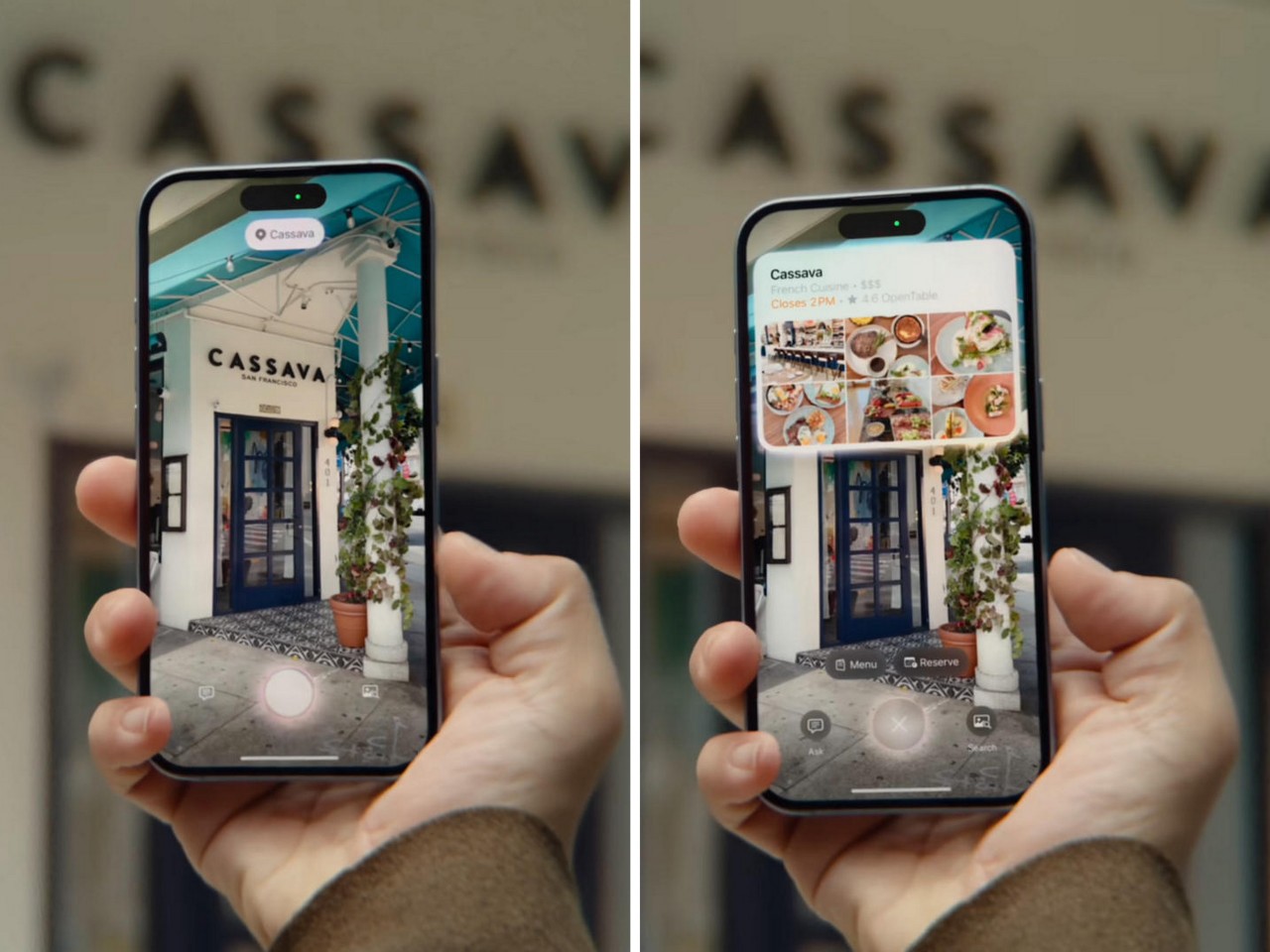

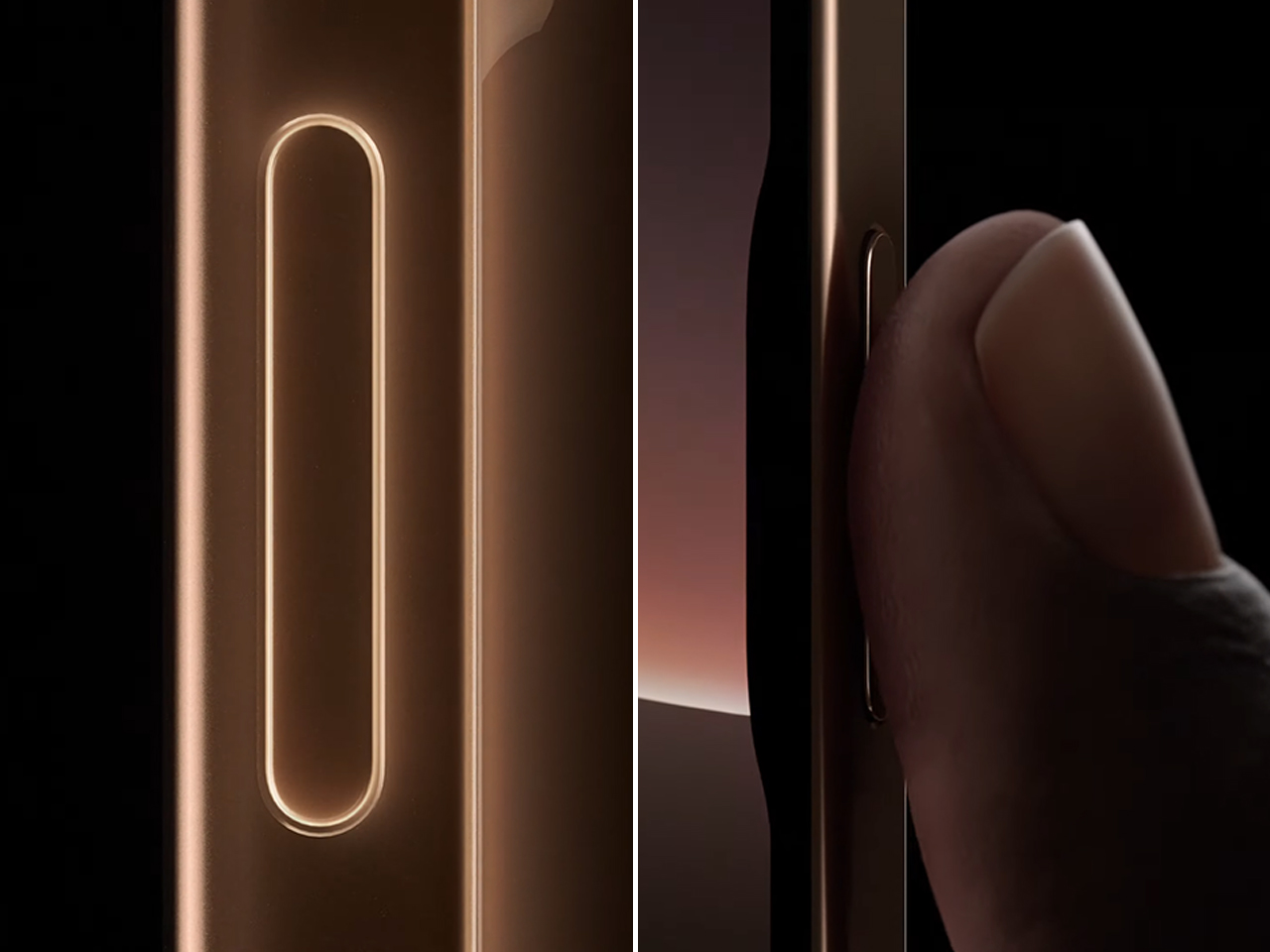

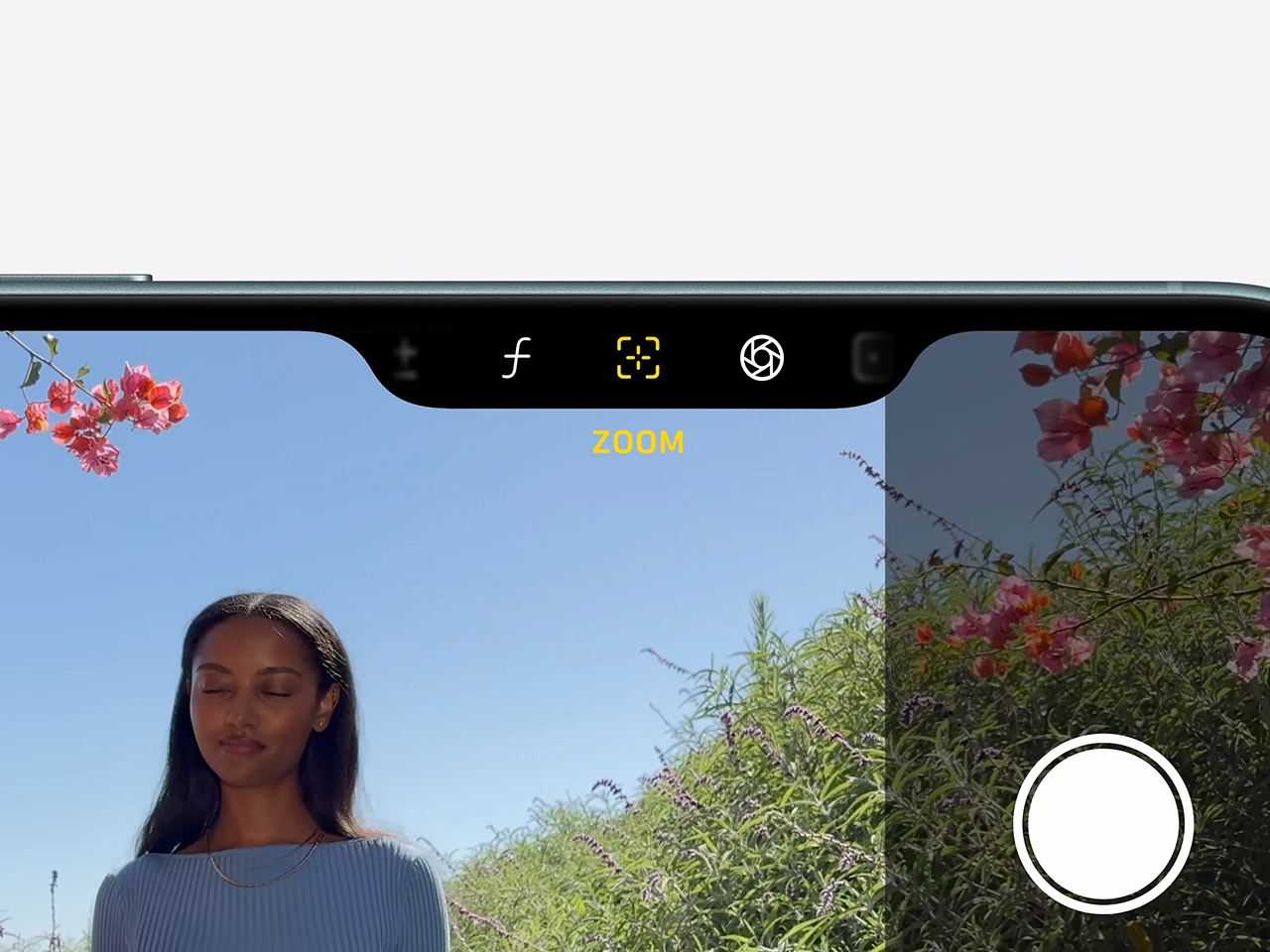

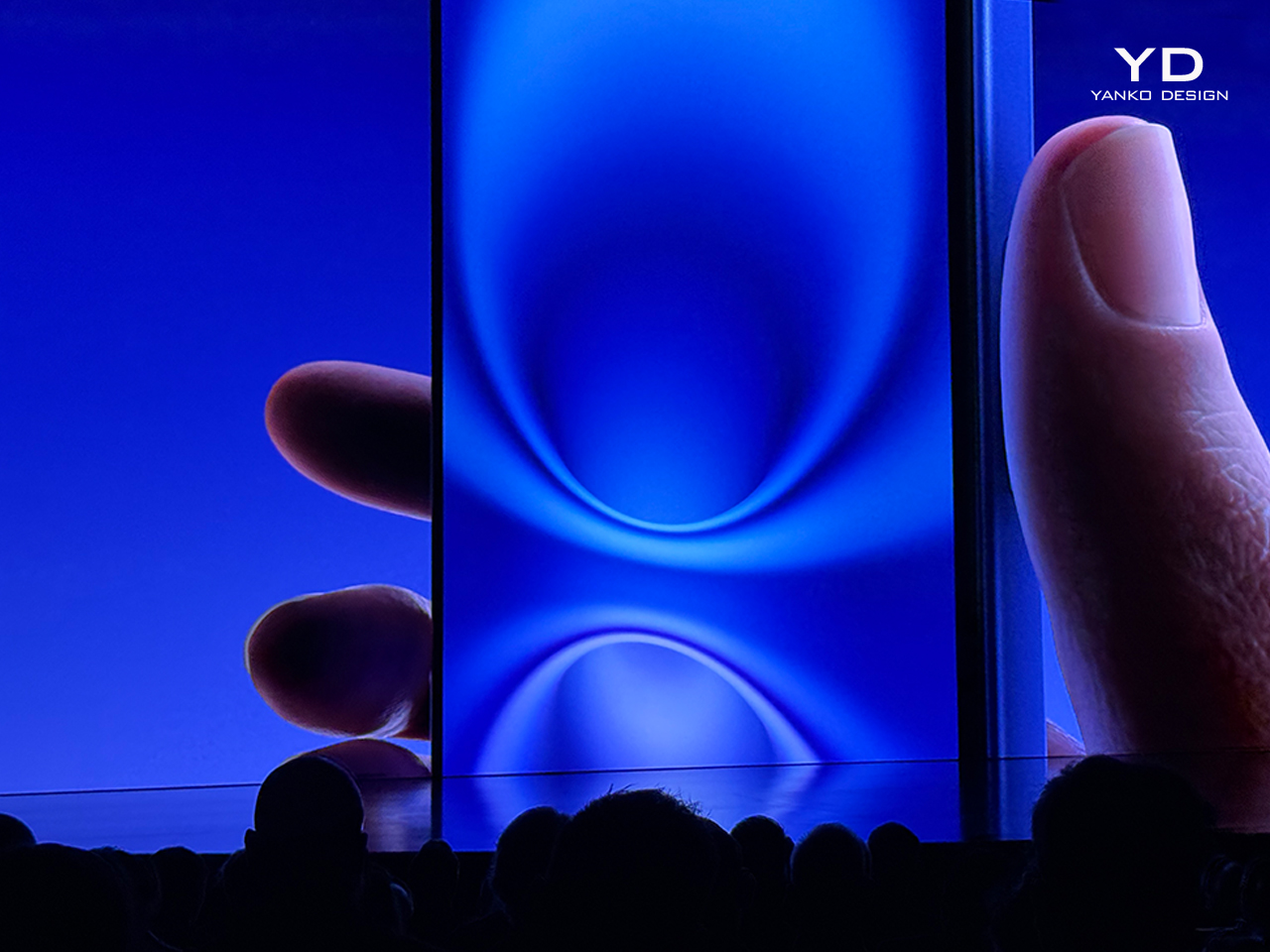

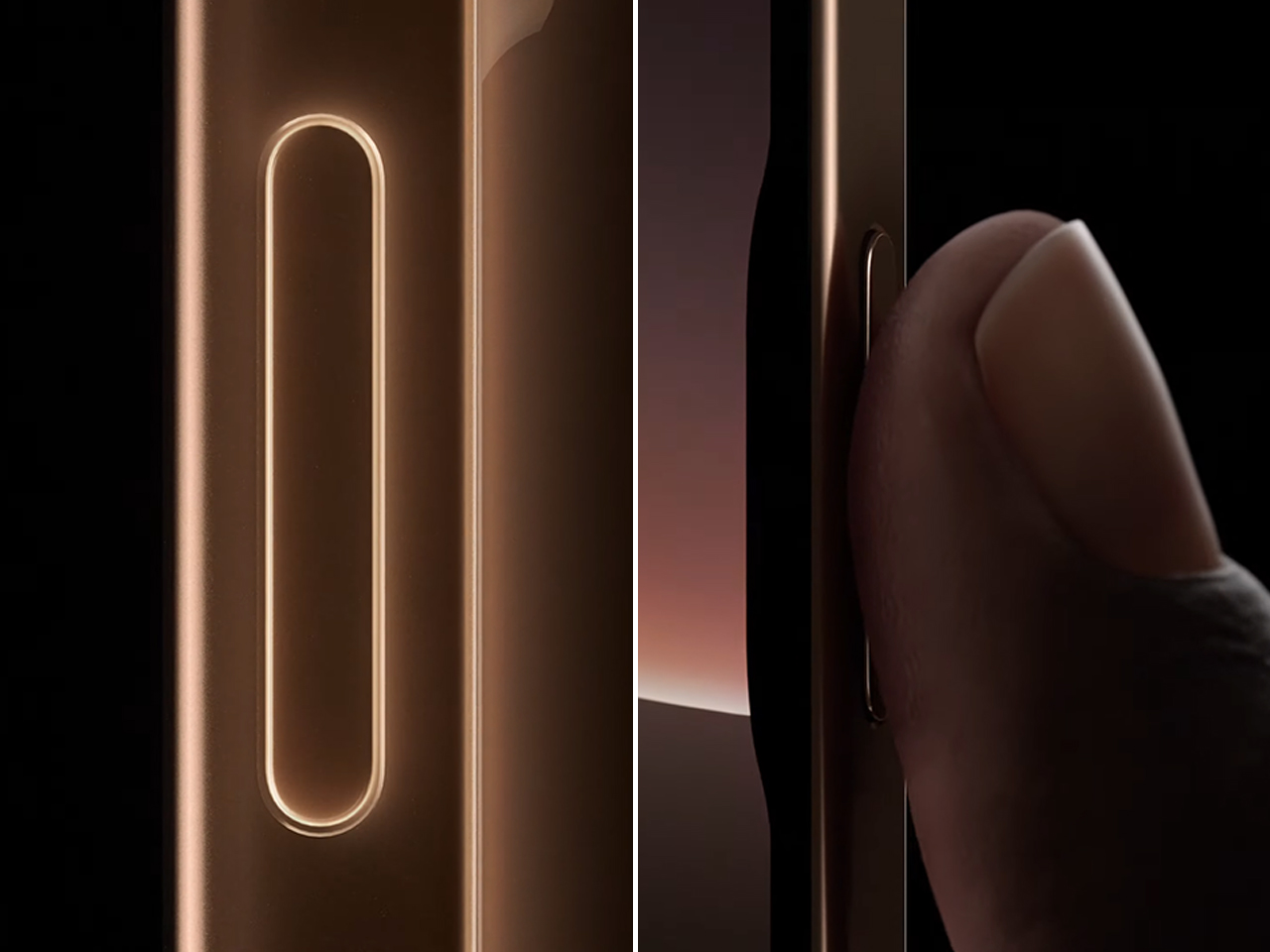

To streamline the photography process, the iPhone 16 Pro introduces Camera Control, a new dedicated control on the side of the device. It lets users quickly switch between lenses and adjust settings such as depth of field and exposure by sliding a finger along it. Later this year, a software update will add a two-stage shutter, allowing users to lock focus and exposure with a light press so they can reframe shots precisely.

Advanced Photographic Styles and Real-Time Grading

Apple has enhanced the creative range of the iPhone 16 Pro with advanced Photographic Styles, which let users personalize their photos in real time. With the A18 Pro chip, the image pipeline dynamically adjusts skin tones, colors, highlights, and shadows, enabling a wider range of aesthetic choices. Users can apply styles such as black-and-white, dramatic tones, and other looks that go beyond basic filters, then fine-tune the result with a new control pad that adjusts tone and color simultaneously.

What sets these styles apart is the ability to change them after capture, allowing greater flexibility in editing. The real-time preview enabled by the A18 Pro gives users a professional-level color grading experience as they shoot, a significant upgrade for photographers looking for more creative control.

Video: Cinema-Grade Capabilities

The iPhone 16 Pro significantly advances video recording. New 4K 120fps recording in Dolby Vision is made possible by the faster sensor in the 48-megapixel Fusion camera and the high transfer speeds of the Apple Camera Interface. The A18 Pro’s image signal processor (ISP) enables frame-by-frame, cinema-quality color grading, so professional-quality video can be captured directly on the iPhone.

One of the most exciting features is the ability to shoot 4K120 ProRes and Log video directly to an external storage device, ideal for high-end workflows that demand high frame rates and extensive color-grading control. Users no longer need to commit to a playback speed upfront; they can change it after capture. Whether for slow-motion effects or cinematic storytelling, the iPhone 16 Pro offers flexible playback options, including half speed, quarter speed, and one-fifth speed when played back at 24fps for a cinematic look.
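The playback speeds above follow from simple arithmetic. Assuming footage captured at 120fps is played back at a fixed, lower frame rate, the resulting speed is the playback rate divided by the capture rate. The 60fps and 30fps playback rates used here for half and quarter speed are illustrative assumptions; 24fps is the rate the article names:

$$
s = \frac{f_{\text{playback}}}{f_{\text{capture}}}, \qquad
\frac{60}{120} = \frac{1}{2}, \quad
\frac{30}{120} = \frac{1}{4}, \quad
\frac{24}{120} = \frac{1}{5}
$$

In other words, each second of 120fps capture stretches to five seconds when conformed to 24fps.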

The camera control interface supports third-party apps like FiLMiC Pro and Grid Composer, enabling advanced features such as precise framing based on the rule of thirds and other composition tools. This further solidifies the iPhone 16 Pro as a versatile tool for video creators.

Audio: Studio-Quality Sound and Spatial Audio

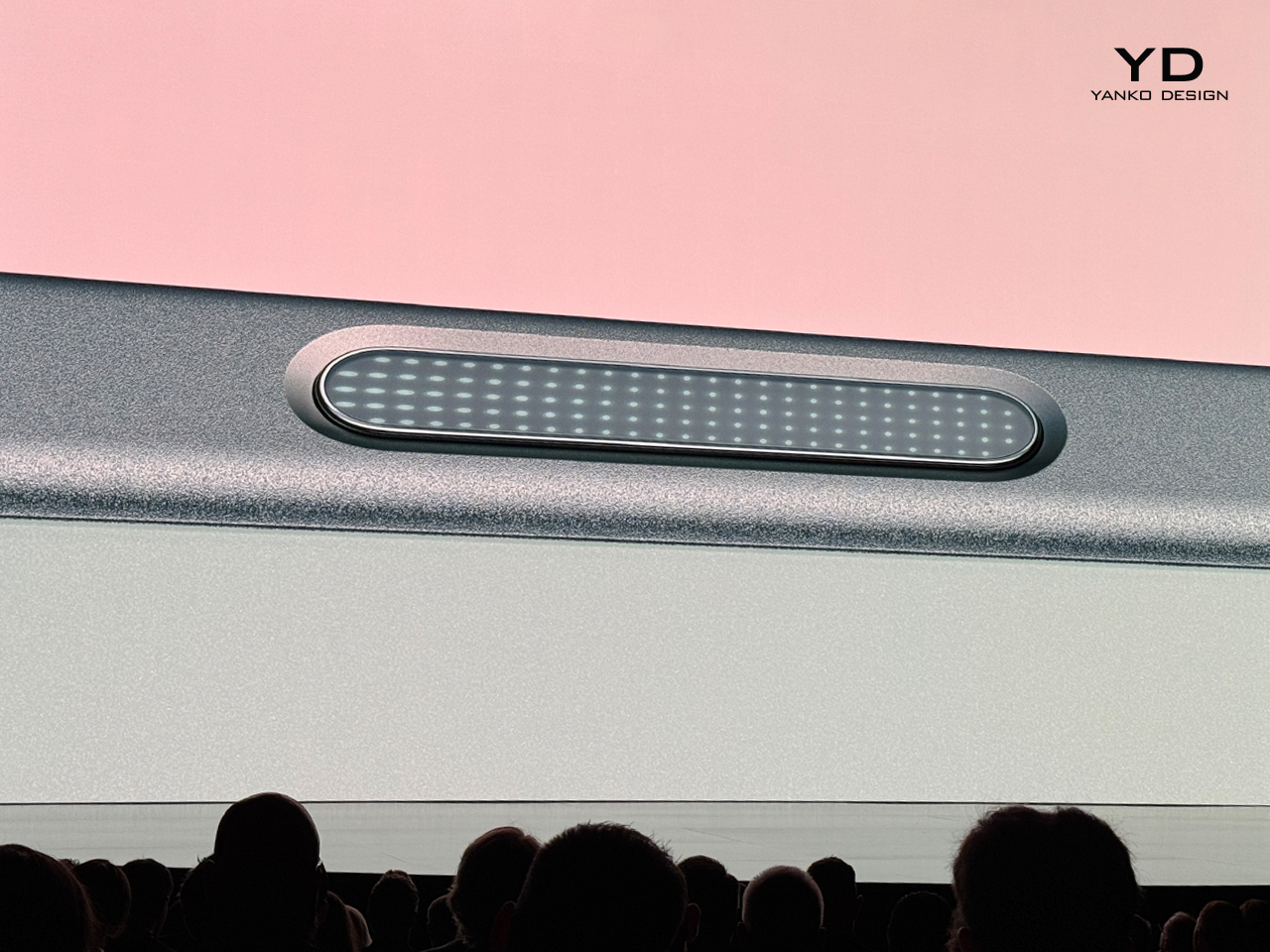

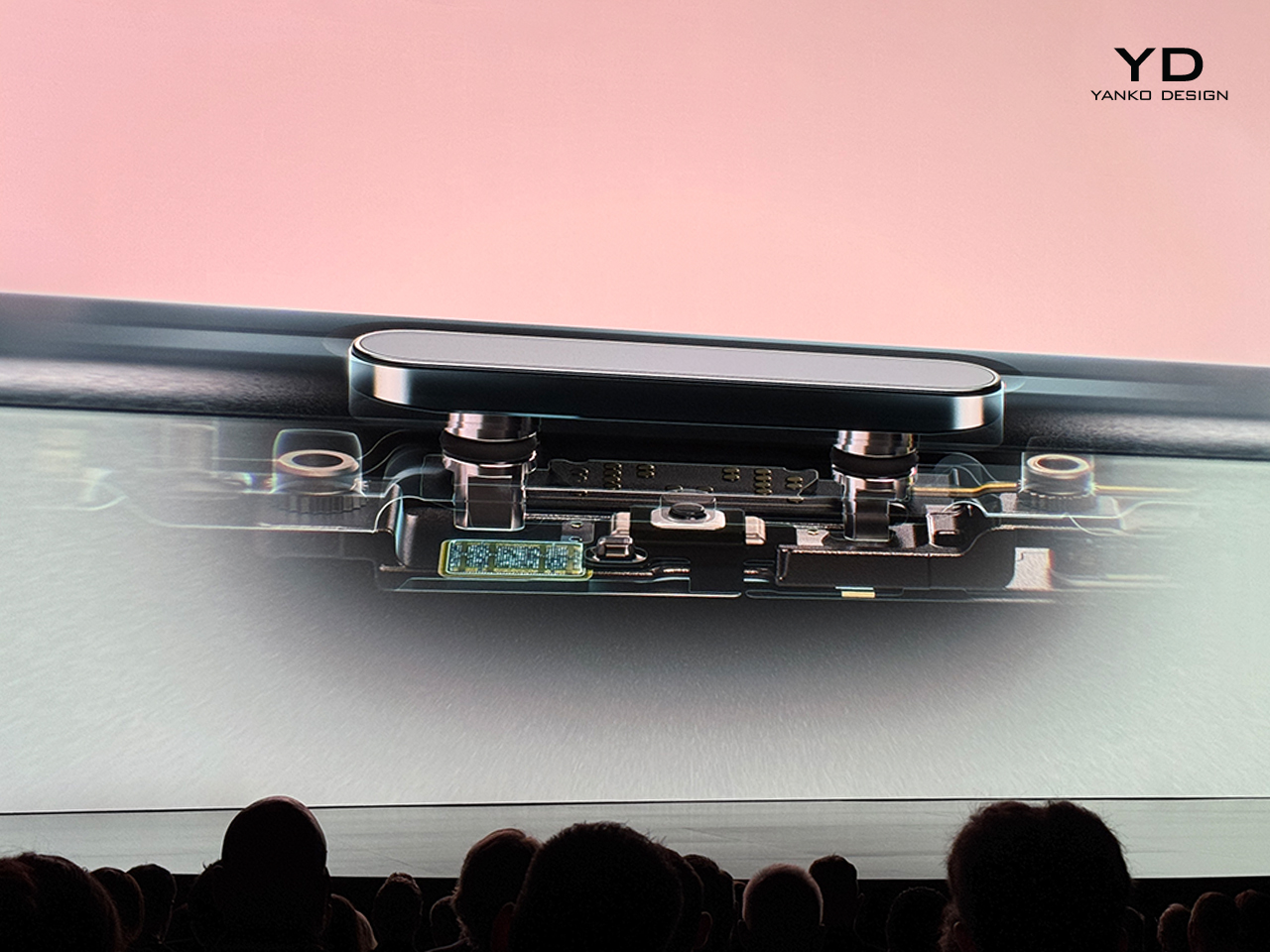

The iPhone 16 Pro also delivers significant audio upgrades. Four studio-quality microphones provide low noise levels for true-to-life sound capture, whether recording vocals or instruments. The reduced noise floor ensures high-quality audio, which is essential for professional recordings.

Another new feature is Spatial Audio capture during video recording, which makes playback more immersive with AirPods or on Apple Vision Pro. Spatial Audio recordings can be edited with the new Audio Mix feature, which uses machine learning to separate voices from background sounds. Audio Mix offers three options: In-frame mix, which isolates the voice of the person on camera; Studio mix, which replicates a professional recording environment by eliminating reverb; and Cinematic mix, which places the vocal track up front with environmental sounds surrounding it.

For content creators, Voice Memos now offers the ability to layer tracks on top of existing recordings. This is especially useful for musicians, who can now add vocals over a guitar track or any other instrumental recording. The system automatically isolates the voice from the background audio for a clean, professional result.

A18 Pro Chip: Powering Creativity and Performance

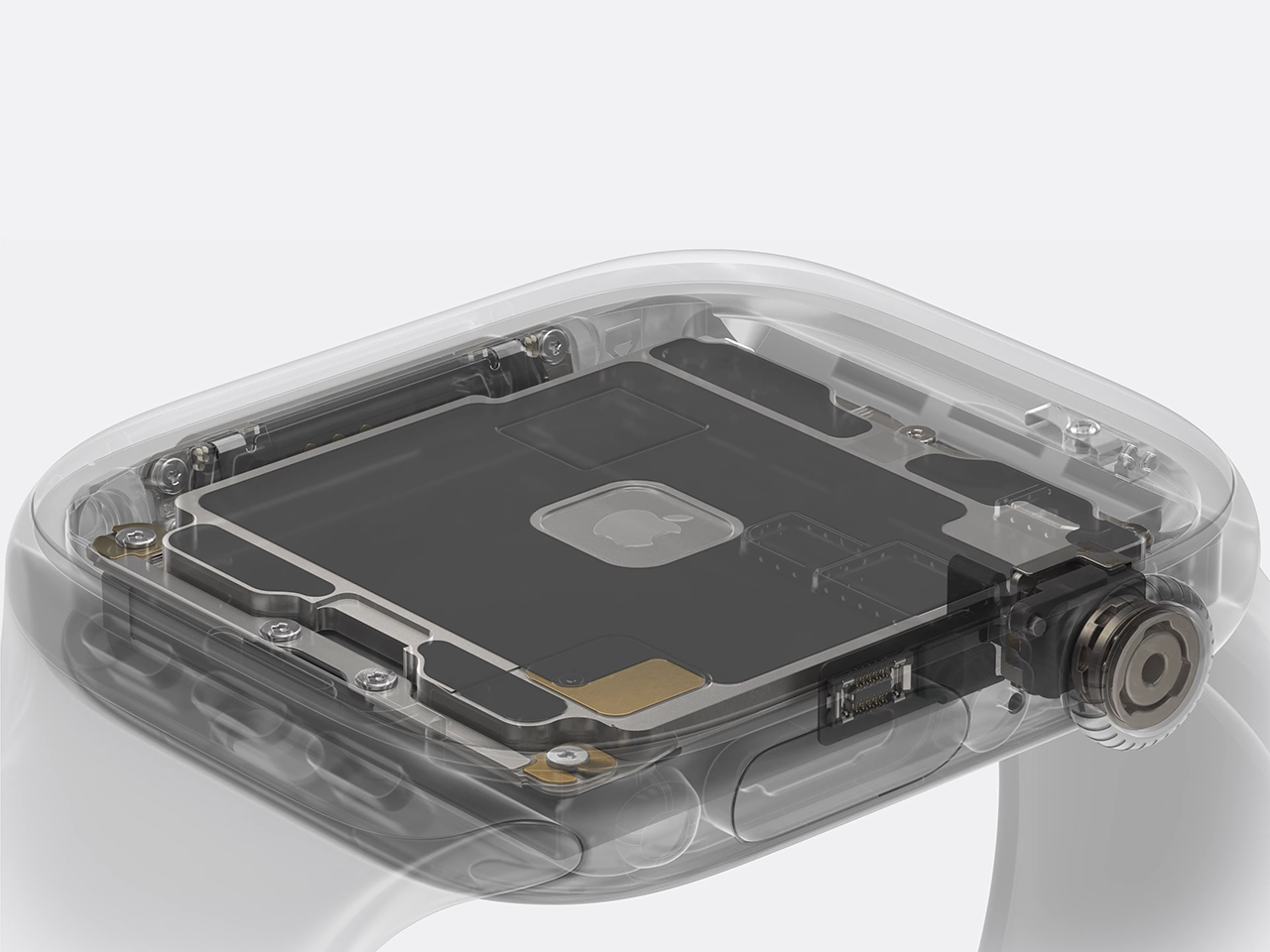

At the core of the iPhone 16 Pro’s new capabilities is the A18 Pro chip, built on second-generation 3-nanometer technology for improved performance and efficiency. Its 16-core Neural Engine is designed for computationally demanding tasks such as machine learning and generative AI. With a 17% increase in system memory bandwidth, the iPhone 16 Pro can handle workloads such as ray tracing in games and 4K video editing.

The A18 Pro chip’s enhanced image signal processor (ISP) enables real-time color grading and drives the iPhone 16 Pro’s advanced photo and video capabilities. Its GPU is also up to 20% faster than the A17 Pro’s, allowing for smoother gaming and more efficient graphics rendering.

iPhone 16 Pro: Is It Time to Switch or Upgrade?

For professional creators, the iPhone 16 Pro delivers the performance and tools needed to meet demanding creative standards. Powered by the A18 Pro chip, it offers advanced Photographic Styles, pro-level video recording, and studio-quality audio. Whether capturing intricate details in images, producing cinematic-quality videos, or recording clear, high-fidelity audio, the iPhone 16 Pro provides the precision and control needed to achieve your creative goals. It is a powerful creative tool designed to push the boundaries of your work and support your vision with every use.

The post Apple Unveils iPhone 16 Pro with New Camera, Video, and Audio Features Powered by A18 Pro Chip first appeared on Yanko Design.