Trying to write on a laptop means fighting a machine that is also a notification box, streaming portal, and social feed. Distraction-free apps help, but they still live inside the same browser-and-tab chaos, surrounded by everything else your computer knows how to do. Some writers just want a device that only knows how to produce plain text and does not care about anything else happening in the world.

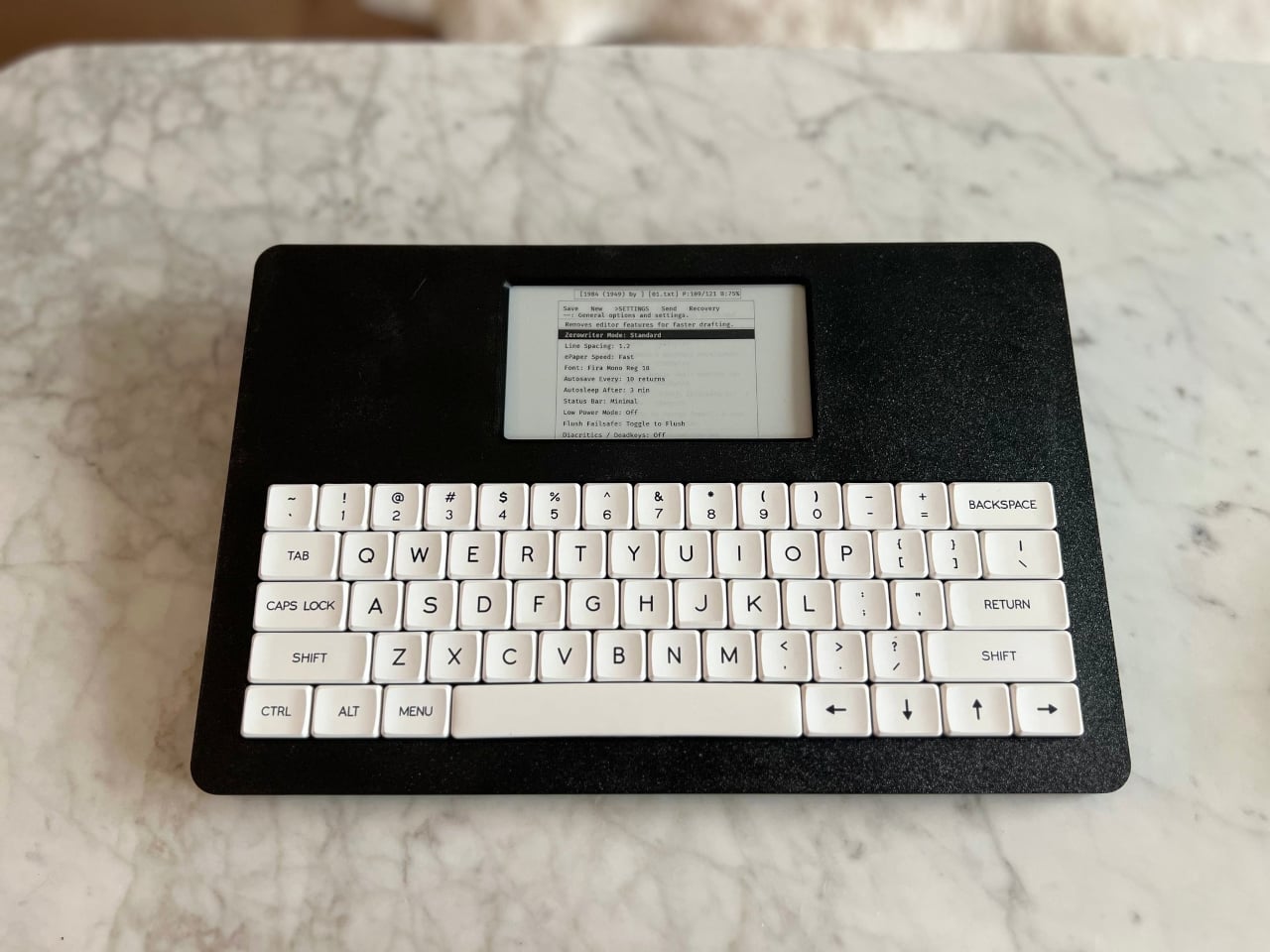

Zerowriter Ink is an open-source e-paper word processor that tries to be exactly that. It combines a 5.2-inch Inkplate e-paper display with a 61-key low-profile mechanical keyboard in a slim slab that fits in a 13-inch laptop sleeve. It wakes instantly, shows a clean page, and runs for weeks on a single charge instead of draining by lunchtime like most laptops.

Designer: Adam Wilk

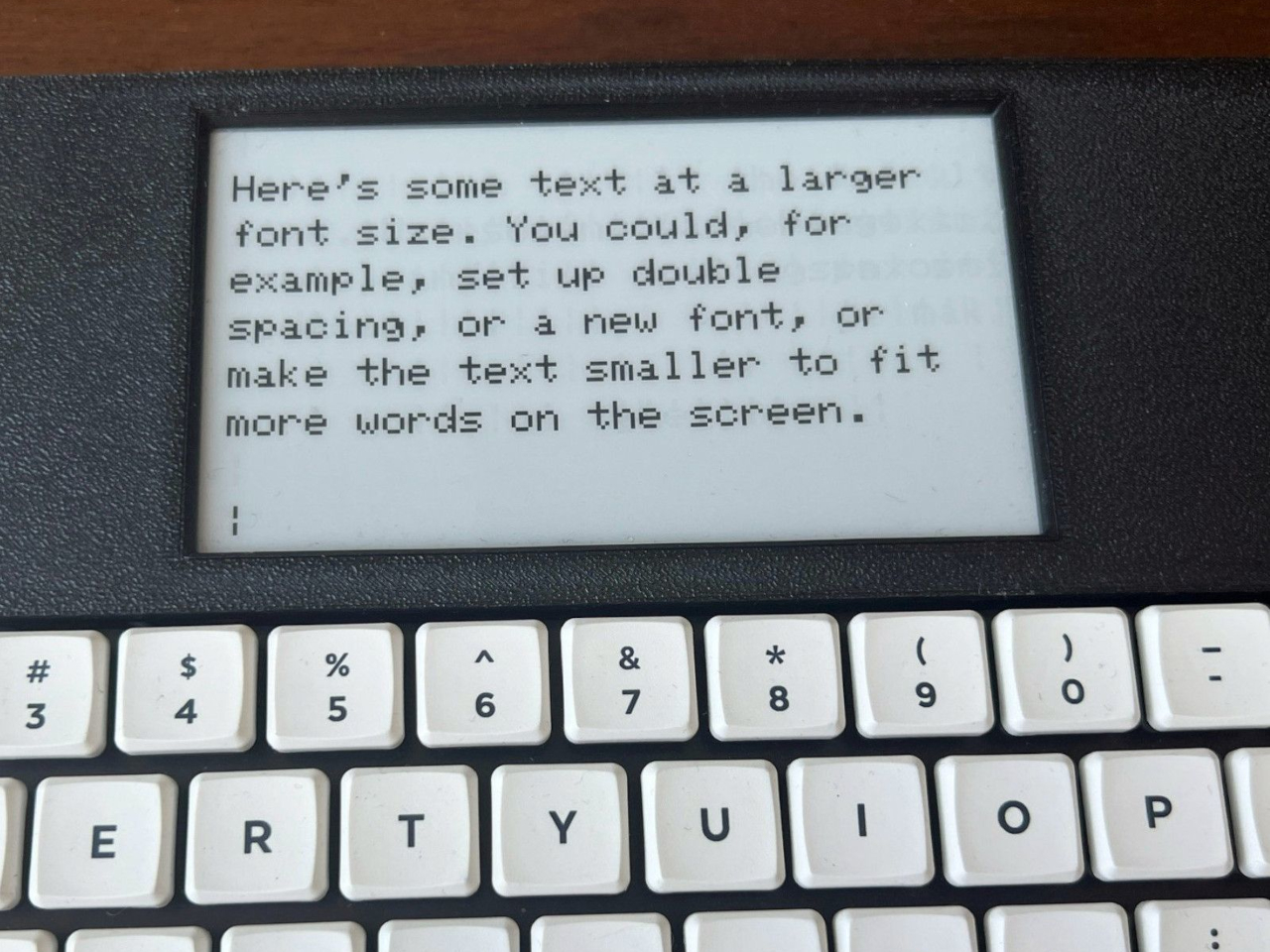

Picture drafting on a park bench or a train: the high-contrast e-paper screen stays readable in direct sunlight and does not blast blue light at you. A custom refresh engine keeps typing lag almost imperceptible, so it feels like a fast e-reader that learned to keep up with your thoughts, not the sluggish e-paper most people know from book pages and bus schedules.

The 60% mechanical keyboard uses Kailh Choc Pro Red switches, and every switch and keycap is hot-swappable. That means you can tune the feel and sound to your taste, or replace a dead switch without tossing the device. It feels more like a compact enthusiast board that happens to have an e-paper screen attached than a sealed writing appliance you cannot repair or modify.

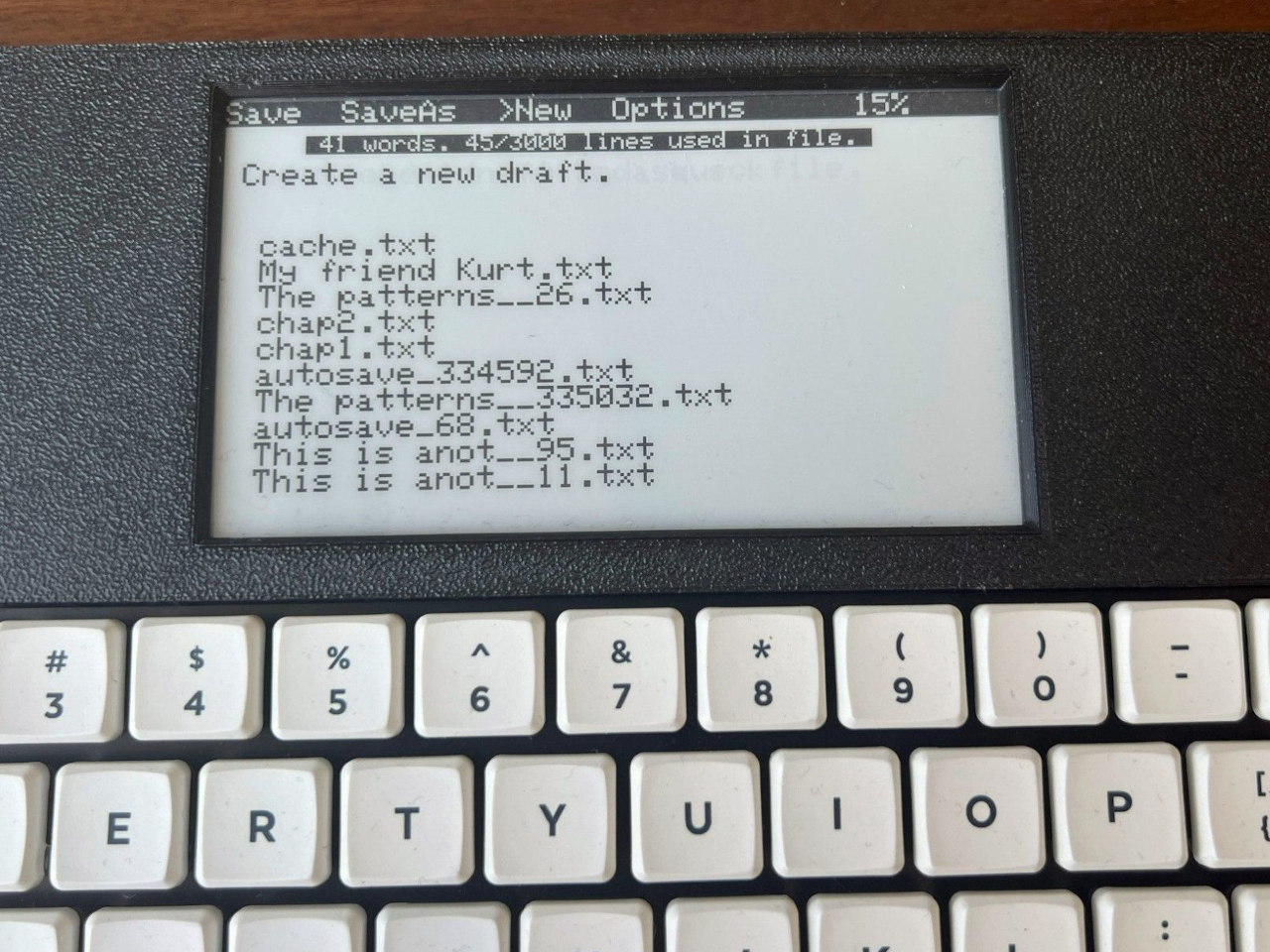

The built-in software offers a drafting mode and a simple word-processing mode, so you can either pour out text or make quick cursor-based edits with the arrow keys. On-device file management lets you save and rename documents, and finished .txt files live on a microSD card. When you are ready to polish, you plug in over USB or scan a QR code to move drafts to your main machine for formatting and revision.

Zerowriter Ink ships completely offline, with no accounts, no cloud sync, and no AI quietly indexing your drafts. Your words stay on the microSD card unless you decide otherwise. At the same time, the ESP32 hardware and Arduino-based firmware mean Wi-Fi and Bluetooth are there for anyone who wants to add sync or other features, either by writing their own build or grabbing one from the community.

The device is not trying to replace laptops. It is trying to give writers a small, reliable space where nothing else happens. It is for people who miss the simplicity of an AlphaSmart but want a sharper screen and a better keyboard, and for tinkerers who like the idea of a writing tool they can open up, both in hardware and in code, once the draft is done and curiosity takes over.

The post Zerowriter Ink Is an Open-Source E-Paper Typewriter Built for Writers first appeared on Yanko Design.