CES 2026 isn't the first year we've seen a wave of interesting robots or even useful robots crop up in Las Vegas. But it's the first year I can remember when there have been so many humanoid and humanoid-like robots performing actually useful tasks. Of those, Switchbot's Onero H1 has been one of the most intriguing robot helpers I've seen on the show floor, especially because the company says that it will actually go on sale later this year (though it won't come cheap).

Up to now, Chinese company Switchbot has been known for its robot vacuums and smart home devices. Much of that expertise is evident in Onero. The unexpectedly cute robot has a wheeled base that looks similar to the company's robot vacuums, but is also equipped with a set of articulated arms that can help it perform common household tasks.

I was able to see some of its abilities at Switchbot's CES booth, where Onero dutifully picked up individual articles of clothing from a couch, rolled over to a washing machine, opened the door, placed the items inside and closed the door. The robot moved a bit slowly; it took nearly two minutes to grab one piece of clothing and deposit it inside the appliance, which was only a few feet away.

I'm not sure if its slowness was a quirk of the poor CES Wi-Fi, a demo designed to maximize conference-goers' attention or a genuine limitation of the robot. But I'm not sure it matters all that much. The whole appeal of a chore robot is that it can take care of things when you're not around; if you come home to a load of laundry that's done, it's not that concerning if the robot took longer to complete the task than you would have. The laundry is done and you don't have to do it. That's the dream.

Under the hood, Onero is powered by RealSense cameras and other sensors that help it learn its surroundings, as well as on-device AI models.

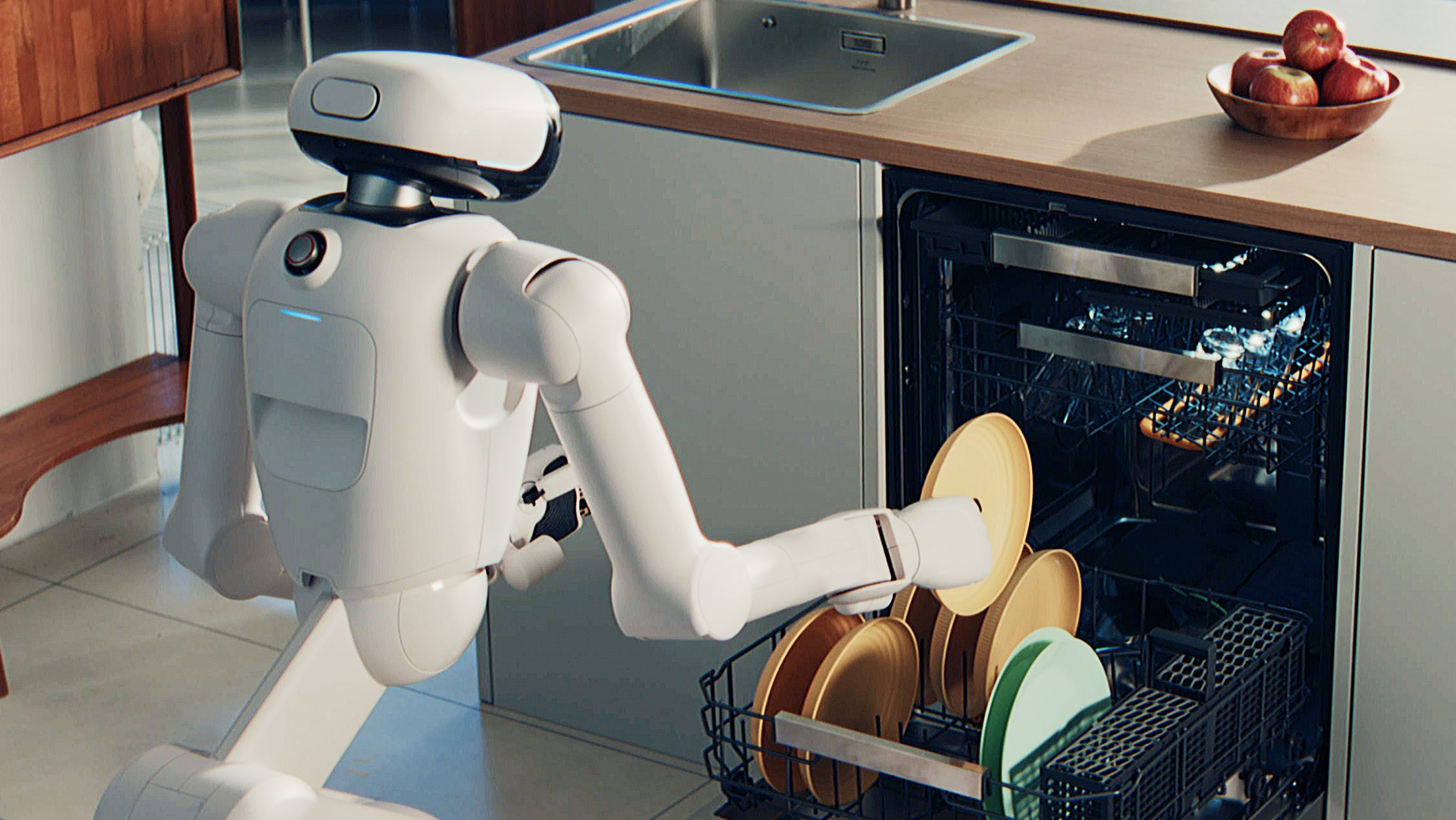

The demo, of course, only offered a very limited glimpse of Onero's potential capabilities. In a promotional video shared by Switchbot, the company suggests the robot can do much, much more: serve food and drinks, put dishes away, wash windows, fold clothes and complete a bunch of other frankly impressive tasks. The Onero in the video also has an articulated hand with five fingers that gives it more dexterity than the claw-hand one I saw at CES. A Switchbot rep told me, though, that the company plans to offer both versions when the robot does go on sale.

Which brings me to the most exciting part about watching Onero: the company is actually planning on selling it this year. A Switchbot rep confirmed to me it will be available to buy sometime in 2026, though it will likely be closer to the end of the year. The company hasn't settled on a final price, but I was told it will be "less than $10,000."

While we don't know how much less, it's safe to say Onero won't come cheap. It also seems fair to say that this will be a very niche device compared to many of Switchbot's other products. But if it can competently handle everything the company claims it can, then there are probably a lot of people and businesses that would be willing to pay.