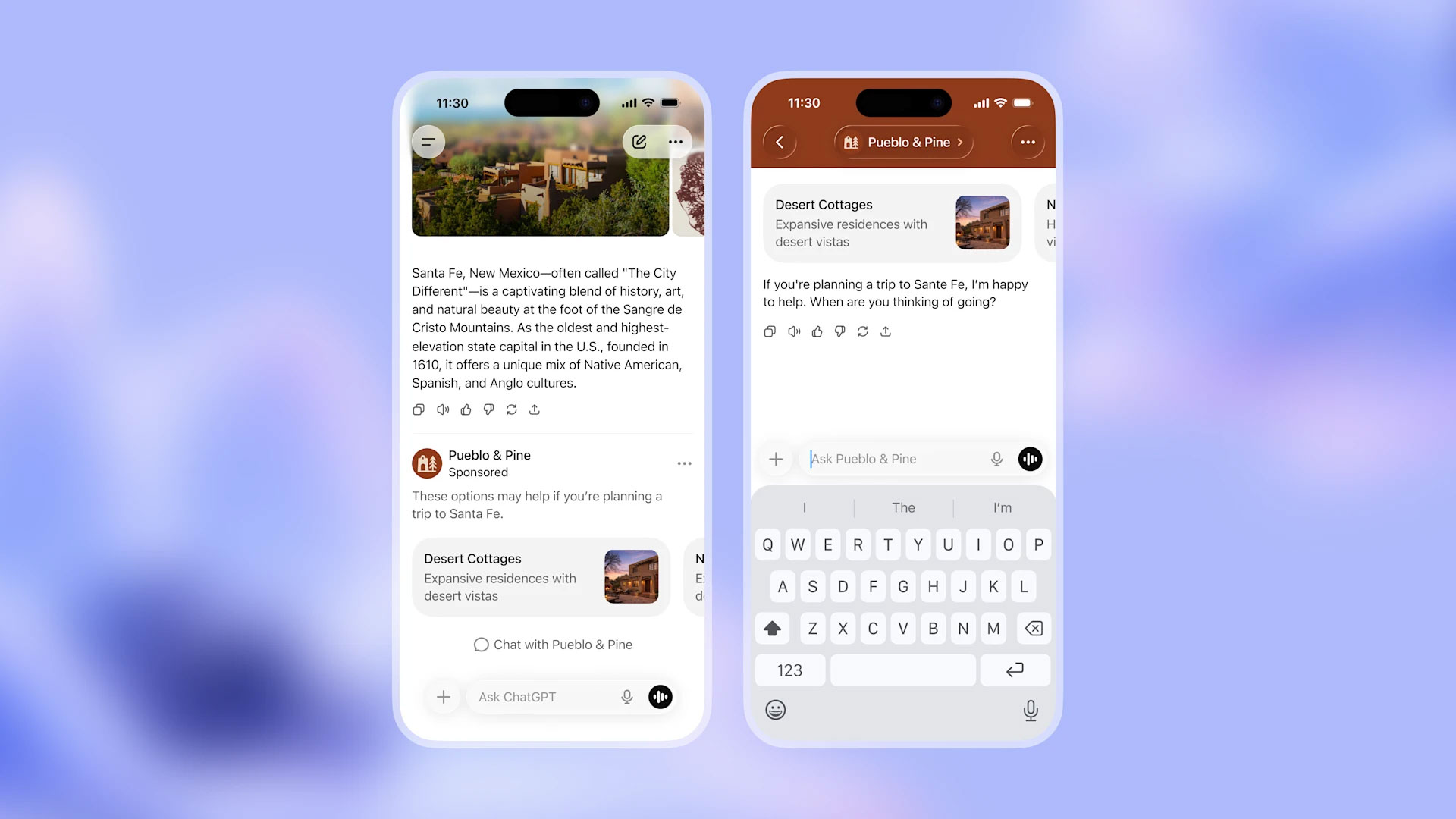

OpenAI plans to start testing ads inside of ChatGPT "in the coming weeks." In a blog post published Friday, the company said adult US users on its free and Go tiers (more on the latter in a moment) would start seeing sponsored products and services appear below their conversations with its chatbot. "Ads will be clearly labeled and separated from the organic answer," OpenAI said, adding that sponsored spots would not influence the answers ChatGPT generates. "Answers are optimized based on what's most helpful to you."

OpenAI says people won't see ads when they're talking to ChatGPT about sensitive subjects like their health, mental health or current politics. The company also won't show ads to users under the age of 18. As for privacy, OpenAI states it won't share your data with advertisers or sell it to them. The company will also give users the option to disable ad personalization and clear the data it uses to generate sponsored responses. "We’ll always offer a way to not see ads in ChatGPT, including a paid tier that’s ad-free," OpenAI adds. Users can dismiss an ad, at which point they'll be asked to explain why they didn't engage with it.

"Given what AI can do, we're excited to develop new experiences over time that people find more helpful and relevant than any other ads. Conversational interfaces create possibilities for people to go beyond static messages and links," OpenAI said. However, the company was also quick to note its "long-term focus remains on building products that millions of people and businesses find valuable enough to pay for."

To that point, OpenAI said it would also make its ChatGPT Go subscription available to users in the US. The company first launched the tier in India last August, marketing it as a low-cost alternative to its more expensive Plus and Pro offerings. In the US, Go will cost $8 per month, or $12 less than the monthly price of the Plus plan, and offer rate limits for messages, file uploads and image creation that are 10 times higher than those of the free tier. The subscription also extends ChatGPT's memory and context window, meaning the chatbot will be better at remembering details from past conversations. That said, you'll still see ads at this tier. To go ad-free, you'll need to subscribe to one of OpenAI's more expensive plans. For consumers, that means either the Plus or Pro plan.

According to reports, OpenAI had been testing ads inside of ChatGPT since at least the end of last year. As companies continue to pay a high cost for model training and inference, all chatbots are likely to feature ads in some form.

This article originally appeared on Engadget at https://www.engadget.com/ai/openai-is-bringing-ads-to-chatgpt-192831449.html?src=rss