Smart home sensors have gotten pretty good at detecting when you walk into a room, but they’re still terrible at knowing when you’re actually there. Most motion sensors trigger when you move, then decide you’ve left shortly after you sit down to read or work at a desk. That means your lights switch off while you’re still in the room, forcing you to wave your arms like you’re trying to flag down a rescue helicopter. It’s the kind of everyday annoyance that makes smart homes feel less smart and more like they’re making educated guesses.

The Aqara Presence Multi-Sensor FP300 solves this by pairing a passive infrared (PIR) sensor with 60GHz mmWave radar, which together detect both motion and stationary presence. That dual-sensor setup means the device knows you’re there even when you’re sitting perfectly still, which is exactly what presence detection should have been doing all along. The sensor also packs temperature, humidity, and light sensors into its compact body, turning it into a five-in-one device that can automate everything from lighting to climate control based on actual occupancy.
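To make the idea concrete, here’s a minimal Python sketch of how a PIR reading and a radar reading could be combined into a single occupancy state. It’s an illustration under stated assumptions, not Aqara’s firmware: the `PresenceFusion` class and its `hold_seconds` grace period are hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PresenceFusion:
    """Hypothetical occupancy logic that ORs a PIR reading with an mmWave reading.

    hold_seconds is an assumed grace period, not a documented FP300 setting.
    """

    hold_seconds: float = 30.0
    last_seen: Optional[float] = None  # timestamp of the most recent detection

    def update(self, now: float, pir_motion: bool, radar_presence: bool) -> bool:
        # PIR catches movement quickly; radar keeps reporting a person sitting still.
        if pir_motion or radar_presence:
            self.last_seen = now
        # Report "occupied" until neither sensor has detected anyone for hold_seconds.
        return self.last_seen is not None and now - self.last_seen < self.hold_seconds


# Someone walks in, then sits still at a desk for a minute.
with_radar = PresenceFusion(hold_seconds=30)
print(with_radar.update(0, pir_motion=True, radar_presence=True))    # True
print(with_radar.update(60, pir_motion=False, radar_presence=True))  # True

# The same scenario with a PIR-only sensor (radar never reports anything).
pir_only = PresenceFusion(hold_seconds=30)
print(pir_only.update(0, pir_motion=True, radar_presence=False))     # True
print(pir_only.update(60, pir_motion=False, radar_presence=False))   # False
```

The PIR-only case shows the familiar failure: once you stop moving, the grace period runs out and the room is declared empty, while the radar reading keeps resetting the timer for as long as you’re there.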

Designer: Aqara

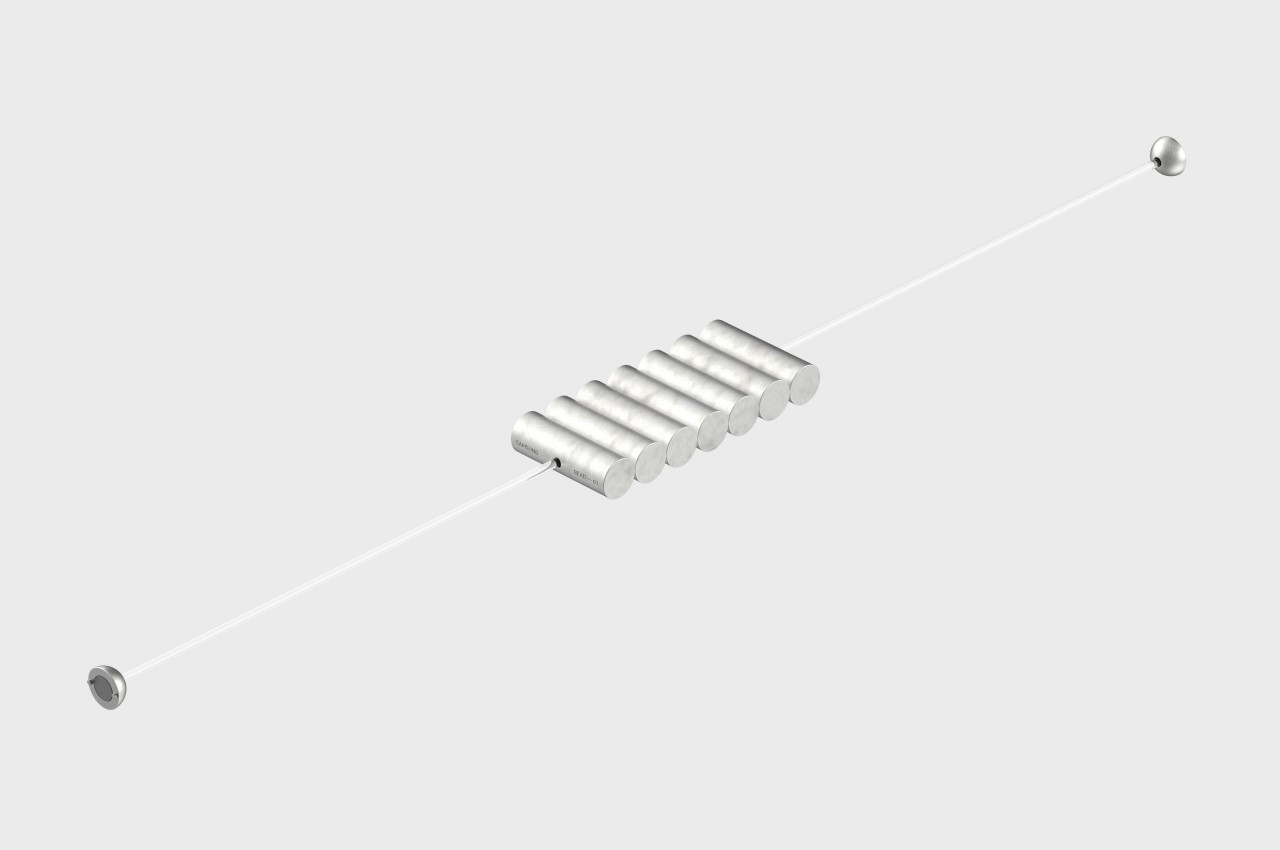

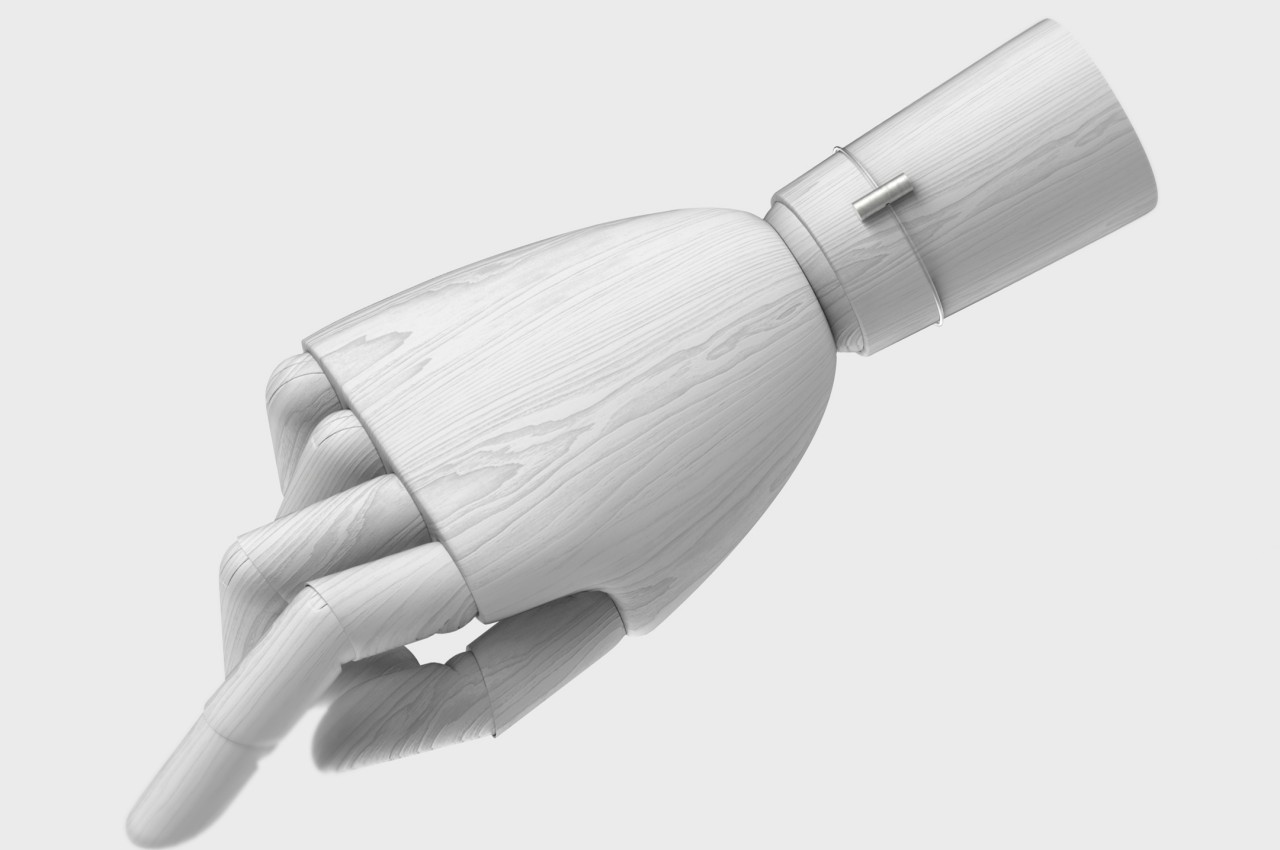

The FP300 itself is a small, cylindrical unit just 42mm across and 50mm tall. It’s designed to blend in rather than stand out, with a clean white finish and subtle Aqara branding. The real advantage is how flexible placement can be: you can mount it on walls or ceilings, tuck it into corners, attach it to magnetic surfaces like refrigerators, or simply set it on a shelf or desk with no mounting hardware at all. That kind of freedom is rare for presence sensors, which usually require wired power or specific mounting positions.

Of course, being battery-powered raises questions about longevity, but Aqara claims up to three years of battery life over Zigbee, or two years over Thread. The FP300 runs on two replaceable CR2450 coin cells, which makes that figure surprisingly long for a device that’s constantly monitoring presence and environmental conditions. You can stretch it further by disabling sensors you don’t use or lengthening reporting intervals if you don’t need every data point the device can collect.

The FP300 supports both Zigbee and Thread protocols, which means it works with pretty much every major smart home platform through Matter. Apple Home, Google Home, Alexa, SmartThings, and Home Assistant are all compatible, though you’ll need either an Aqara hub for Zigbee or a Thread border router to get everything working. Using Zigbee unlocks extra customization options in the Aqara Home app, like adjusting detection sensitivity and tweaking reporting intervals.

What makes the FP300 feel genuinely useful is how those five sensors work together. Presence detection keeps the lights on while you’re in the room, and the light sensor stops them from turning on when there’s already enough daylight. Temperature and humidity readings can drive your HVAC system only when someone’s actually home, saving energy without sacrificing comfort. It’s the kind of layered automation that makes smart homes feel less gimmicky and more practical.
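As a rough illustration of that layering, the Python sketch below turns one snapshot of readings into lighting and climate decisions. Everything here is an assumption for the example: the `Reading` and `decide` names, the 50 lux “dark” threshold, the 21°C setpoint, and the 60% humidity limit are hypothetical and don’t reflect the Aqara Home app or any platform’s automation API.

```python
from dataclasses import dataclass
from typing import Dict, Union

# Assumed thresholds for illustration only; tune to taste.
LUX_DARK = 50.0      # below this, the room counts as "dark"
TARGET_C = 21.0      # desired temperature
DEADBAND_C = 1.0     # tolerance before heating or cooling kicks in
HUMIDITY_MAX = 60.0  # above this, run a dehumidifier


@dataclass
class Reading:
    """A snapshot of sensor readings, with PIR and radar already fused into one flag."""
    occupied: bool
    lux: float
    temperature_c: float
    humidity_pct: float


def decide(r: Reading) -> Dict[str, Union[bool, str]]:
    # Lights: only when someone is present AND daylight is insufficient.
    lights_on = r.occupied and r.lux < LUX_DARK
    # Climate: only act when occupied; otherwise leave HVAC off to save energy.
    hvac = "off"
    if r.occupied:
        if r.temperature_c < TARGET_C - DEADBAND_C:
            hvac = "heat"
        elif r.temperature_c > TARGET_C + DEADBAND_C:
            hvac = "cool"
    # Humidity: dehumidify only for an occupied, muggy room.
    dehumidify = r.occupied and r.humidity_pct > HUMIDITY_MAX
    return {"lights_on": lights_on, "hvac": hvac, "dehumidify": dehumidify}


print(decide(Reading(occupied=True, lux=10, temperature_c=18, humidity_pct=40)))
# {'lights_on': True, 'hvac': 'heat', 'dehumidify': False}
print(decide(Reading(occupied=False, lux=10, temperature_c=30, humidity_pct=80)))
# {'lights_on': False, 'hvac': 'off', 'dehumidify': False}
```

The key design choice is that occupancy gates every rule: an empty room never heats, cools, or lights up, no matter what the other sensors report.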

At around $50, the FP300 sits between basic motion sensors that lose track of you the moment you stop moving and wired presence sensors that cost more and often require professional installation. For anyone building out a smart home without tearing into walls or dealing with complicated wiring, that’s a reasonable trade-off. The fact that you can just plop it on a shelf and have it start working makes the whole setup feel refreshingly simple for once.

The post Aqara FP300 Detects You Even When You’re Sitting Perfectly Still first appeared on Yanko Design.