Imagine a robotic hand that not only mimics human dexterity but completely reimagines what a hand can do. Researchers at École Polytechnique Fédérale de Lausanne (EPFL) have developed something that looks like it crawled straight out of a sci-fi fever dream: a modular robotic hand that can detach from its arm, scuttle across surfaces spider-style, and grab multiple objects at once.

The human hand has long been considered the gold standard for dexterity. But here’s the thing about trying to replicate perfection: you often inherit its limitations, too. Our hands are fundamentally asymmetrical. We have one opposable thumb per hand, which means we’re constantly repositioning our wrists and contorting our bodies to reach awkwardly placed objects or grasp items from different angles. Try reaching behind your hand while keeping a firm grip on something, and you’ll quickly understand the problem.

Designer: École Polytechnique Fédérale de Lausanne’s (EPFL) school of engineering

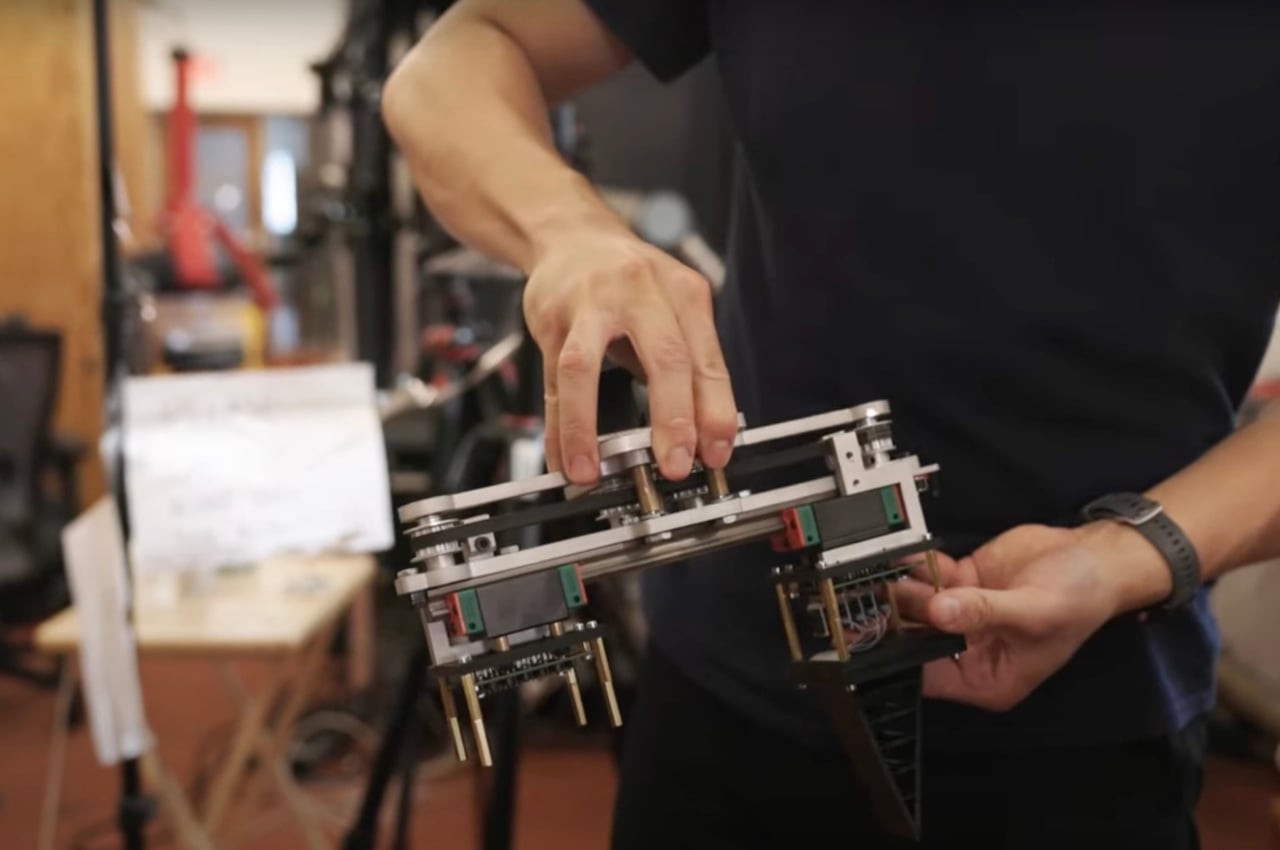

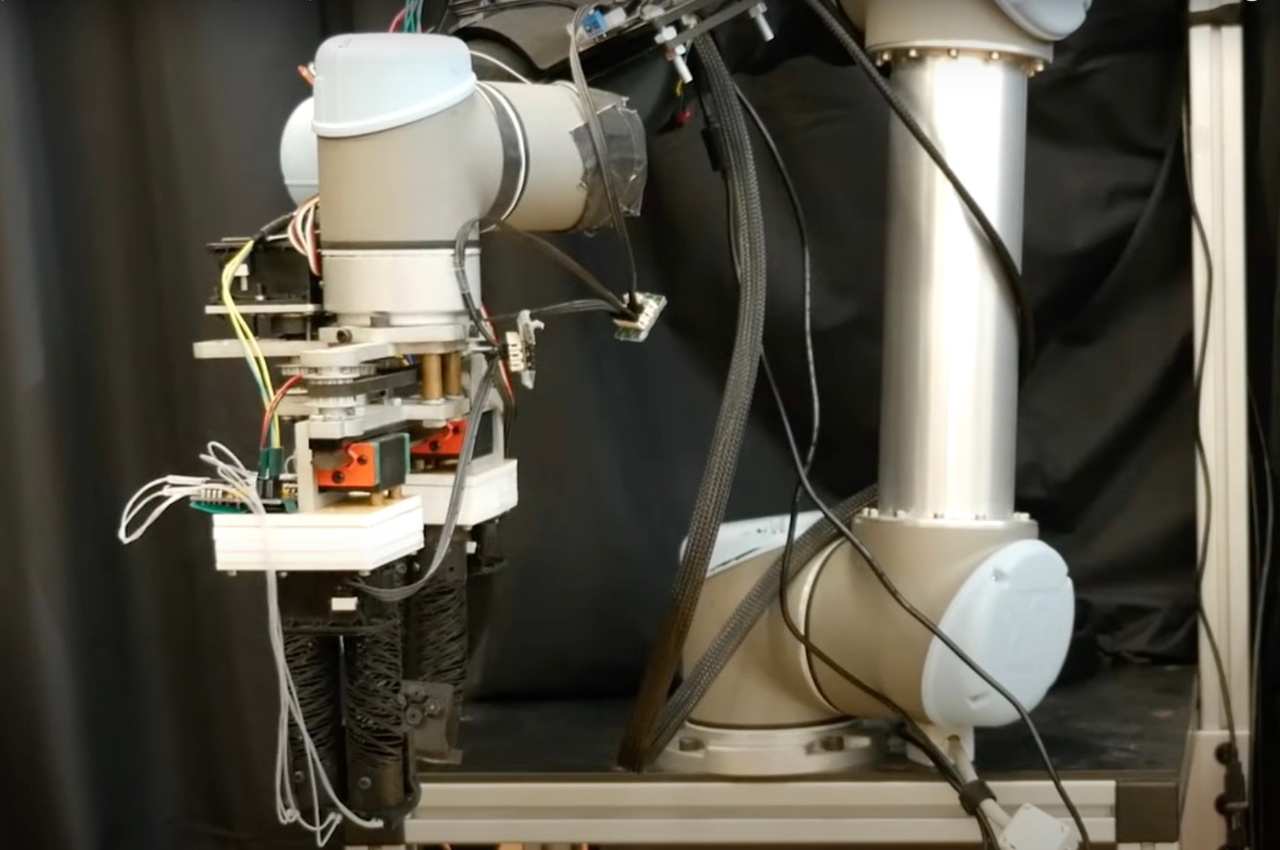

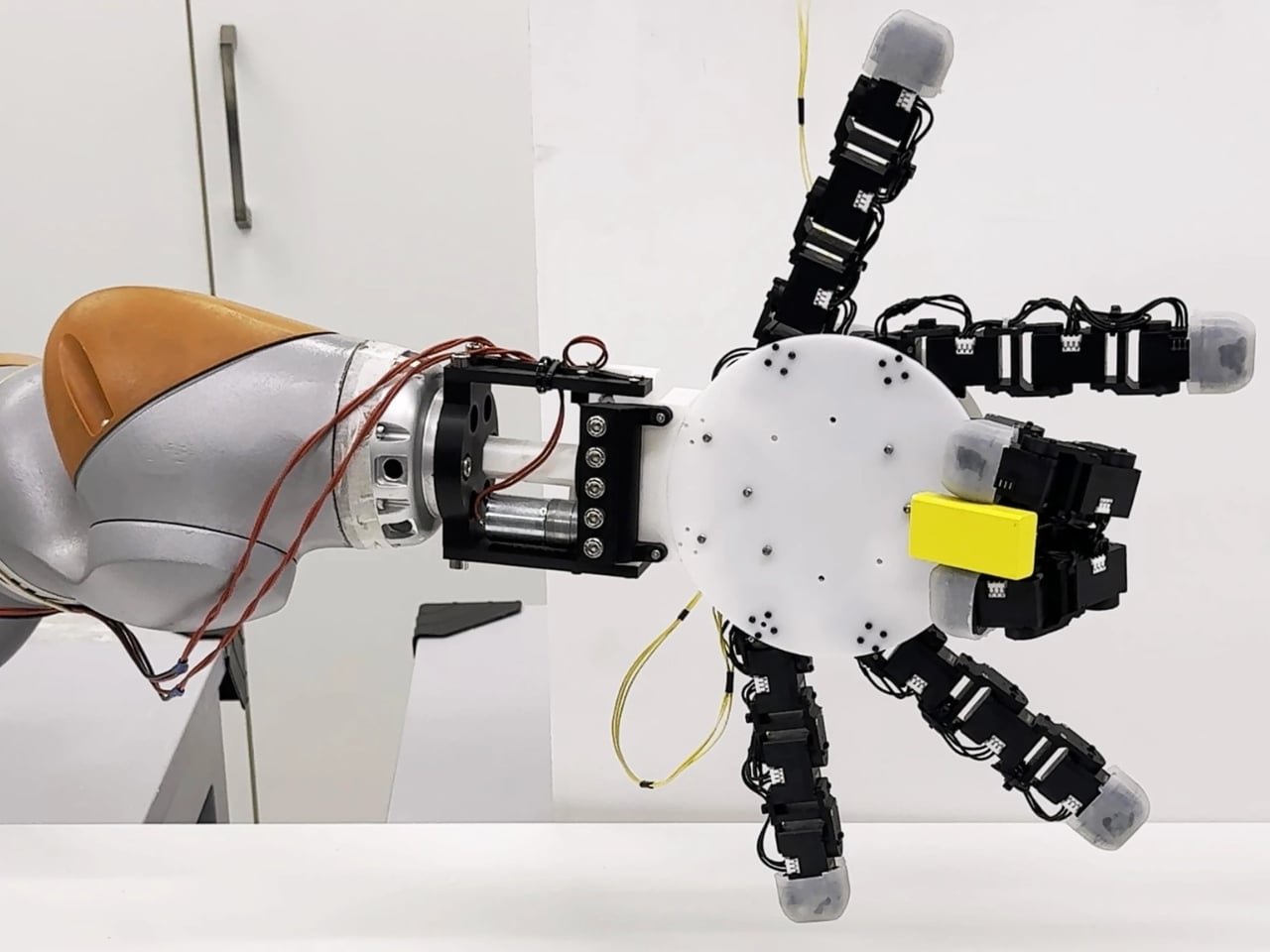

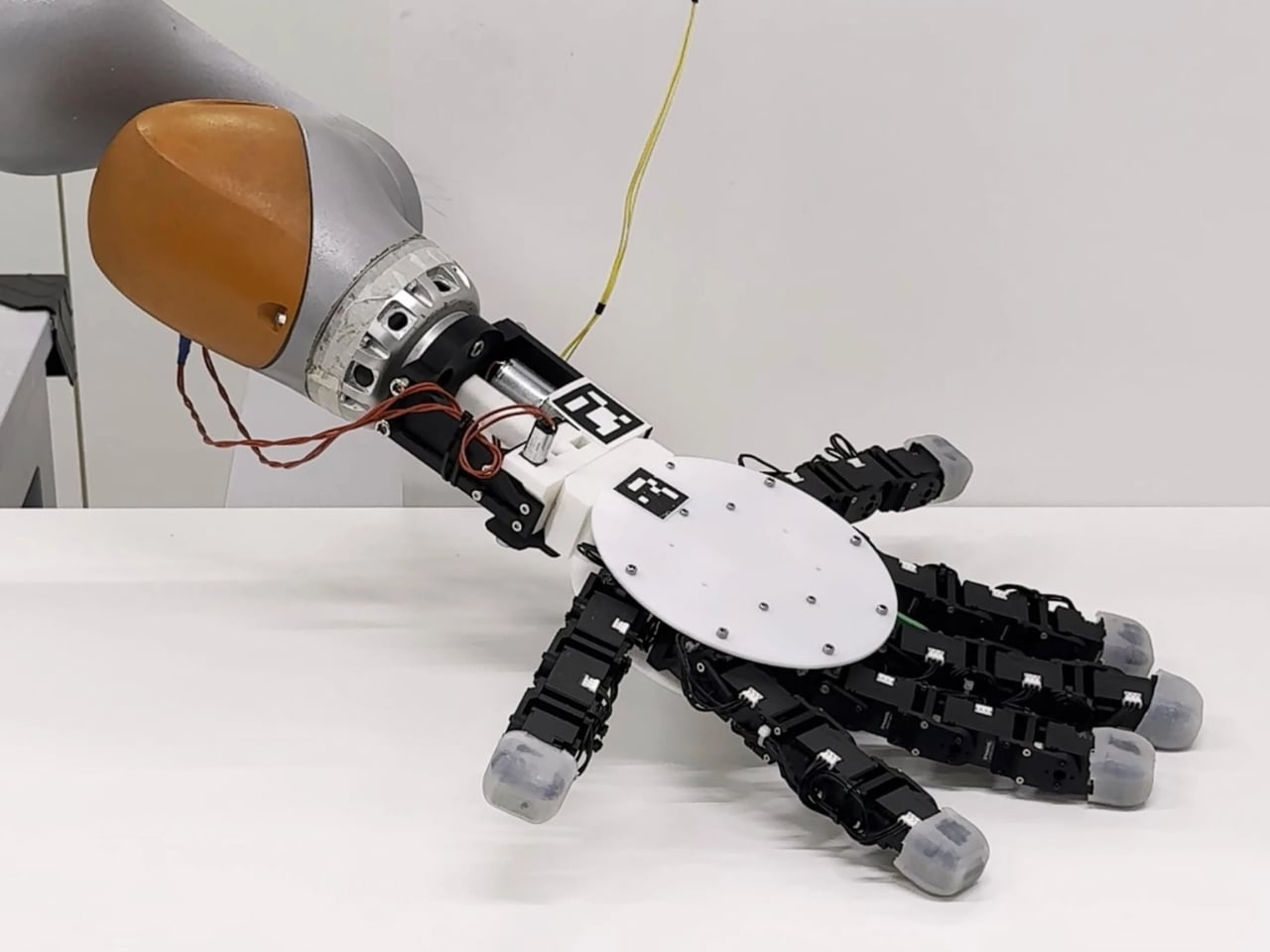

The team at EPFL, led by Aude Billard from the Learning Algorithms and Systems Laboratory, decided to throw the rulebook out the window. Instead of copying human anatomy, they created something better: a symmetrical hand that features up to six identical fingers, each tipped with silicone for grip. The genius lies in the design, where any combination of fingers can form opposing pairs for pinching and grasping. No single designated thumb here.
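To get a feel for why dropping the designated thumb matters, here is a rough counting sketch. It is not the EPFL team’s method, and the function names `opposing_pairs` and `thumb_pairs` are hypothetical. It assumes every two-finger pairing is physically usable, which the real hardware may not guarantee.

```python
from itertools import combinations


def opposing_pairs(finger_count):
    """Pairings when any finger may oppose any other (assumed fully symmetric hand)."""
    fingers = range(1, finger_count + 1)
    return list(combinations(fingers, 2))


def thumb_pairs(finger_count):
    """Pairings when only finger 1, a designated thumb, can oppose the others."""
    return [(1, other) for other in range(2, finger_count + 1)]


if __name__ == "__main__":
    # Compare a thumb-based layout with a symmetric one for several finger counts.
    for n in (3, 4, 5, 6):
        print(f"{n} fingers: {len(thumb_pairs(n))} thumb-based pairs, "
              f"{len(opposing_pairs(n))} symmetric pairs")
```

Under these assumptions, a five-fingered hand with one thumb offers 4 thumb-to-finger pinches, while a six-fingered symmetric hand offers 15 possible pairings. That is a simple count of options, not a measure of grasp quality.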

But wait, it gets wilder. The hand is completely reversible, meaning the palm and back are interchangeable. Flip it over, and it works just as effectively from either side. This eliminates the need for awkward repositioning and opens up grasping possibilities that humans simply can’t achieve. The device can perform 33 different types of human grasping motions, and thanks to its modular design, it can hold multiple objects simultaneously with fewer fingers than we’d need.

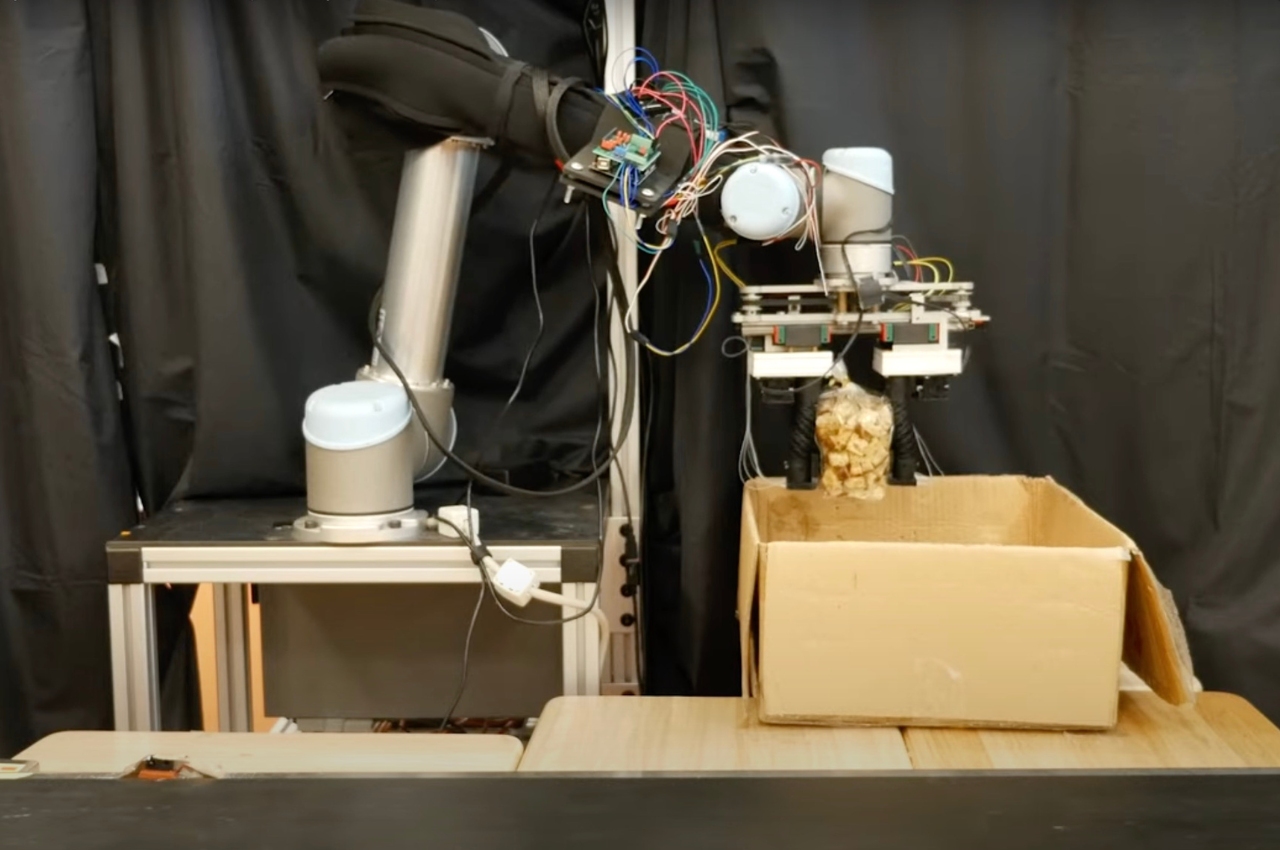

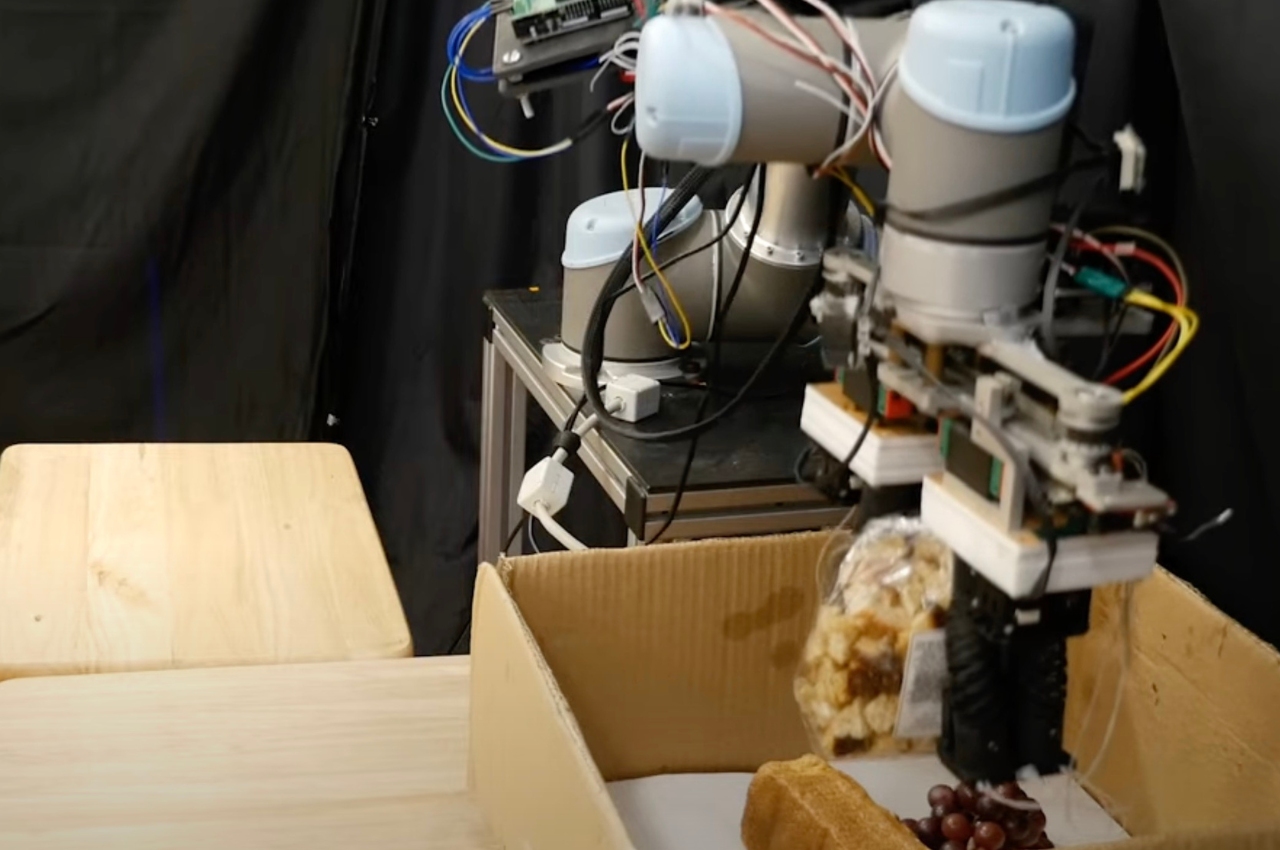

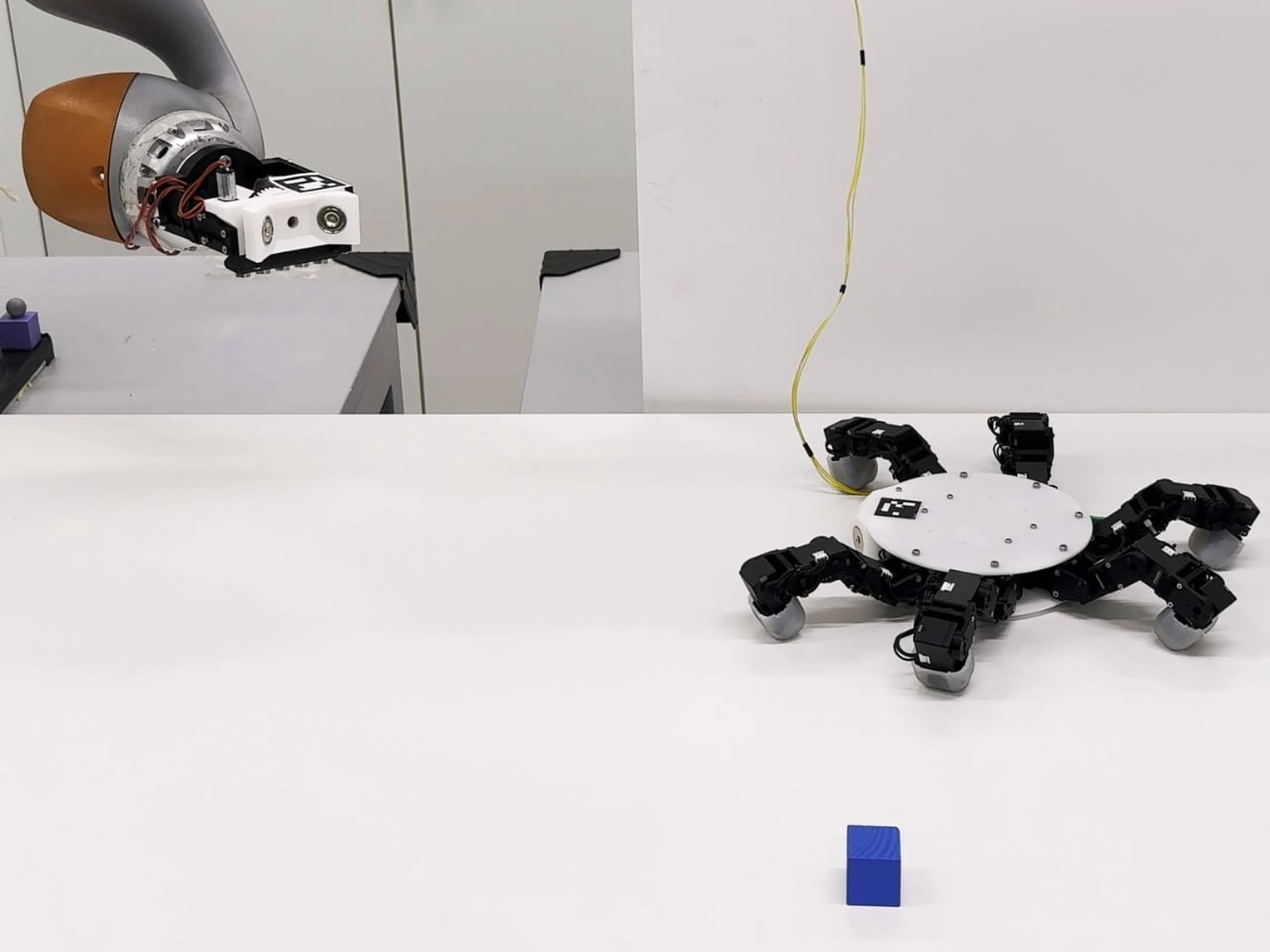

The most mind-bending feature? This hand can literally walk away from its job. Using a magnetic attachment and motor-driven bolt system, it detaches from its robotic arm and crawls independently to retrieve objects beyond the arm’s reach. Picture a warehouse robot that needs to grab something just out of range. Instead of the entire system repositioning, the hand simply walks over, grabs what it needs, and returns like a loyal (if slightly creepy) pet.

The practical applications are staggering. In industrial settings, this kind of “loco-manipulation” (locomotion plus manipulation) could revolutionize how robots interact with their environments. Service robots could navigate complex spaces and handle multiple tasks without constant human intervention. In exploratory robotics (think Mars rovers or deep-sea vehicles), a detachable hand could investigate tight spaces or retrieve samples from areas the main body can’t access.

The research team’s work, published in Nature, demonstrates that the symmetrical design performs measurably better, improving crawling distance by 5 to 10 percent over traditional asymmetric configurations. The hand’s palm, 160 mm in diameter, houses motors that mimic the natural forward movement of human finger joints, but without being constrained by human limitations.

What makes this project so compelling isn’t just the technical achievement. It’s the philosophical shift it represents. For years, robotics has been obsessed with replicating human form and function. But by questioning whether human design is actually optimal for all tasks, the EPFL team has created something that surpasses our biological blueprint. It’s a reminder that innovation often requires abandoning our assumptions about how things should work.

This robotic hand represents more than just another engineering marvel. It’s a glimpse into a future where machines aren’t limited by human constraints, where form follows function in unexpected ways, and where a hand doesn’t need to stay attached to be incredibly handy. Whether it’s retrieving your dropped phone from under the couch or assembling complex machinery in factories, this crawling, grasping, reversible wonder proves that sometimes the best way forward is to let go of convention entirely.

The post This 6-Fingered Robot Hand Crawls Away From Its Own Arm first appeared on Yanko Design.