The best gaming accessories solve problems you didn’t know you had. Before you use them, the old way seems fine. After you use them, going back feels like torture. Belkin’s new Charging Case Pro for Nintendo Switch 2 falls squarely into this category.

The original Charging Case launched last June with a solid concept: protect your Switch 2 and keep it powered during long trips. But Belkin’s designers clearly listened to user feedback and spotted the pain points. The Pro version addresses nearly every frustration from the first generation. You can charge the battery without unzipping the case. You get a proper integrated stand instead of relying on the console’s kickstand. There’s even a battery level display so you’re never guessing how much juice remains. These aren’t revolutionary features individually, but together they transform a good accessory into an essential one.

Designer: Belkin

Having to unzip your case, fish out the battery pack, plug it in separately, then put everything back together just to charge overnight is the kind of stupid friction that kills products. The external USB-C port fixes this entirely. Leave your Switch 2 inside, plug one cable into the front, done. The little OLED display shows precise battery percentage, which actually matters when you’re deciding whether to top up before a three-hour flight or risk it.

The 10,000mAh battery doubles as an adjustable stand with a built-in USB-C connector. The Switch 2 slots onto it like the official dock and starts charging immediately at whatever viewing angle works. The original version forced users to balance the console on its mediocre kickstand while a cable dangled between device and battery. One approach solves the problem. The other creates new ones.

Belkin claims 1.5 full charges from the 10,000mAh capacity, translating to 12 to 15 extra hours depending on what’s running. Graphically intensive games like Zelda sequels will drain faster than pixel art indie platformers, but either way that’s enough power to cross the Atlantic twice before needing an outlet. For a case this compact, the capacity-to-size ratio makes sense. A bigger battery would mean carrying a brick.
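For anyone who wants to sanity-check those numbers, here is a minimal sketch of what the quoted figures imply per full charge. It only divides the article's 12 to 15 hour range by the claimed 1.5 charges; the function name is made up for illustration, and the result is an implied figure, not a measured battery life.

```python
def hours_per_charge(extra_hours: float, full_charges: float) -> float:
    """Playtime implied per full charge, given total extra hours and charge count."""
    return extra_hours / full_charges


CLAIMED_CHARGES = 1.5         # Belkin's claimed number of full charges
EXTRA_HOURS_RANGE = (12, 15)  # extra playtime range quoted above

low, high = (hours_per_charge(h, CLAIMED_CHARGES) for h in EXTRA_HOURS_RANGE)
print(f"Implied playtime per full charge: {low:.0f} to {high:.0f} hours")
```

Real-world playtime will depend on the game, screen brightness, and charging losses, so treat the output as a rough ceiling rather than a promise.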

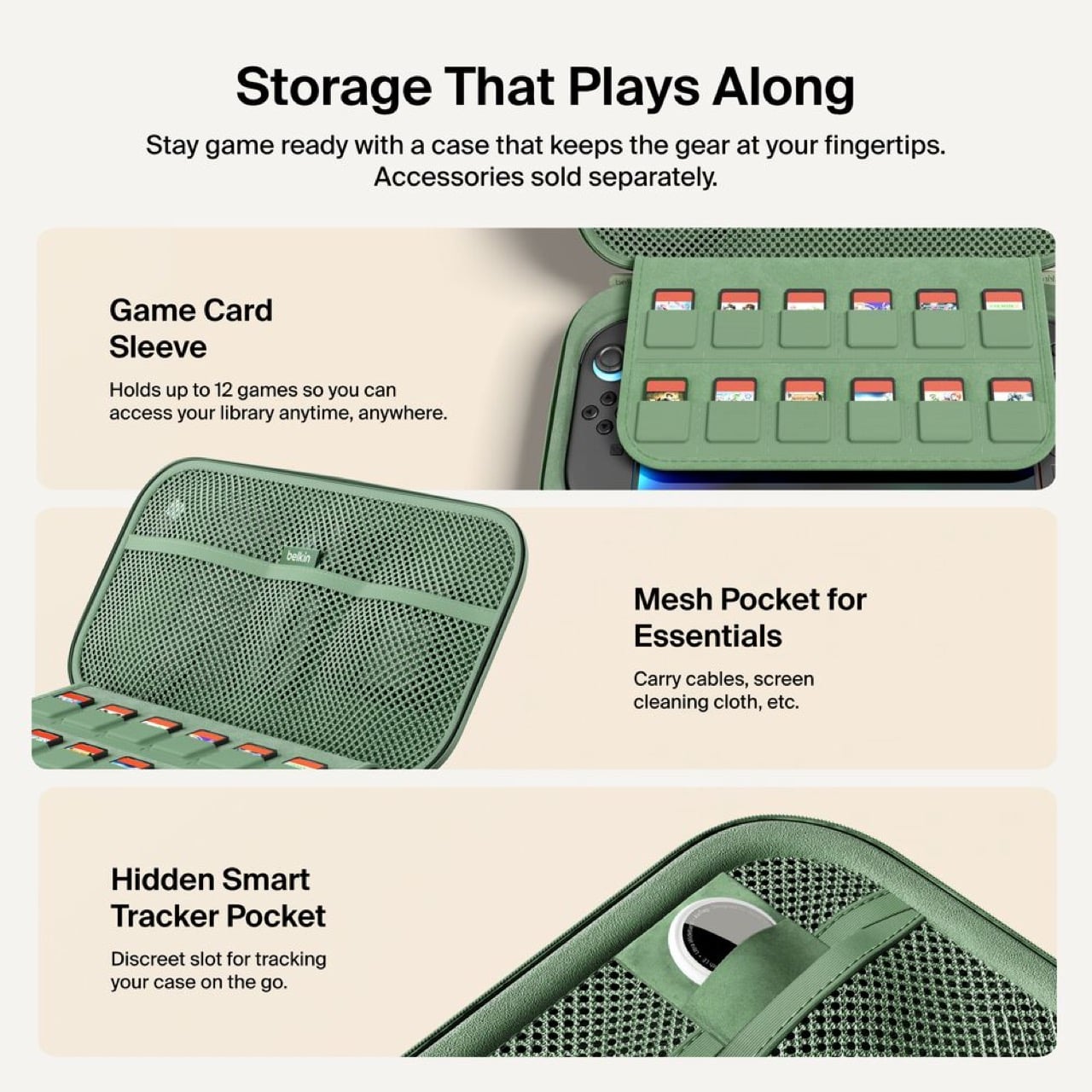

Twelve individual game cartridge slots sit under a flip-down cover, each one molded to prevent rattling. A mesh pocket handles cables and microfiber cloths. Belkin included a hidden compartment sized exactly for an AirTag or Tile tracker. Considering a fully loaded case with console represents about $500 of hardware, location tracking becomes a practical feature rather than a gimmick. People lose things. Expensive things get stolen. Planning for that shows sensible product design.

Three colorways: sage green, black, sandy beige. The green matches Nintendo’s brand aesthetic. Black hides wear better over time. The beige photographs well but might show dirt faster than the darker options.

At $99.99, the Pro costs $30 more than the original’s $69.99. That’s a 43% increase, which sounds aggressive until you itemize the changes. External charging port. Battery display. Integrated stand with direct connection. Better storage. Refined build quality throughout. Spread $30 across those improvements and the math works. Belkin could have pushed $120 easily and still moved units. They left money on the table, frankly.
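The percentage is easy to verify. A quick sketch using the two list prices quoted above (the helper name is just for illustration):

```python
def percent_increase(old_price: float, new_price: float) -> float:
    """Relative price increase, expressed as a percentage of the old price."""
    return (new_price - old_price) / old_price * 100


ORIGINAL_PRICE = 69.99  # original Charging Case
PRO_PRICE = 99.99       # Charging Case Pro

increase = percent_increase(ORIGINAL_PRICE, PRO_PRICE)
print(f"${PRO_PRICE - ORIGINAL_PRICE:.2f} more, a {increase:.0f}% increase")
# prints "$30.00 more, a 43% increase"
```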

This reads like version 2.0 engineering instead of the lazy incremental updates most companies ship. Belkin rebuilt the internal layout, redesigned the battery for dual purpose use, and solved actual user complaints instead of adding RGB lighting or whatever. Most “Pro” accessories just mean “black version, higher price.” This one actually earns the name.

Own the original and it works fine? Skip this. Shopping for your first Switch 2 charging solution or fed up with your current setup? Start here. It’s up on Belkin’s site now, probably hitting Amazon within a week because that’s how 2026 works.

The post Belkin’s New Switch 2 Docking Case Has a 10,000mAh Battery That Lets You Charge While Shut first appeared on Yanko Design.