AI chatbots haven't come close to replacing teens' social media habits, but they are playing a significant role in their online lives. Nearly one-third of US teens report using AI chatbots daily or more often, according to a new report from Pew Research.

The report is the first from Pew to specifically examine how often teens use AI overall, and it was published alongside Pew's latest research on teens' social media use. It's based on an online survey of 1,458 US teens polled between September 25 and October 9, 2025. According to Pew, the survey was "weighted to be representative of U.S. teens ages 13 to 17 who live with their parents by age, gender, race and ethnicity, household income, and other categories."

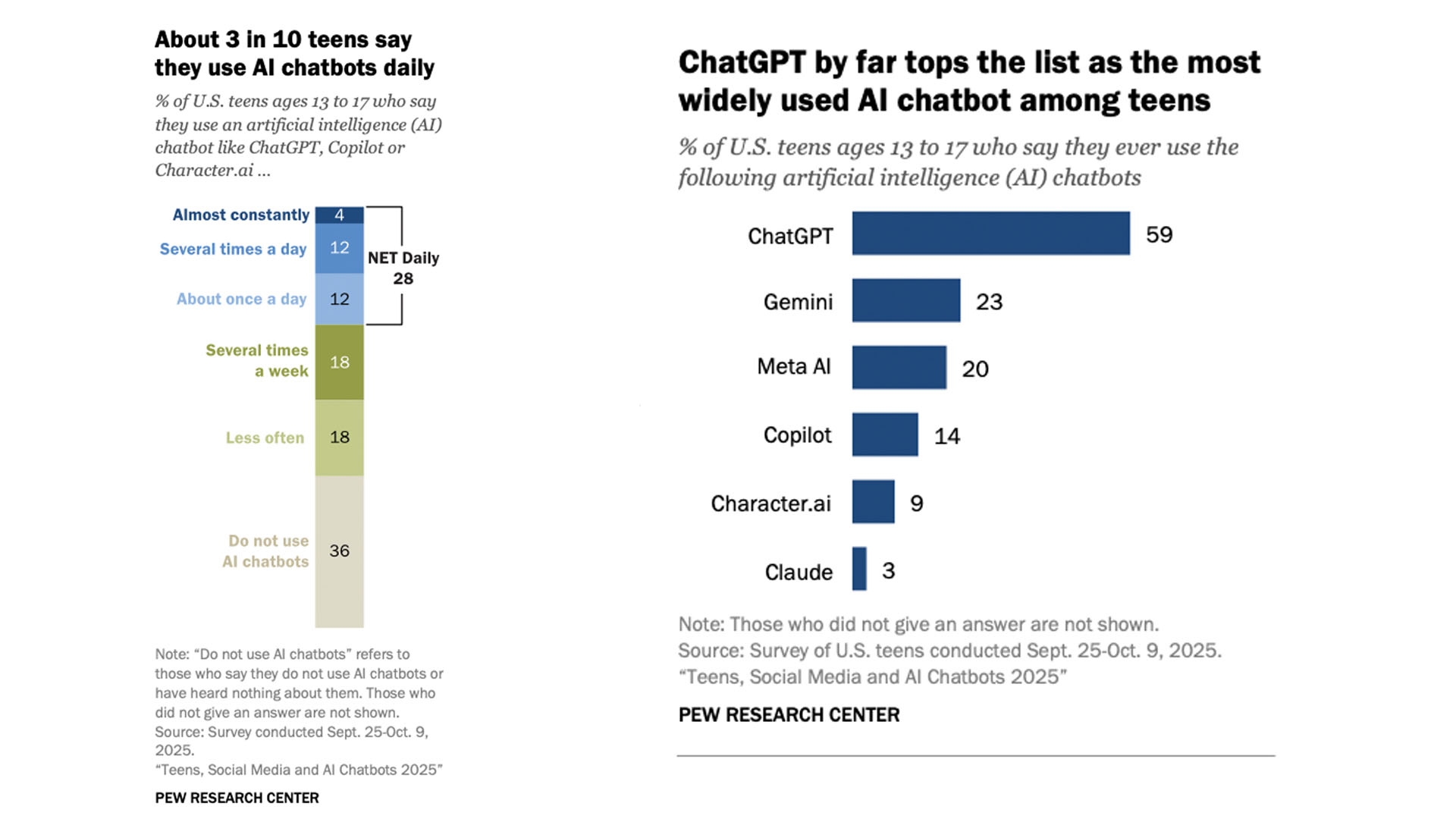

According to Pew, 48 percent of teens use AI chatbots "several times a week" or more often, with 12 percent reporting that they use them "several times a day" and 4 percent saying they use the tools "almost constantly." That's far fewer than the 21 percent of teens who report almost constant use of TikTok and the 17 percent who say the same about YouTube. But those numbers are still significant considering how much newer these services are than mainstream social media apps.

The report also offers some insight into which AI companies' chatbots are most used among teens. OpenAI's ChatGPT came out ahead by far, with 59 percent of teens saying they had used the service, followed by Google's Gemini at 23 percent and Meta AI at 20 percent. Just 14 percent of teens said they had ever used Microsoft Copilot, and 9 percent and 3 percent reported using Character AI and Anthropic's Claude, respectively.

Pew's research comes amid growing scrutiny of how AI companies handle younger users. Both OpenAI and Character AI are currently facing wrongful death lawsuits from the parents of teens who died by suicide. In both cases, the parents allege that their child's interactions with a chatbot played a role in their death. (Character AI briefly banned teens from its service before introducing a more limited format for younger users.) Other companies, including Alphabet and Meta, are being probed by the FTC over their safety policies for younger users.

Interestingly, the report also indicates there has been little change in US teens' social media use. Pew, which has regularly polled teens about how they use social media, notes that teens' daily use of these platforms "remains relatively stable" compared with recent years. YouTube is still the most widely used platform, reaching 92 percent of teens, followed by TikTok at 69 percent, Instagram at 63 percent and Snapchat at 55 percent. Of the major apps the report surveyed, WhatsApp is the only service to see significant change in recent years, with 24 percent of teens now reporting they use the messaging app, compared with 17 percent in 2022.