Not all robots have to look, well, robotic. A growing number of robots are inspired by real-life creatures (sometimes even humans, but that's a whole other discussion); these are the so-called bio-inspired bots. The latest winner of the Natural Robotics Contest is inspired by a pretty unlikely animal: the pangolin, an insect-eating mammal.

Designer: Dorothy and Dr. Robert Siddall

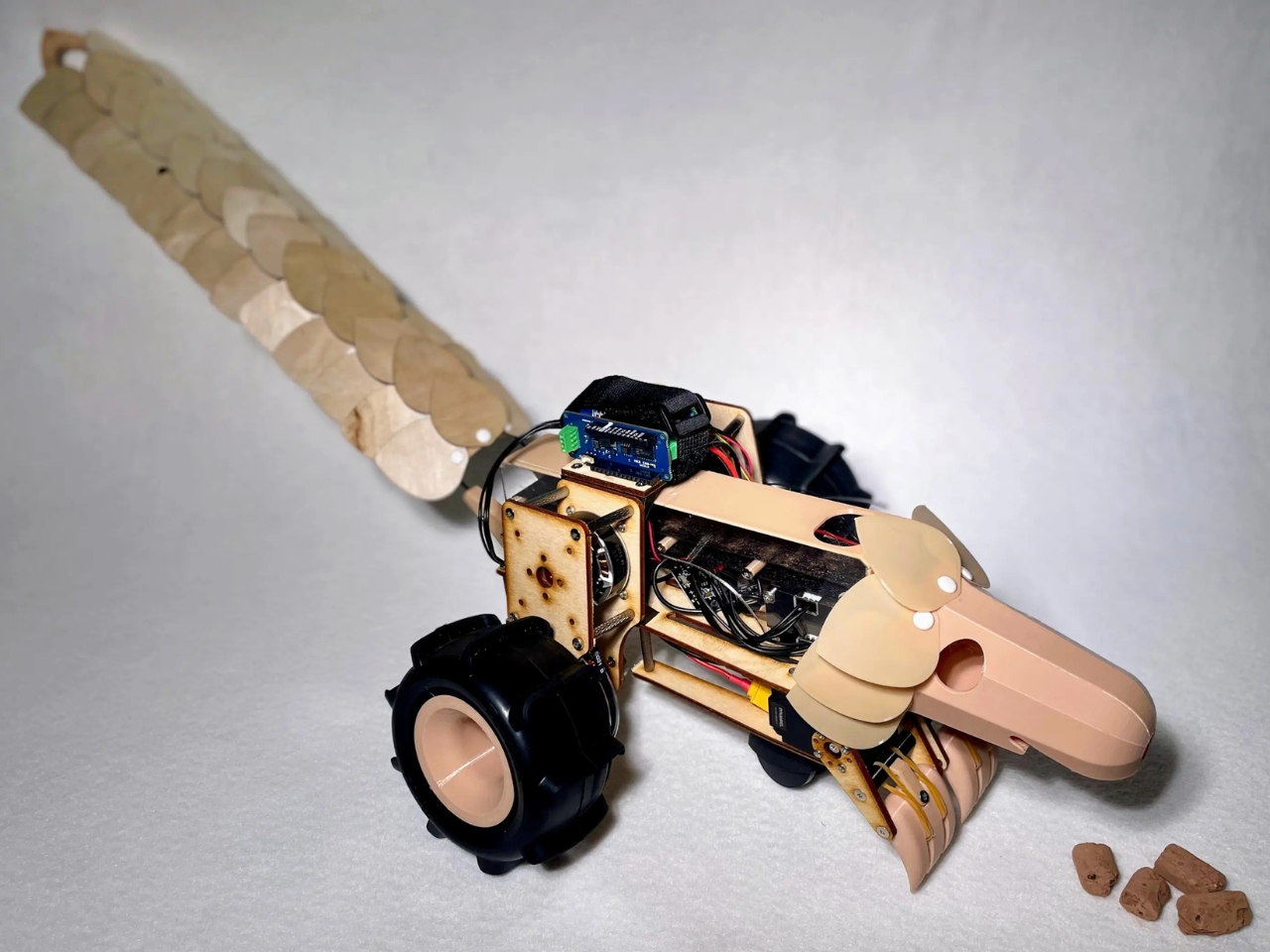

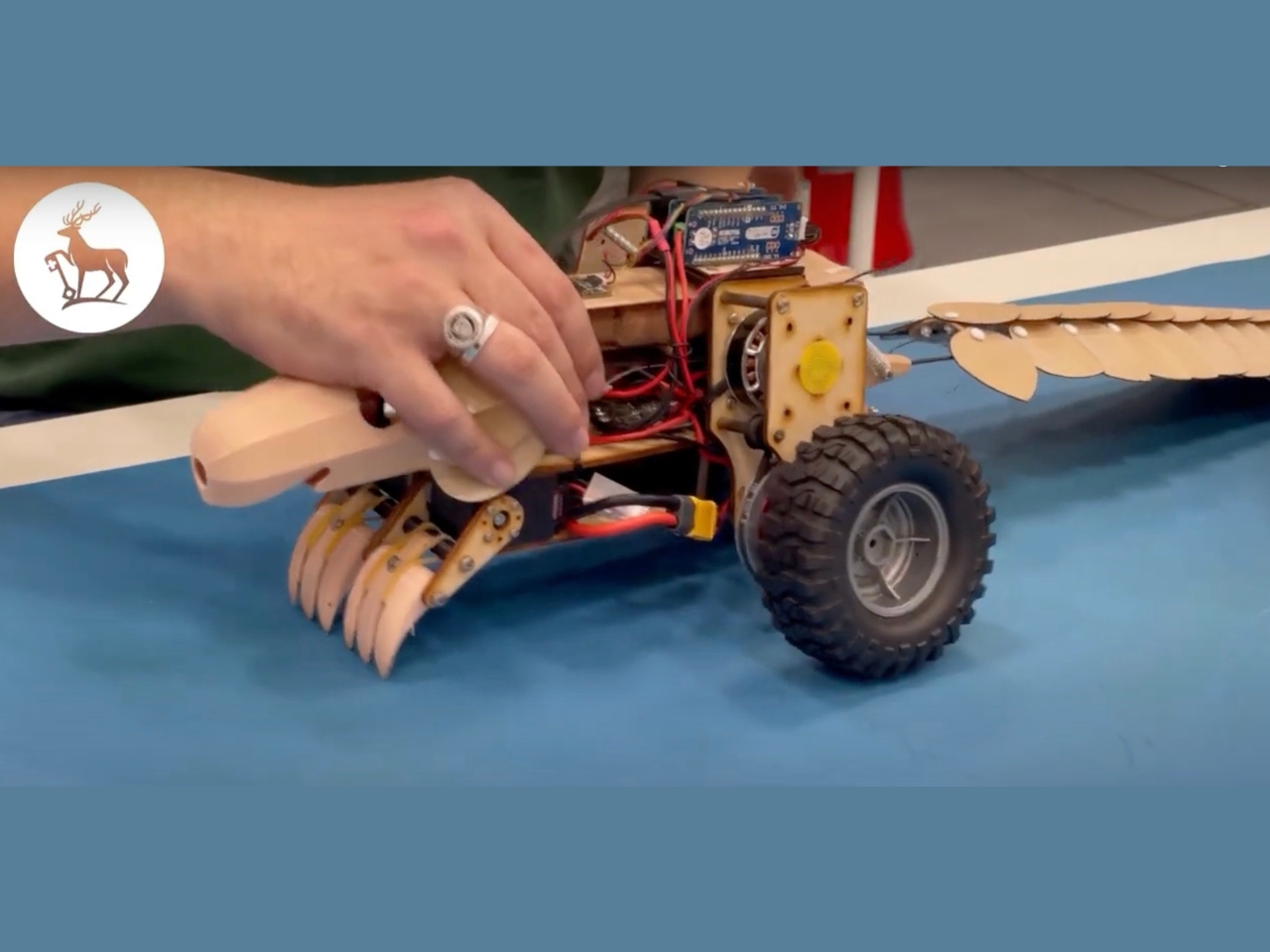

A high school student from California named Dorothy designed a robot whose main goal is to dig holes and plant seeds. Since pangolins are natural diggers, why not use them as the model for a robot that can help repopulate areas with trees? The partner research institute turned the winning concept into an actual prototype called the Plantolin. More than just looking like a pangolin, it borrows features from the mammal and incorporates them into the robot's functions.

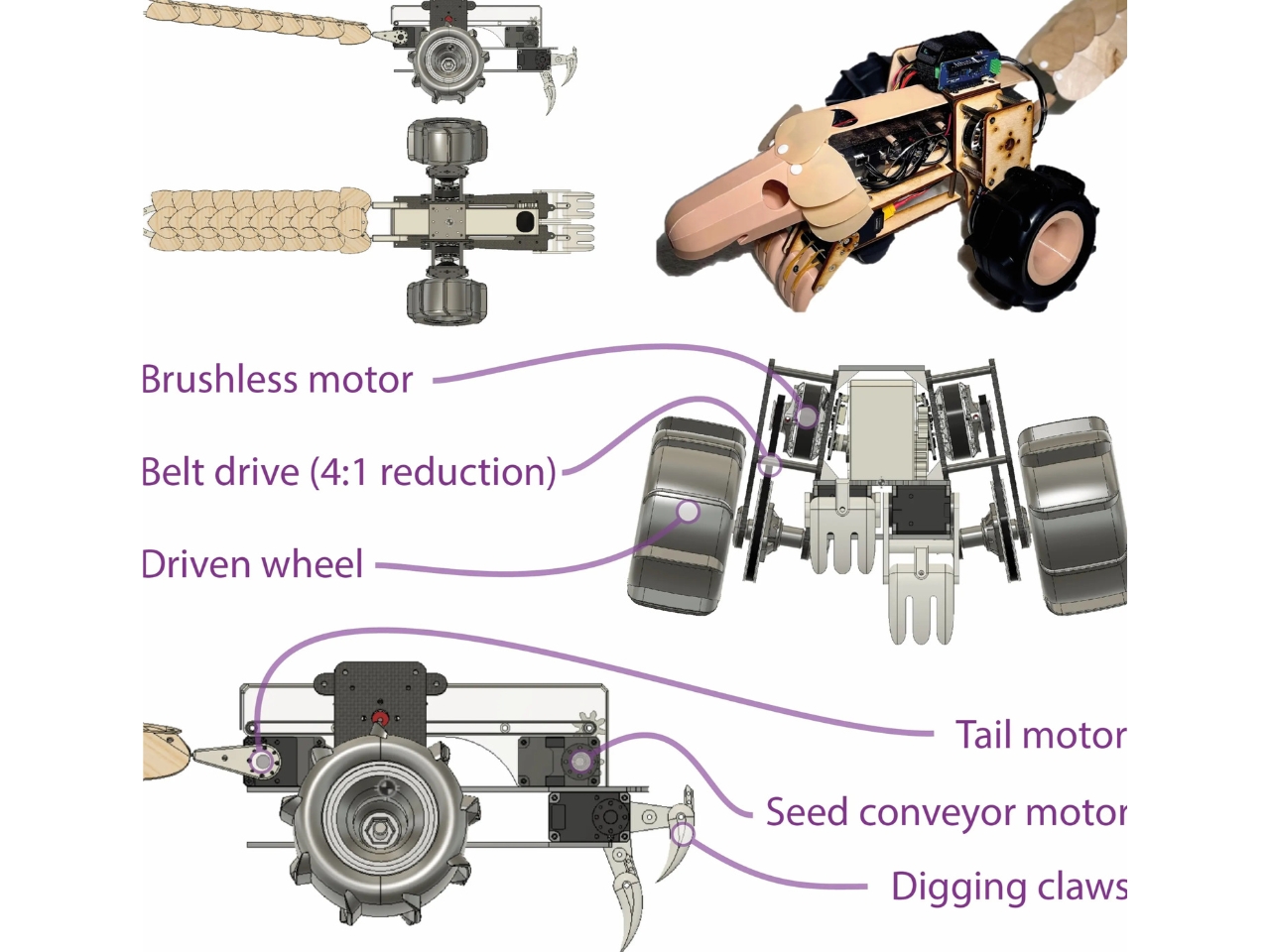

The Plantolin roves around on two wheels and, just like the pangolin, balances on its long, movable tail. Each wheel is driven by an electric quadcopter drone motor. Digging is done by its two front legs, while the tail tilts down to provide leverage once digging starts. When the hole is ready, the robot drives over it and "poops" out a yew tree seed bomb, a nugget containing both seeds and soil.

It's a pretty interesting way to repopulate a space with trees. It would probably be faster than planting by hand and, once programmed properly, would need minimal human intervention, so there's no need to train actual pangolins for the job.

The post Pangolin-inspired robot can dig and “poop” out seeds to plant trees first appeared on Yanko Design.