Once upon a Super Bowl Sunday in 1984, Apple unveiled an ad that changed the world. Directed by Ridley Scott, the commercial broke new ground in marketing. As the heroine dashed through a drab, Orwellian world and hurled a hammer to shatter the oppressive image on the screen, viewers were left breathless. The message was clear: the Apple Macintosh was here to break the chains of conformity and ignite the flames of innovation. Forty years later, where is Apple now?

Designer: Apple

Designer: Seiko

Fast-forward to today, and the legacy of that revolutionary spirit lives on, not just in Apple’s computers but right on our wrists. Enter the Apple Watch Ultra, a device embodying the essence of forward-thinking technology. But before we dive into the modern marvel, let’s take a nostalgic trip back to another wrist-worn wonder from the same era: the Seiko UC-2000.

The Seiko UC-2000: A Wrist Revolution in 1984

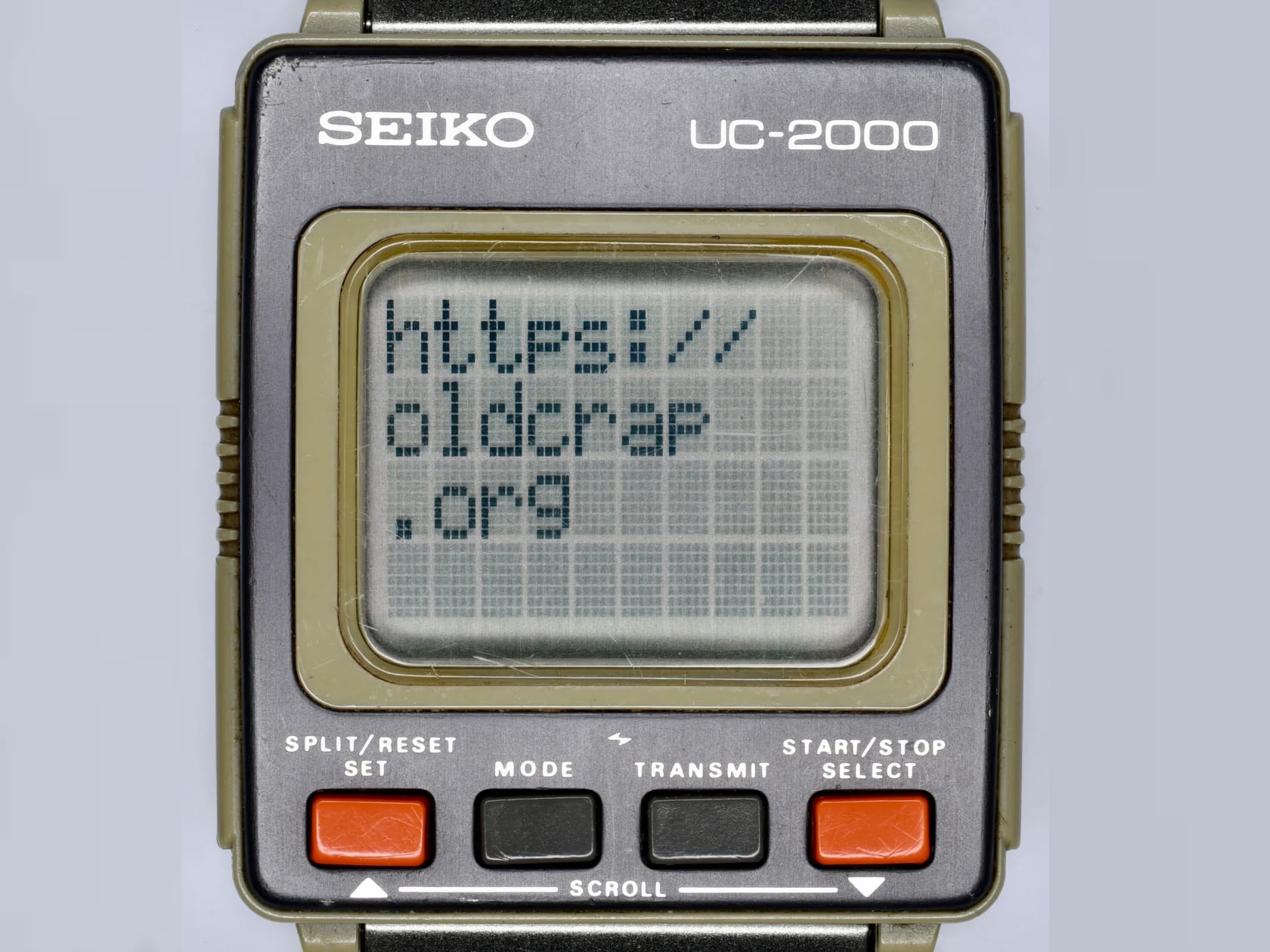

In that same iconic year, Seiko wasn’t content with just making elegant timepieces. The company dared to dream of a wristwatch that could do much more. Introducing the Seiko UC-2000, a watch that doubled as a personal computer. This gadget had a rectangular LCD screen and a row of buttons, and it could be paired with a keyboard docking station to perform tasks that were nothing short of magical for its time.

Seiko UC-2000

Imagine programming in BASIC, storing contacts, and managing your schedule, all from a device strapped to your wrist. The UC-2000 was ahead of its time, a pioneering effort in wearable tech, and its distinctive orange buttons gave it a look all its own.

Seiko UC-2000 + Keyboard dock

Echoes of the Past in the Apple Watch Ultra

Now, let’s warp back to the present and unwrap the magic of the Apple Watch Ultra. Sleek, sophisticated, and packed with features, it’s everything the Seiko UC-2000 aimed to be and more. The Apple Watch Ultra sports a vibrant OLED display, advanced health monitoring tools, navigation aids, and seamless connectivity with the Apple ecosystem. It’s like having a mini-supercomputer on your wrist.

Apple Watch Ultra

But here’s the kicker: look closely at the design. Notice anything familiar? The Apple Watch Ultra features an orange ring and a striking orange Action button on the left side. This design choice echoes the legacy of wearable tech pioneers like the Seiko UC-2000.

Source: oldcrap.org Seiko UC-2000

Even more striking is the resemblance in the bracelet design. Apple’s Link Bracelet closely mirrors the Seiko UC-2000’s band, with its sleek, segmented links and metallic finish. Both bracelets emphasize a modern, streamlined look, and four decades later, the spirit of those early designs continues to influence new generations of technology.

Source: oldcrap.org Seiko UC-2000

Link Bracelet for Apple Watch Ultra 49mm Metal Band

The shapes of the watches themselves bear a remarkable resemblance. Both the Seiko UC-2000 and the Apple Watch Ultra feature a rectangular face with rounded edges, a design that serves both aesthetics and function: it provides a broad, clear display area while keeping a comfortable fit on the wrist. With its rounded corners and sleek profile, the Apple Watch Ultra seems like a modern interpretation of the Seiko UC-2000’s design ethos. This continuity shows how certain principles of form and function stay relevant across decades.

Apple Watch Ultra 2

Seiko UC-2000

Bridging Four Decades of Innovation

The journey from Apple’s iconic 1984 ad to the cutting-edge Apple Watch Ultra is a tale of relentless innovation. Apple’s commercial heralded a new era of computing. Similarly, the Seiko UC-2000 took a bold step into the future of wearable technology.

Source: Reddit

Today, as we glance at our Apple Watch Ultras, it’s fascinating to see how far we’ve come. From the pioneering days of the Seiko UC-2000, with its orange buttons and chunky design, to the sleek, multifunctional marvels on our wrists now, the spirit of innovation and rebellion against the ordinary has always been the driving force. Along the way, the Apple Watch has evolved into a market leader, becoming the best-selling watch in the world.

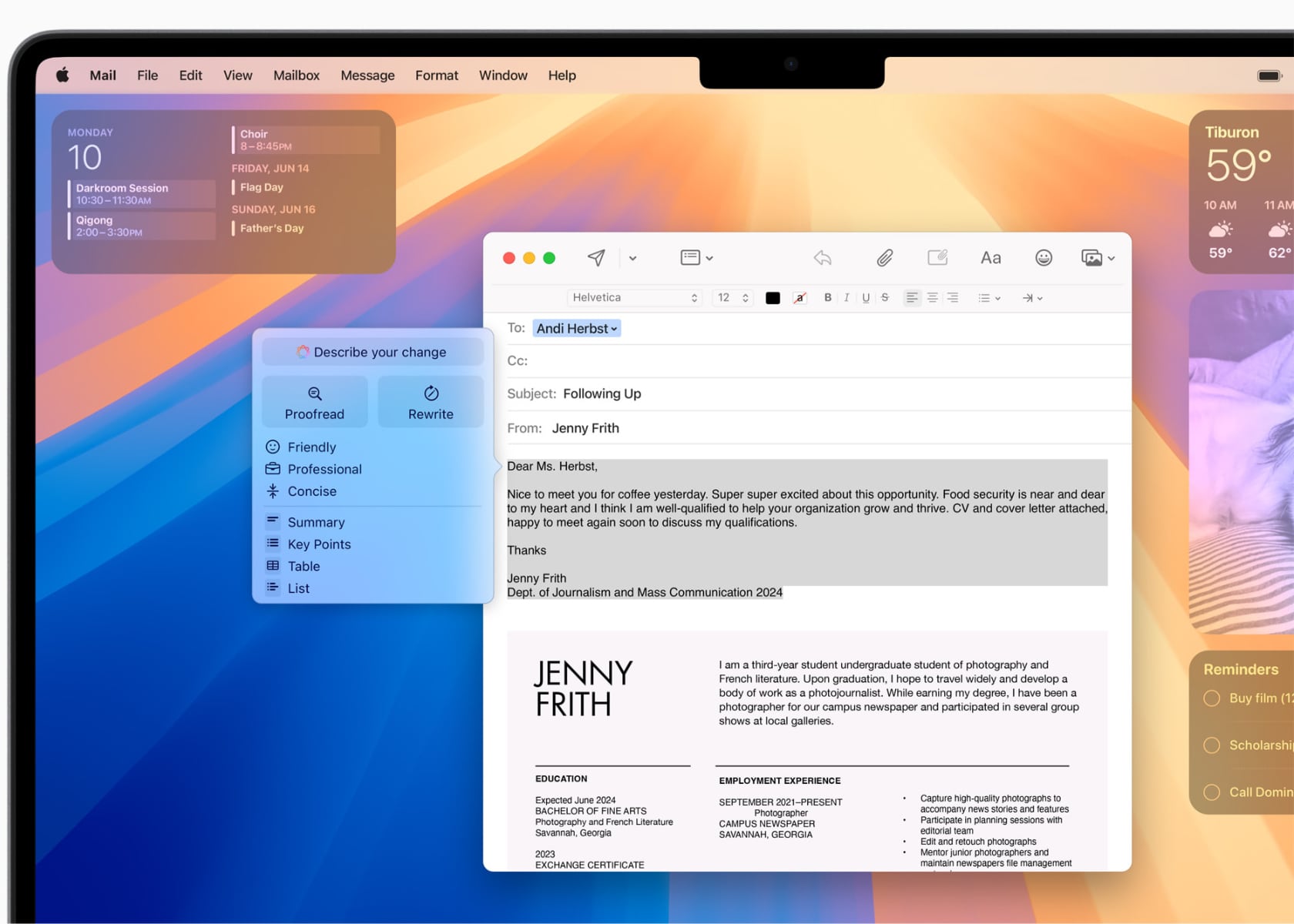

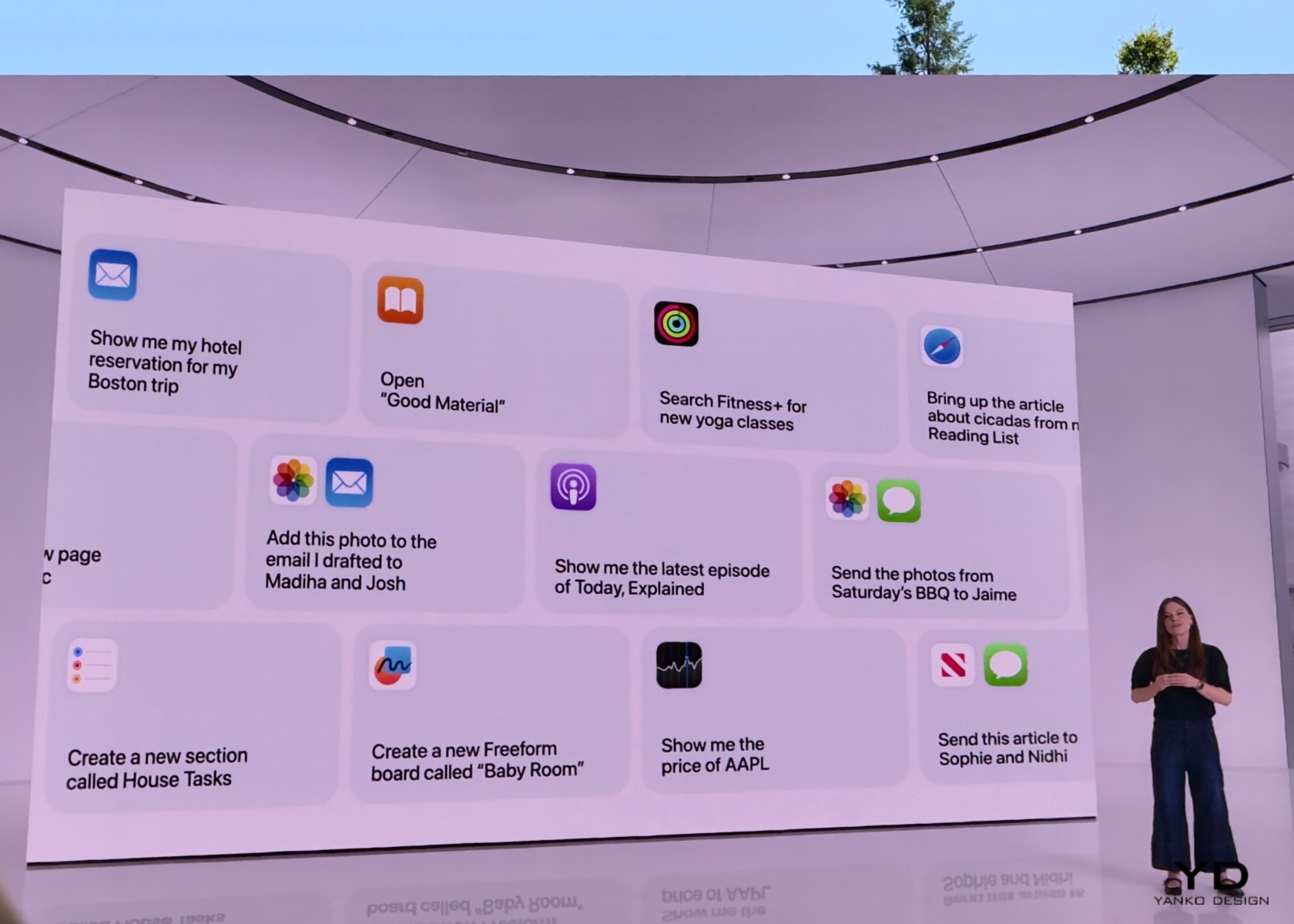

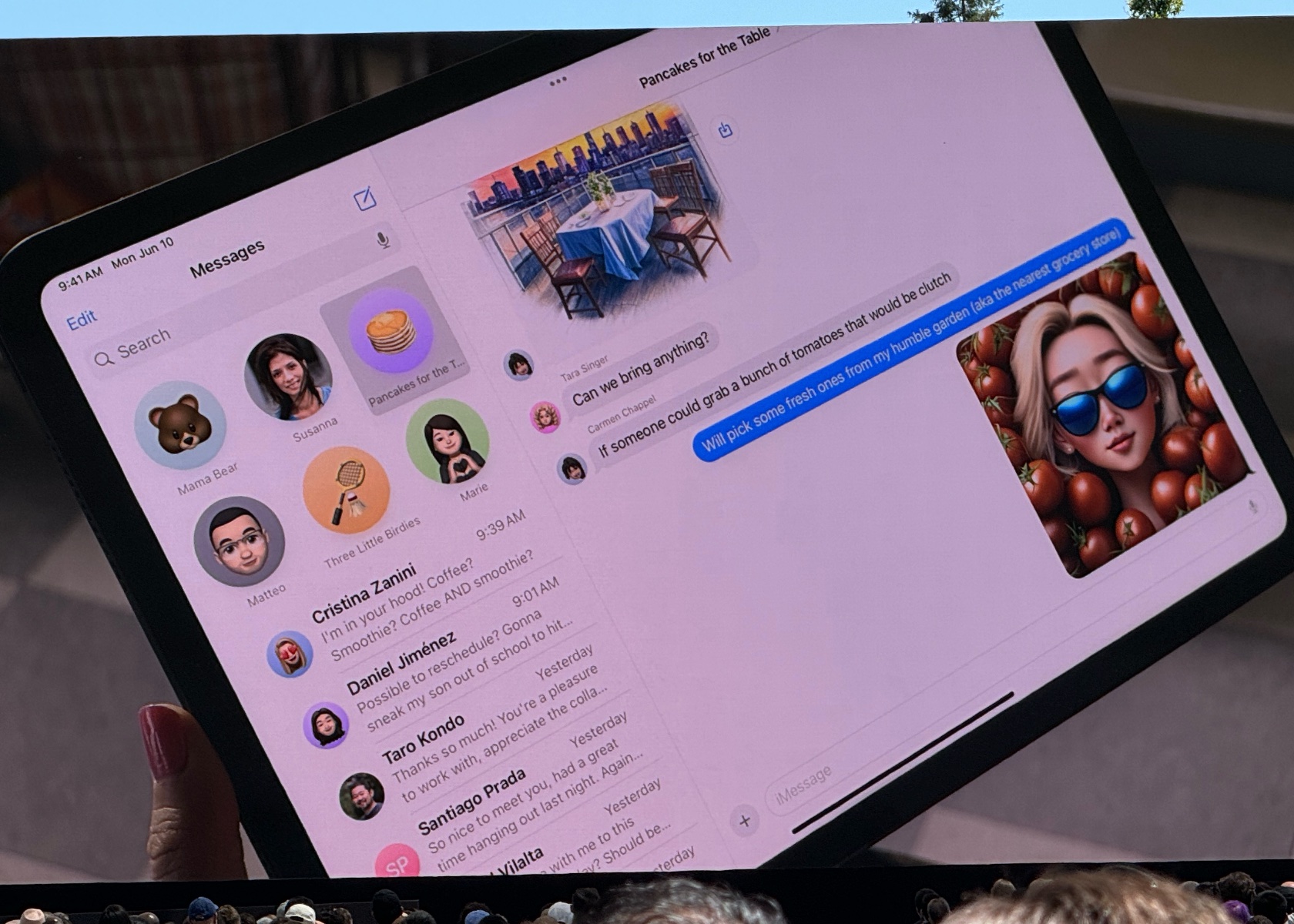

This journey of innovation is mirrored in Apple’s other groundbreaking products. The iPhone and iPad redefined how we communicate and consume media, and the Vision Pro promises to transform augmented reality. Meanwhile, the MacBook and iMac continue to set standards in computing performance and design, illustrating Apple’s dedication to pushing the boundaries of technology across its entire product line.

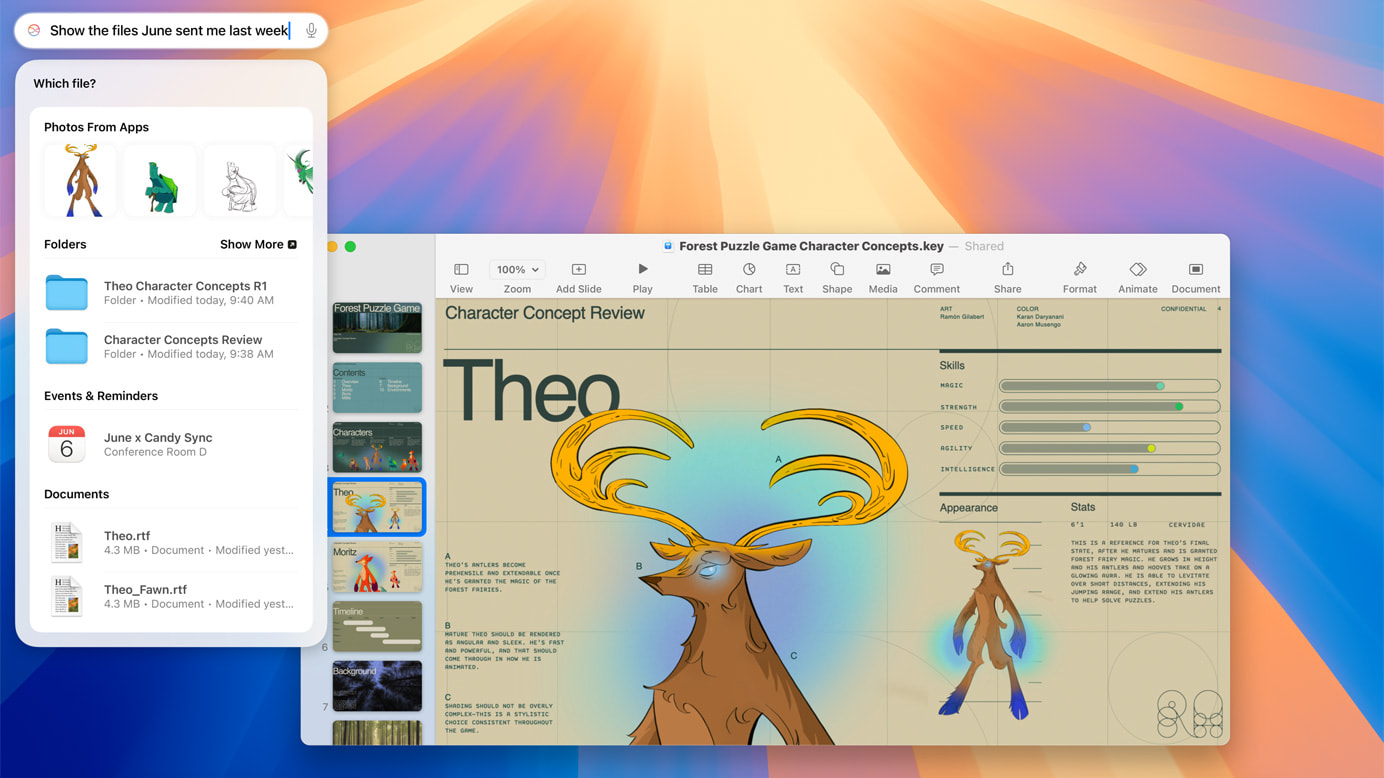

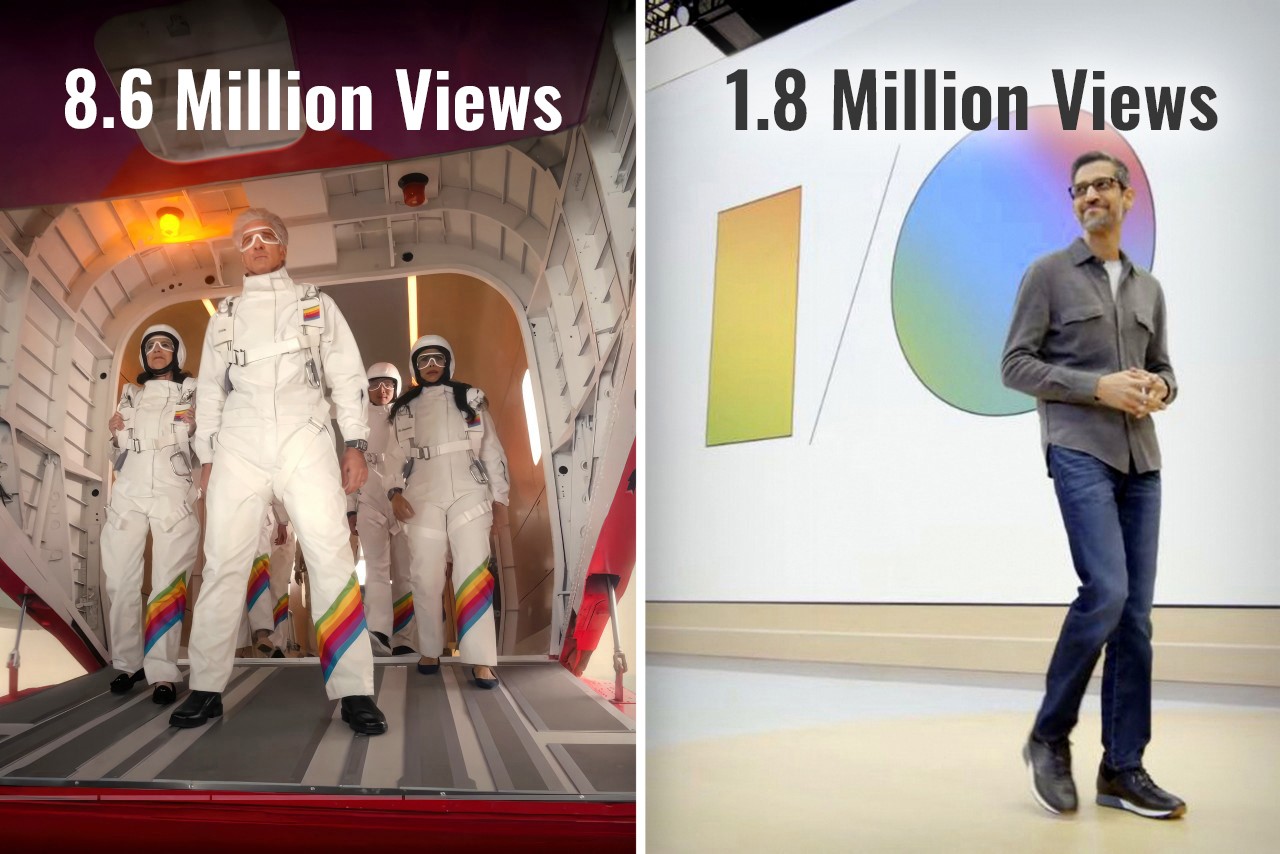

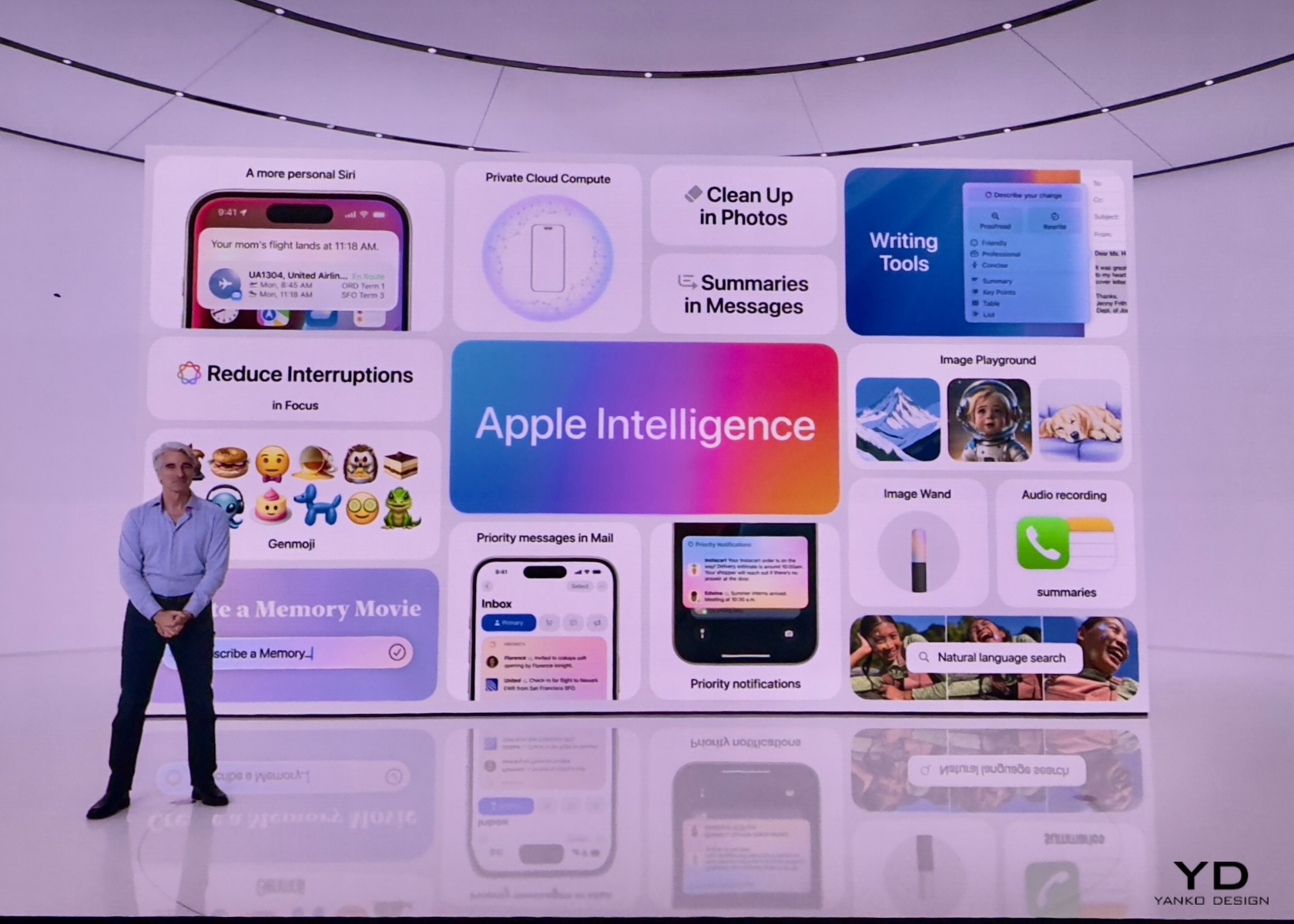

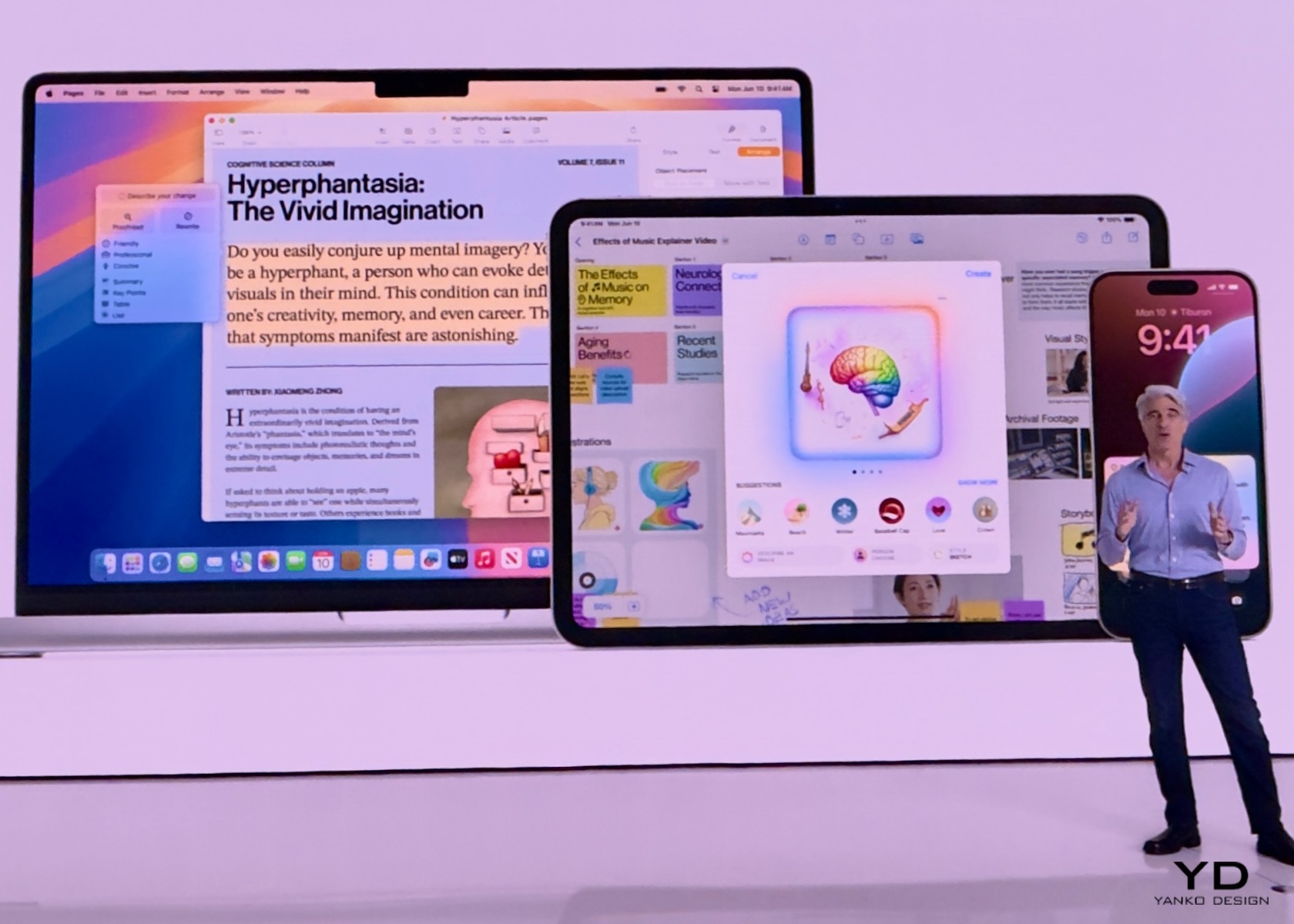

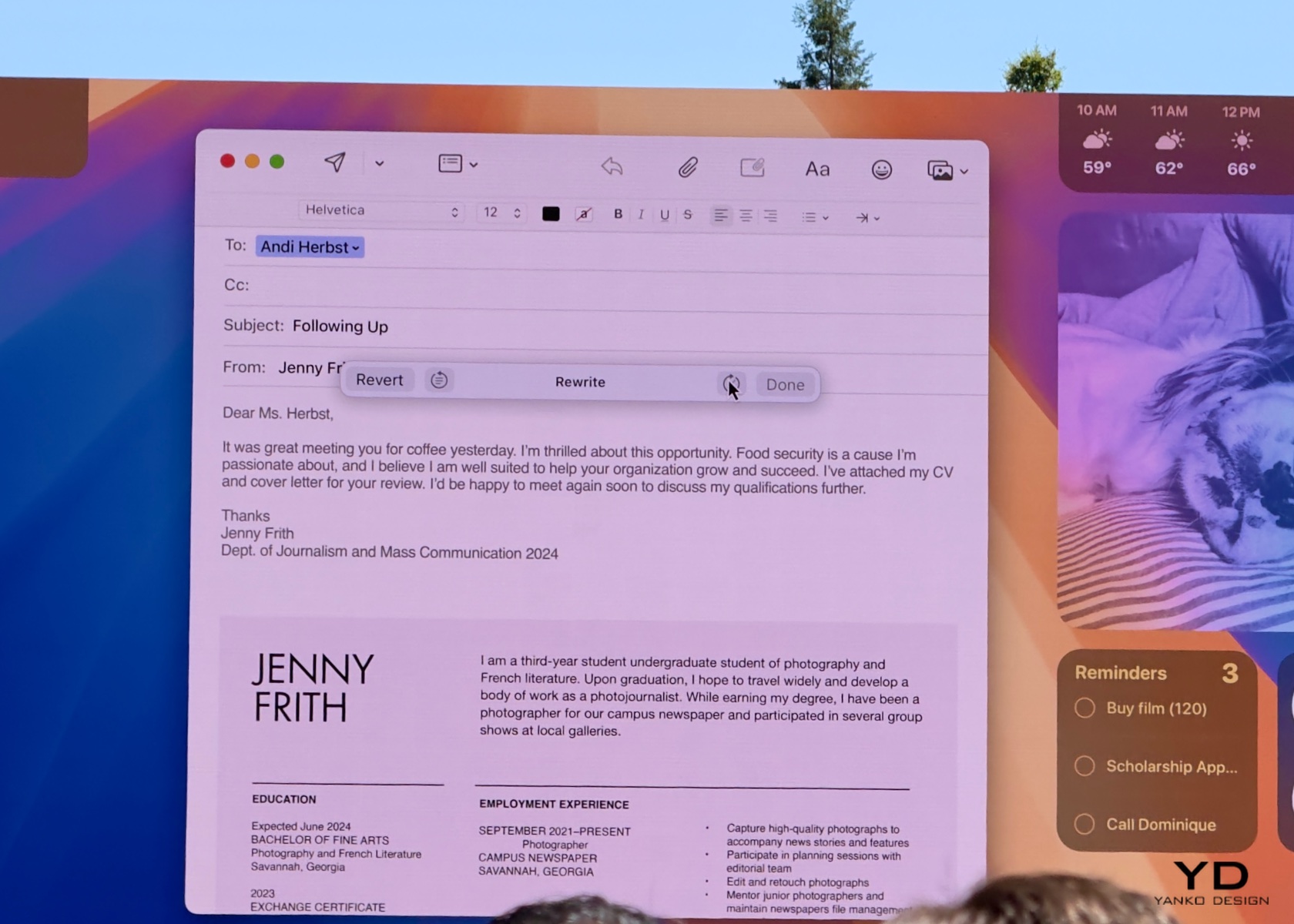

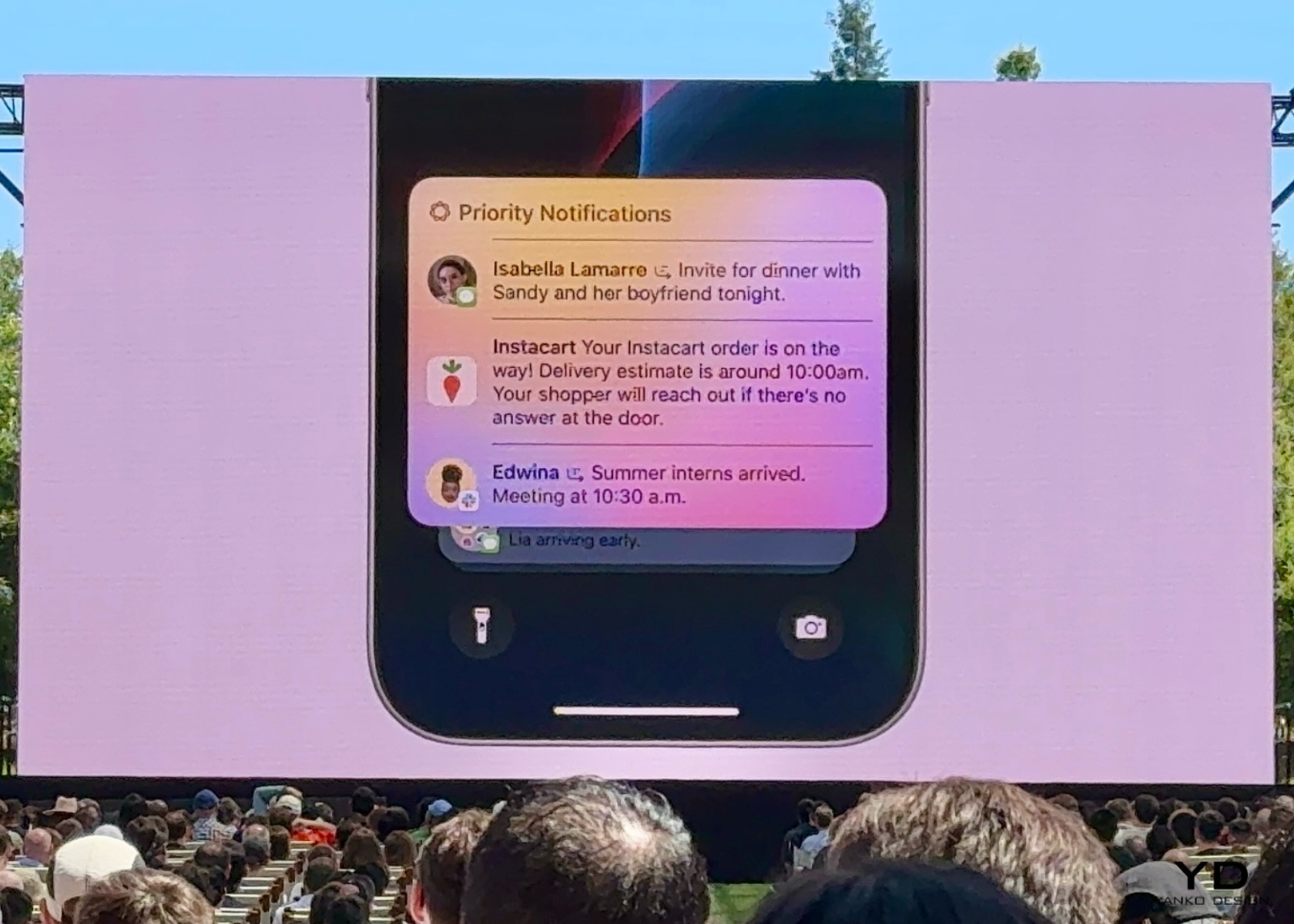

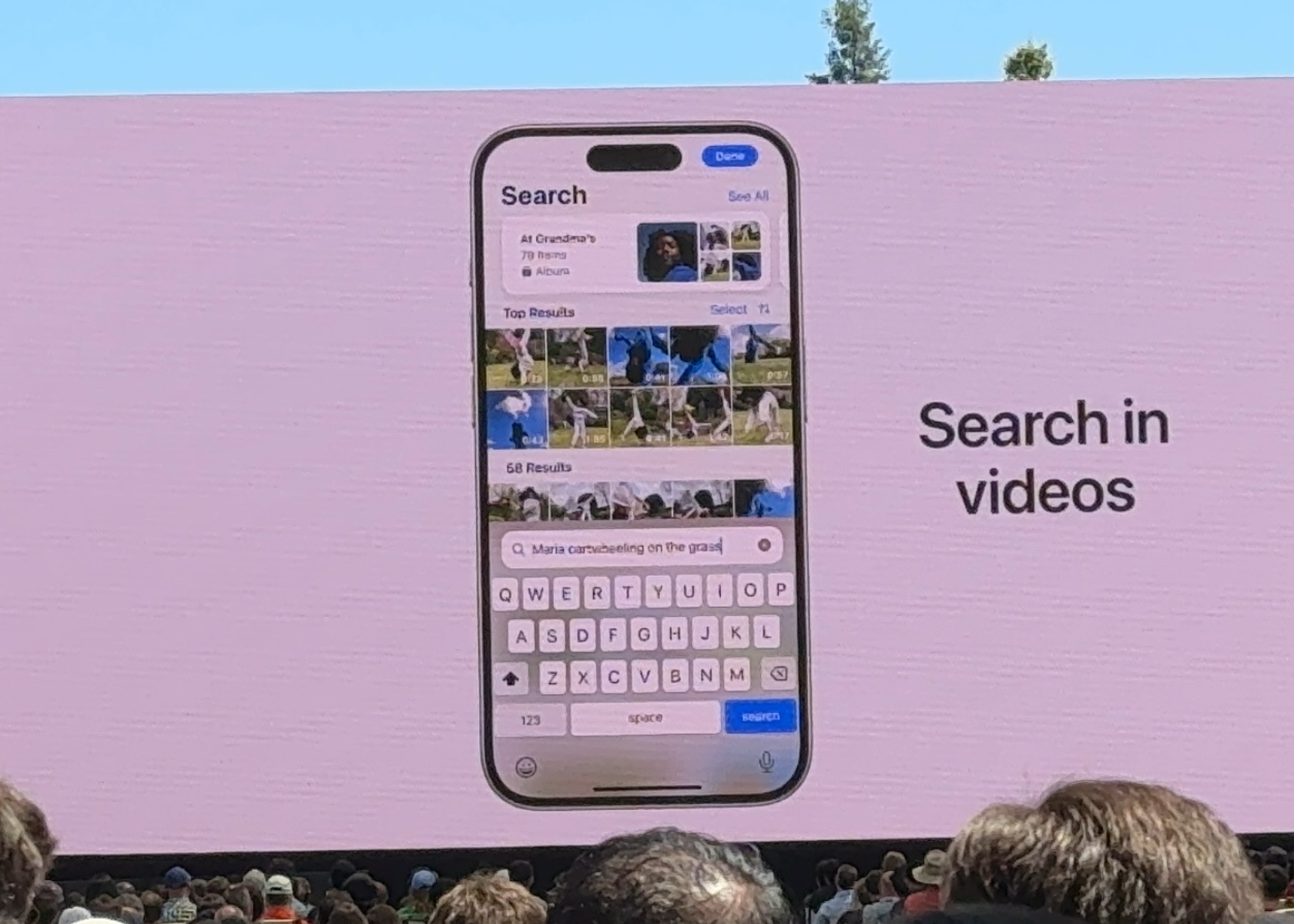

Apple WWDC 2024: A Theatrical Return to Roots

At WWDC 2024, Apple brought back the daring, eye-popping theatricality of its 1984 ad. Craig Federighi, Senior Vice President of Software Engineering, kicked off the Worldwide Developers Conference keynote by parachuting down onto Apple Park, the company’s headquarters. This dramatic entrance hearkened back to Apple’s roots, showcasing its commitment to breaking the mold and captivating its audience.

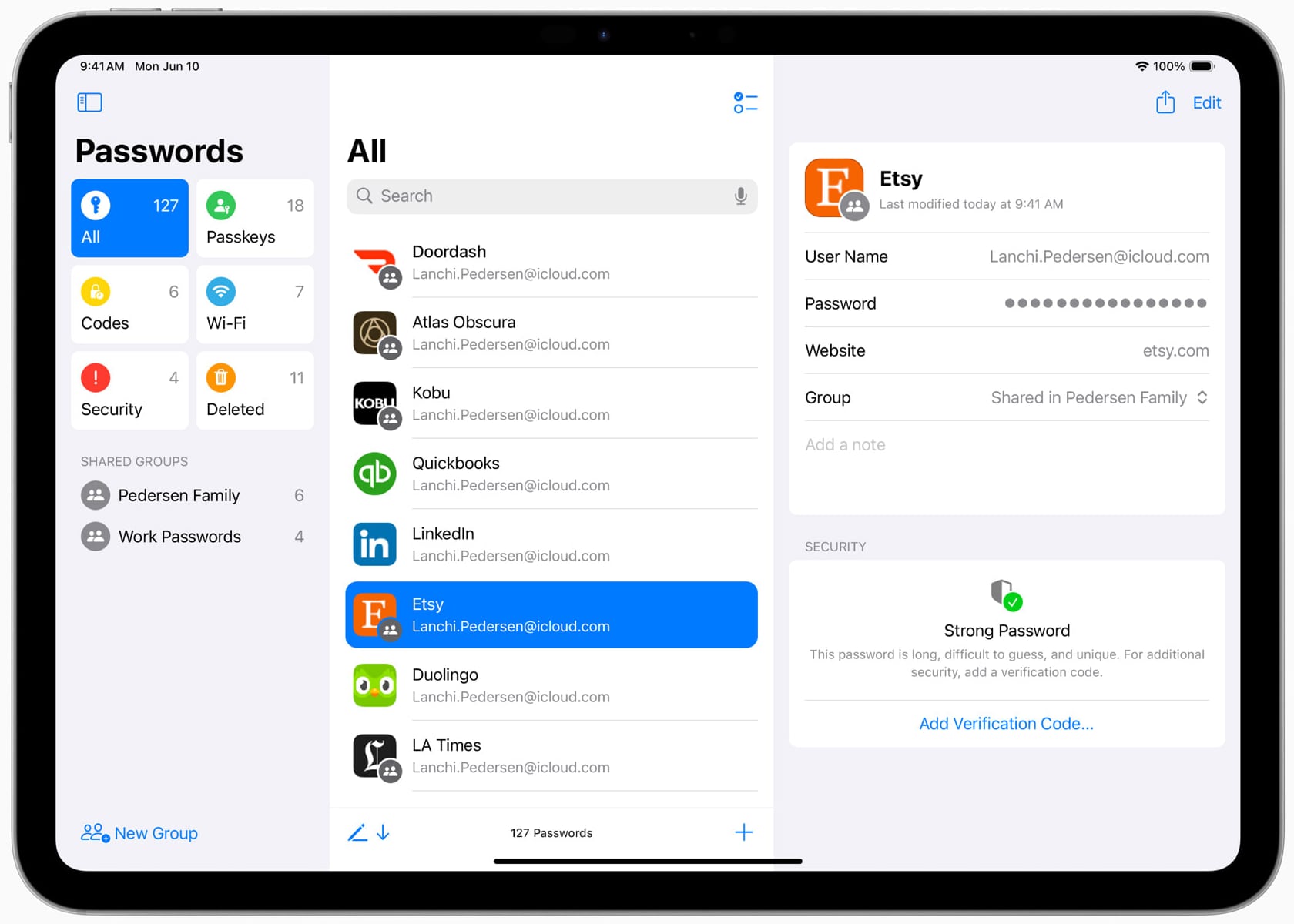

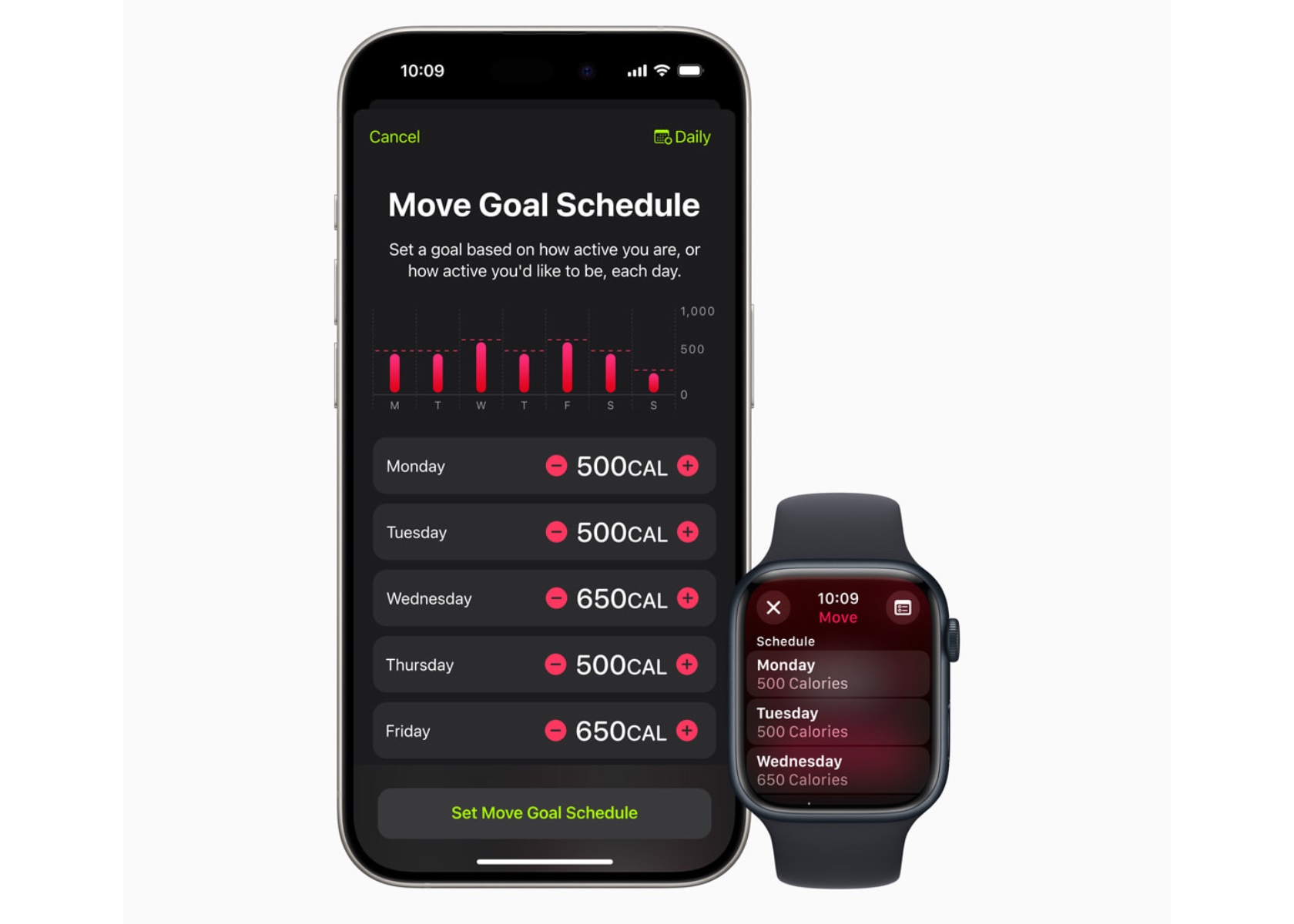

watchOS 11 Public Beta: New Features to Love

watchOS 11 Public Beta

Building on this legacy of innovation, Apple has released the public beta of watchOS 11, packed with features that promise to significantly enhance the user experience. The new Vitals app offers a comprehensive view of your overnight health metrics: wear your watch to sleep, and it monitors heart rate, respiratory rate, wrist temperature, blood oxygen levels, and sleep duration. Vitals establishes a typical range for each metric and notifies you if multiple metrics fall outside it, with context on possible factors like medications or illness. While I was traveling in Japan, I became ill, and the Vitals app flagged several of my readings as out of range. Seeing those metrics laid out was genuinely helpful.

watchOS 11 Vitals

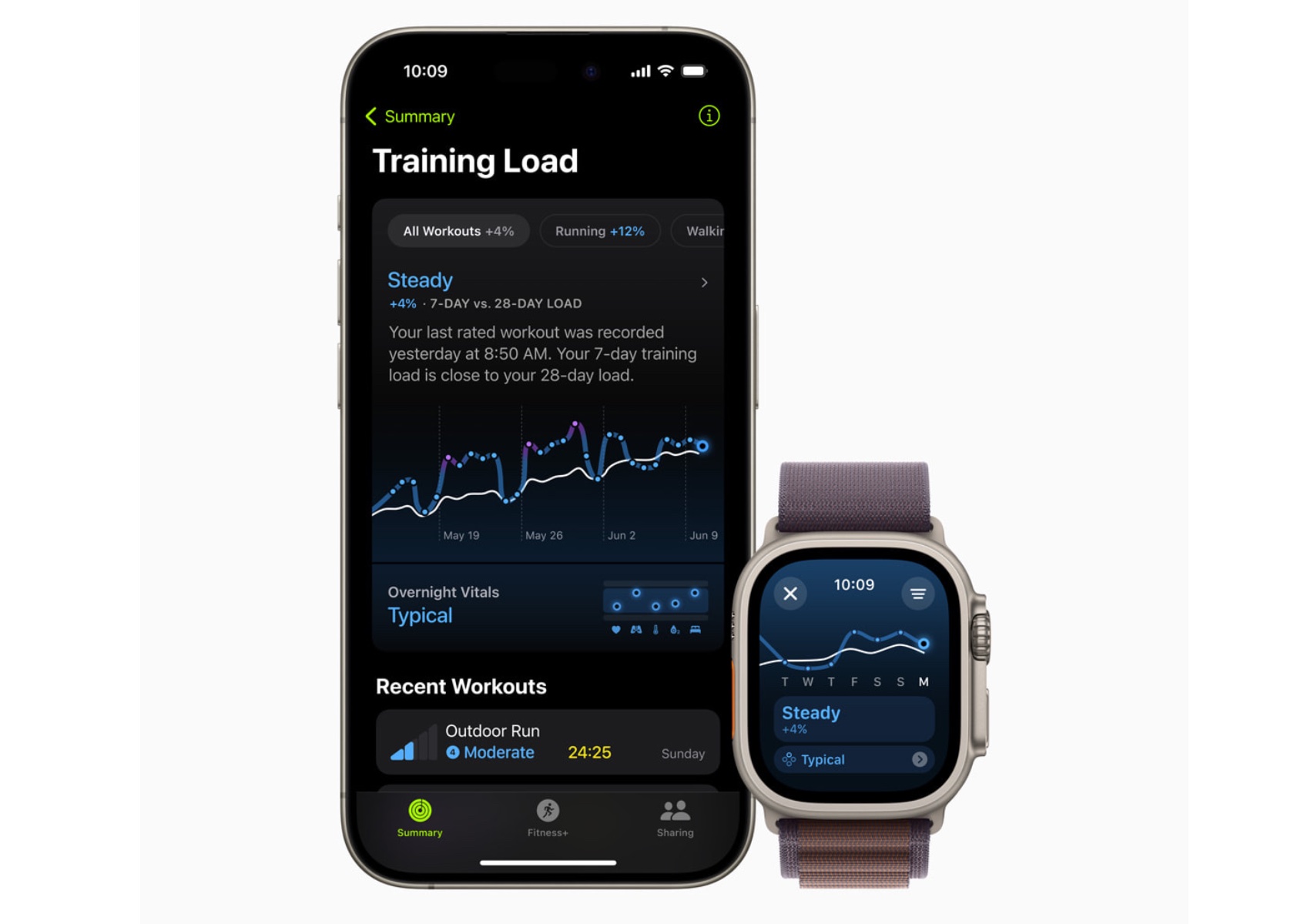

Training Load is another exciting feature, helping athletes see how workout intensity impacts their bodies over time. This tool lets users make informed decisions about their training schedules, especially when preparing for significant events. Enhanced GPS positioning for more workouts and custom workouts for pool swims are also noteworthy additions, providing users with more precise and personalized fitness tracking.

watchOS 11 Training Load

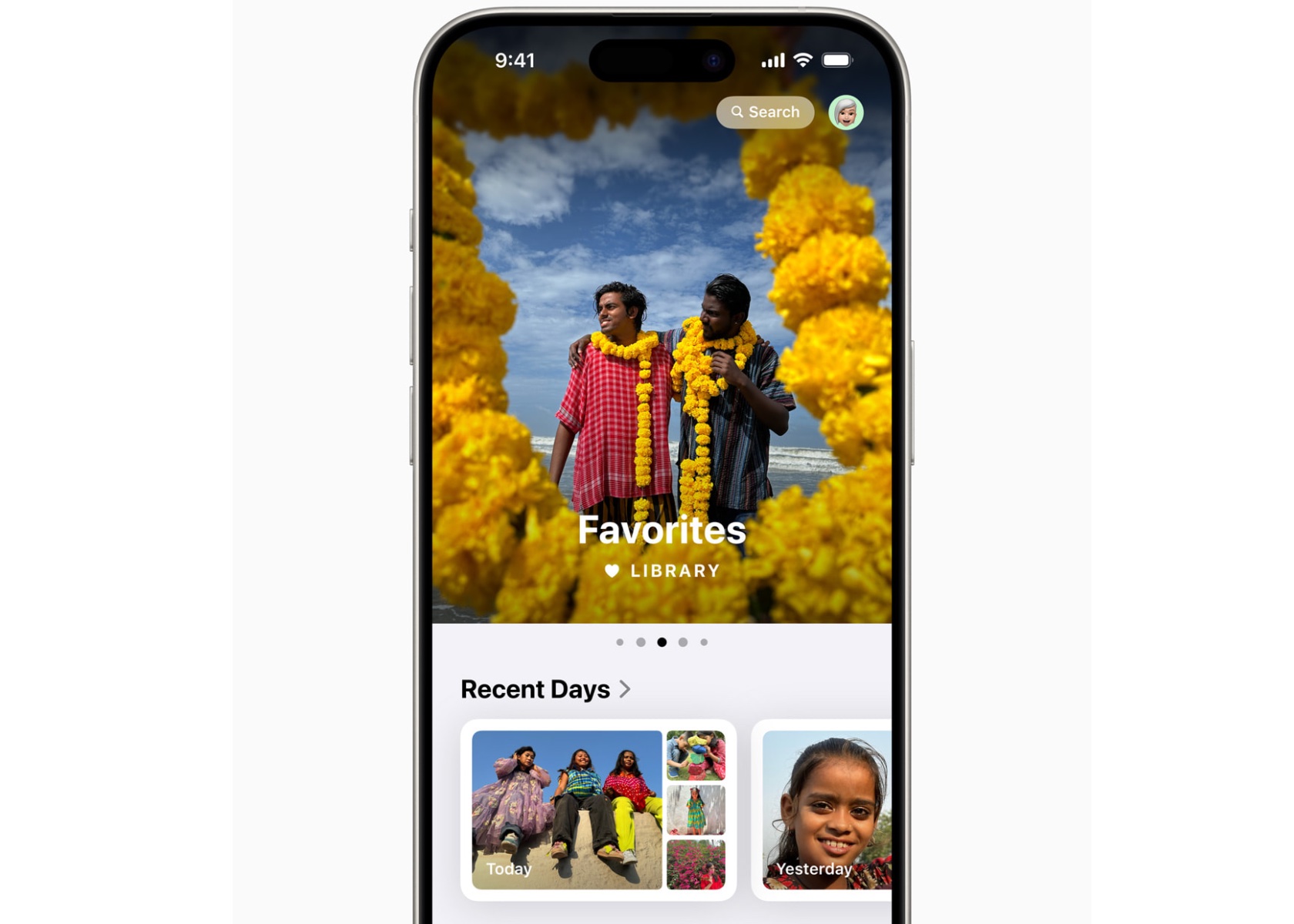

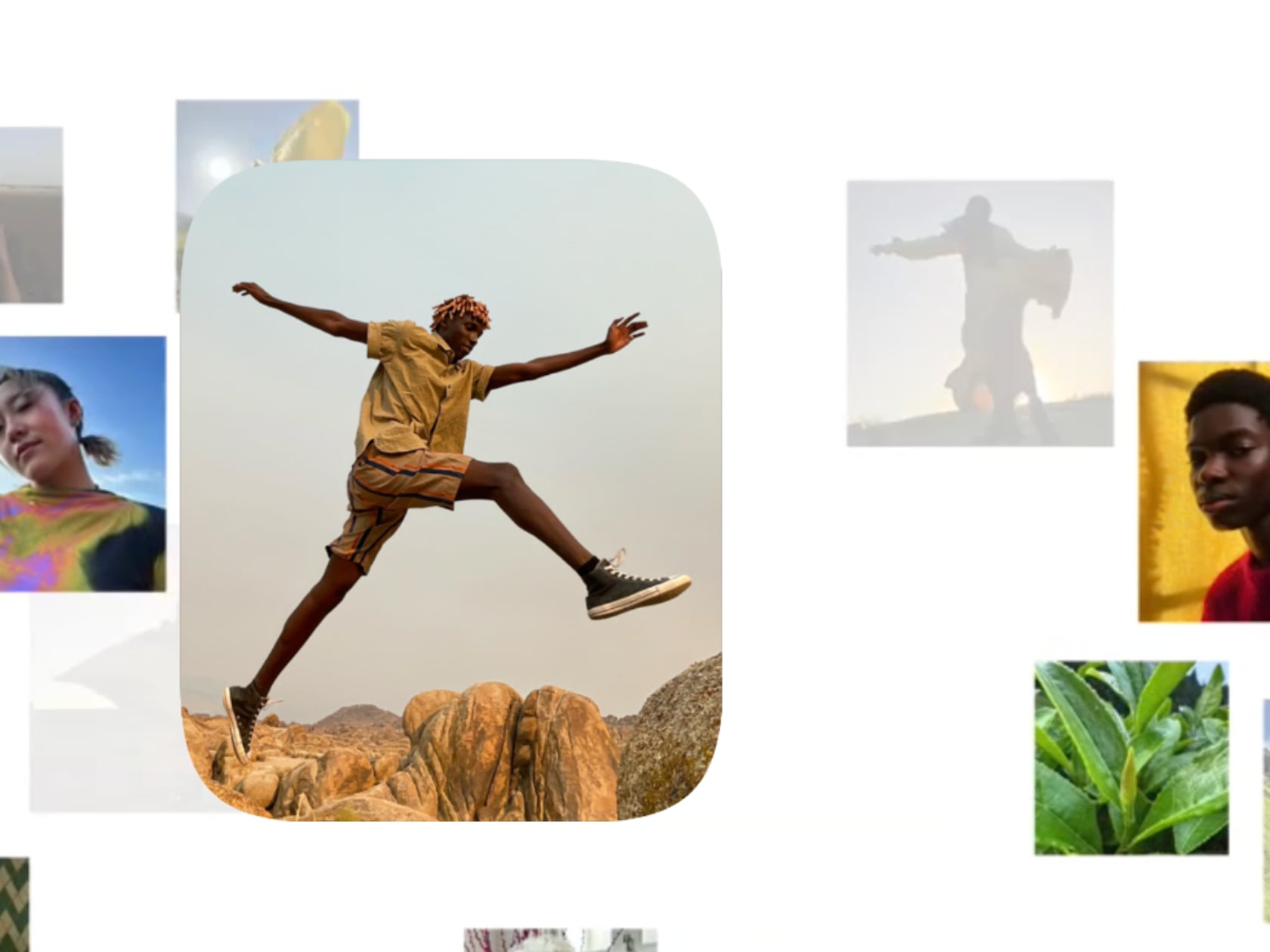

The Photos Face has been redesigned and now uses machine learning to curate the best images from your library for display on your watch. The Cycle Tracking app adds features tailored for pregnancy, including gestational age tracking and health recommendations.

watchOS 11 Photos Face

watchOS 11 Cycle Tracking

Smart Stack has become more intelligent, automatically displaying widgets based on time, location, and other factors. It now supports Live Activities, and new widgets include severe weather alerts, Training Load, and Photos.

watchOS 11 Smart Stack

With the new Check In feature, users can notify friends or loved ones when they arrive at a destination, providing peace of mind. A new effort metric for workouts rounds out the impressive list of new features in watchOS 11.

watchOS 11 Check In

From the bold declaration of the 1984 ad to the thrilling presentations of WWDC 2024, Apple’s ability to captivate and innovate remains as strong as ever. As a 50-year-old journalist who has covered technology for the last three decades, I have truly enjoyed experiencing each stage of that innovation. I’m excited to see what Apple Intelligence has in store for us over the next few decades.

If you’re eager to explore the future of wearable technology, try the public beta. Be cautious, though: once it’s installed, you can’t roll back to the previous version without shipping the watch to Apple for service. For more detailed information on watchOS 11 features, visit Apple’s watchOS 11 preview page.

The post From Orwellian Dystopia to Apple Watch Ultra: Apple’s Journey to watchOS 11 Public Beta first appeared on Yanko Design.