Following its recent AI makeover of Gmail, Google is bringing more Gemini-powered tools to Chrome. Starting today, a host of new features are rolling out for the browser, with more to come over the next few months.

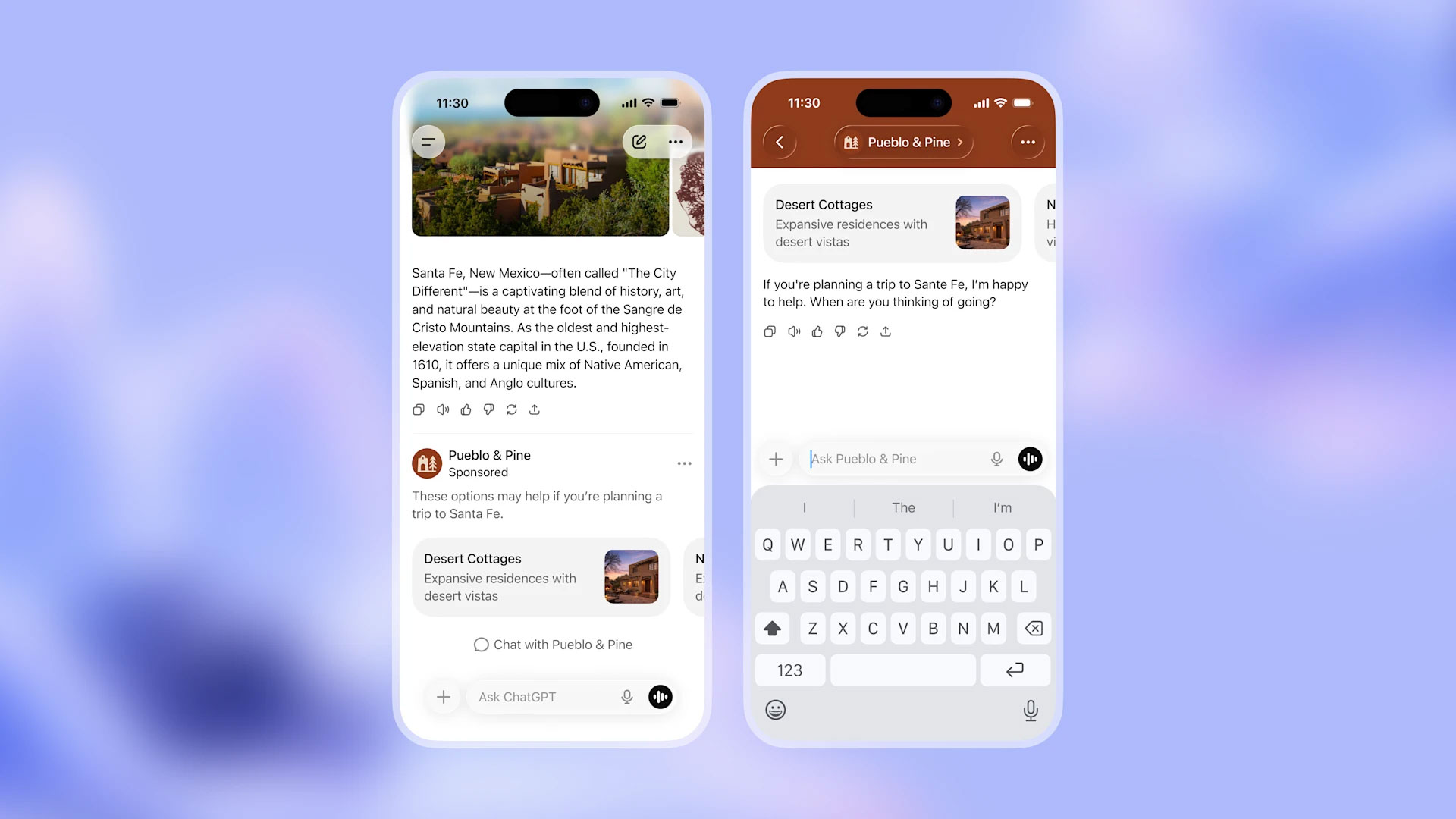

The first of the new features is a sidebar. Available to all Gemini in Chrome users, the interface allows you to chat with Gemini and keep a conversation going across multiple tabs. Google suggests the sidebar is useful for multitaskers. "Our testers have been using it for all sorts of things: comparing options across too-many-tabs, summarizing product reviews across different sites, and helping find time for events in even the most chaotic of calendars," the company writes.

The sidebar is also where you access the second new feature Google is adding to Chrome. Following its successful rollout within the Gemini app, Nano Banana, Google's in-house image generator, is available directly inside of the browser. With the addition, you won't need to open a new tab when you want Gemini to make you an AI image. You also won't need to download and upload a file when you want Gemini to edit an existing image for you. Instead, you can complete both of those tasks from any of your open tabs, thanks to the new sidebar.

Looking forward, Google plans to bring Personal Intelligence, which debuted inside of the Gemini app at the start of January, to Chrome in the coming months. Once the feature arrives, it will allow the browser to remember past conversations you've had with Gemini. In turn, Google says this will lead to a more personalized Chrome. "Personal Intelligence in Chrome transforms the browsing experience from a general purpose tool into a trusted partner that understands you and provides relevant, proactive, and context-aware assistance," the company said.

In the meantime, Gemini in Chrome already supports Google's Connected Apps feature, which allows the assistant to pull information from the company's other services, including Gmail and Calendar. During a press briefing, a Google employee demoed this feature by asking Gemini when their children would be on March break. Without being told where to look, Gemini sourced the correct time frame from the employee's email inbox.

Last but not least, Google is previewing a new auto browse feature inside of Chrome. In the demo the company showed, an employee asked Gemini to find and buy them the same winter jacket they bought a few seasons ago. The assistant first drafted a plan outlining how best to tackle the request. It reasoned the best place to start was with a search of the employee's email inbox to determine the correct model and size of jacket. It then went shopping.

While Gemini was working on this task, the employee was free to continue browsing in Chrome. At several points in the process, the assistant paused to obtain the employee's permission before moving forward. For instance, it stopped when it needed login credentials, and again when it needed a credit card number to complete the purchase.

Judging from the demo, it will probably take you less time to do your online shopping and other browser tasks on your own. Google suggests the feature will appeal to those who are creatures of habit. If you order the same produce from a grocery delivery service every week, for example, Gemini can automate the ordering. Plus, the feature is in preview, so early testers probably won't be too put off by Gemini's slow pace. In any case, Google AI Pro and Ultra subscribers in the US can try auto browse starting today.

This article originally appeared on Engadget at https://www.engadget.com/ai/google-brings-its-nano-banana-image-generator-to-chrome-180000104.html?src=rss