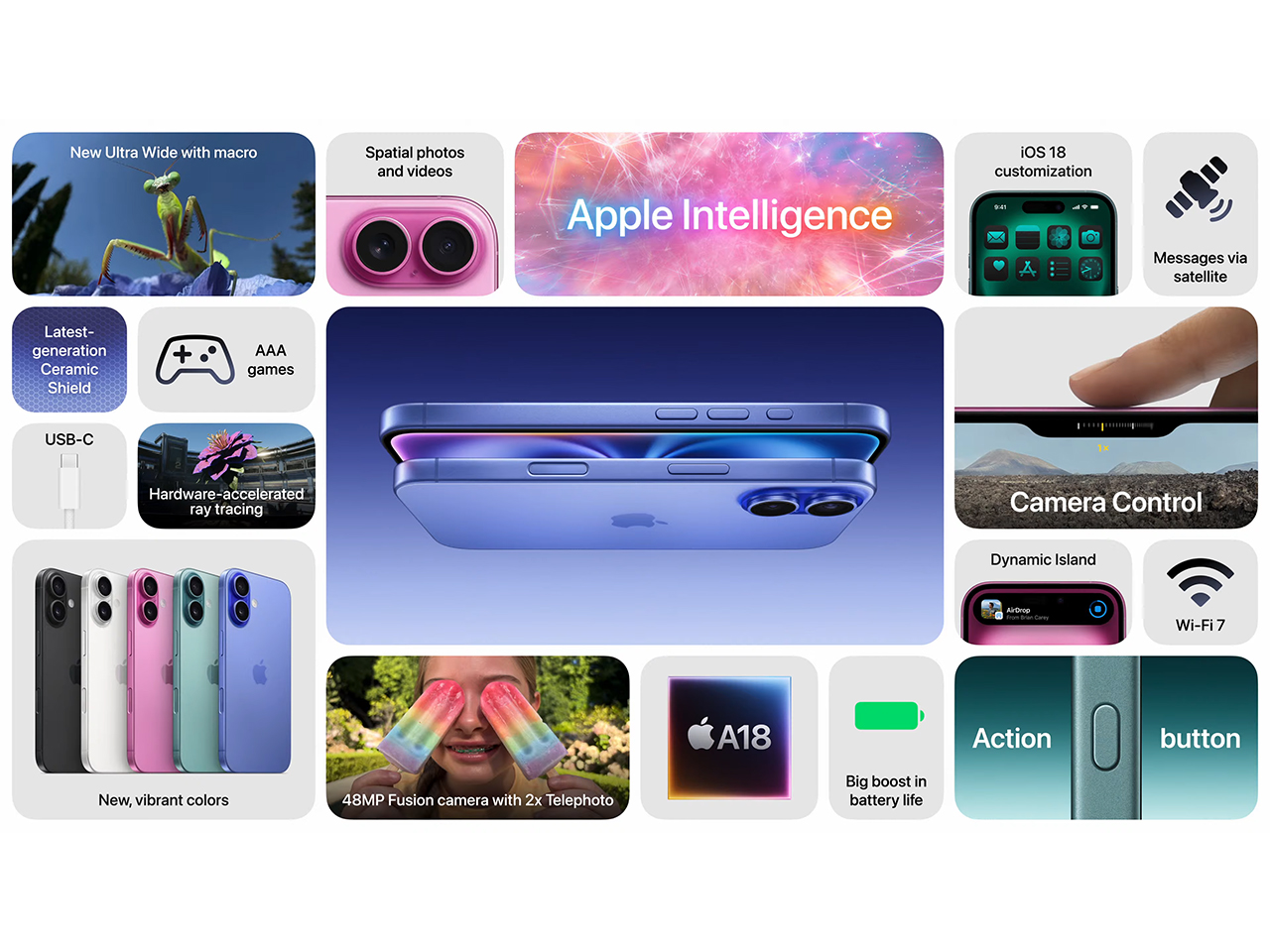

Apple has a reputation for taking established ideas and refining them into seamless, intuitive features, and it looks like they’ve done it again with their new Visual Intelligence technology in the iPhone 16. In contrast to Google Lens, which primarily scans objects or text in photos and returns basic web-based results, Apple’s Visual Intelligence integrates advanced generative models and contextual awareness, creating a richer, more interactive experience. This blend of on-device intelligence and private cloud computing not only delivers more relevant information but does so in a way that feels personal and purposeful.

Let’s dive into why Apple’s Visual Intelligence may have just overshadowed Google Lens, and how it’s bringing more powerful insights to users right through the iPhone 16’s camera. Before we do, it’s important to note that Google has, in fact, demonstrated Gemini’s ability to ‘see’ the world around you and provide context-based insights. However, it seems like a lot of those features are limited to Pixel phones because of their AI-capable Tensor chips. While Google Lens (an older product) is available across the board on both iOS and Android devices, Apple’s Visual Intelligence gives iPhones a powerful multimodal AI feature that existing Apple users would otherwise have to switch to a Google Pixel to get.

Going Beyond Surface-Level Search

Google Lens has been a reliable tool for identifying objects, landmarks, animals, and text. It essentially acts as a visual search engine, allowing users to point their camera at something and receive search results based on Google’s vast index of web pages. While this is undoubtedly useful, it stops at merely recognizing objects or extracting text to launch a related Google search.

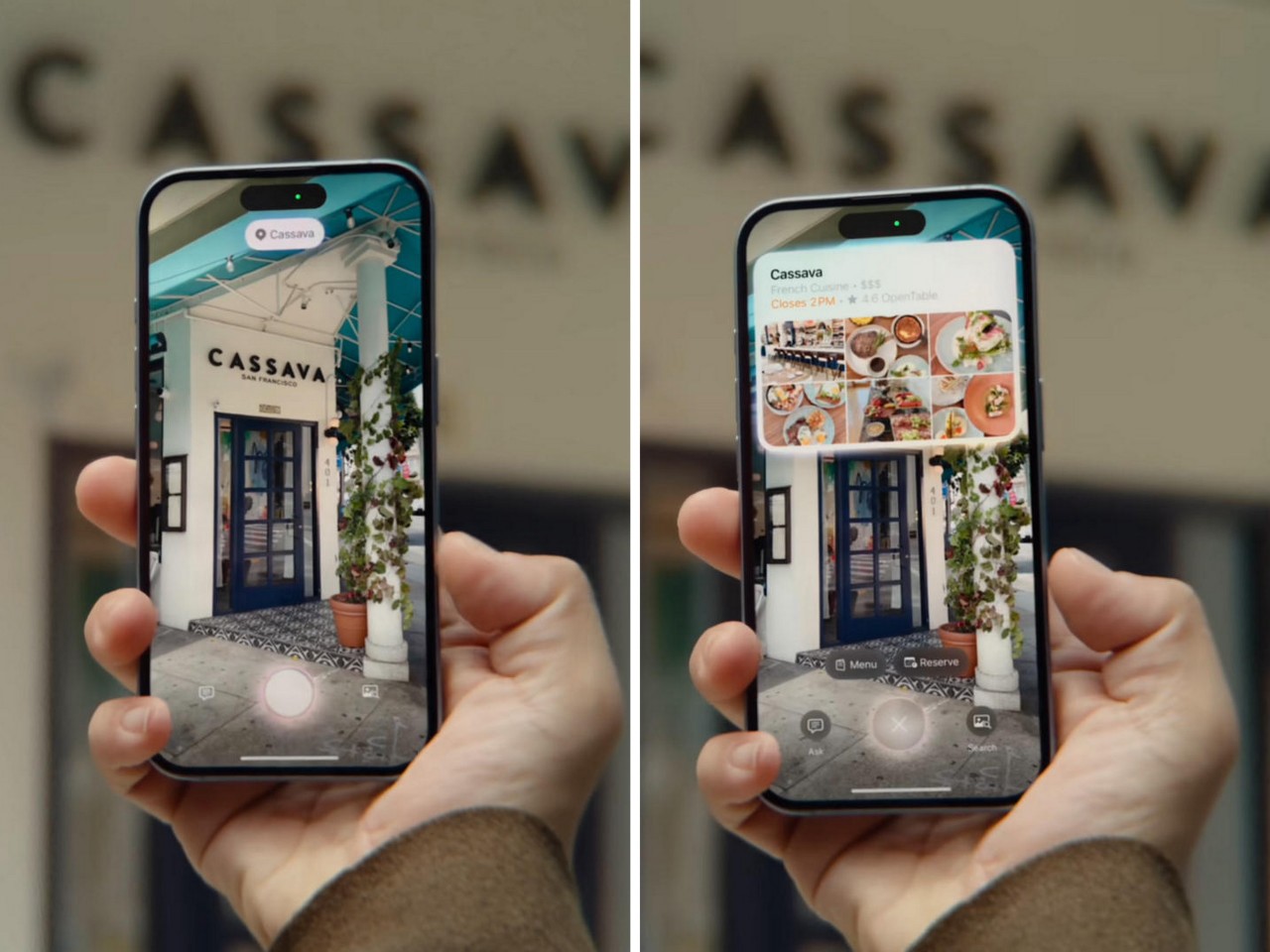

Apple’s Visual Intelligence, on the other hand, merges object recognition with contextual data retrieval. This means it can offer richer, more integrated information. During the Apple keynote, Craig Federighi demonstrated how users could point their iPhone at a restaurant and instantly retrieve operating hours, reviews, and options to make reservations—all without needing to open Safari or another app. Similarly, pointing the camera at a movie poster will not just yield a name or showtimes, but deeper context such as ratings, actor bios, and related media, providing a much more immersive and helpful experience.

The Power of Integration: Visual Intelligence and Third-Party Tools

One of the standout features of Apple’s Visual Intelligence is its seamless integration with third-party tools, offering expanded functionality. For instance, if you spot a bike you’re interested in, Visual Intelligence doesn’t just identify the brand and model; it can quickly connect you to third-party retailers via Google Search to check availability and pricing. This interplay between native intelligence and external databases exemplifies Apple’s mastery of pulling together useful, real-time data without breaking the user’s workflow.

But it doesn’t stop there. Apple has built-in support for querying complex topics with tools like ChatGPT. Imagine you’re reviewing lecture notes and stumble across a difficult concept. Simply hold your iPhone over the text and ask ChatGPT to explain it right on the spot. This deep contextual awareness and ability to provide real-time insights based on multiple external sources is something Google Lens simply cannot do at the same level.

Privacy at the Core

Another area where Apple shines is in its privacy-first approach to AI. All interactions through Visual Intelligence, such as identifying objects or pulling up information, are processed on-device or via Apple’s Private Cloud Compute, ensuring that no personal data is stored or shared unnecessarily. This is a stark contrast to Google’s cloud-based model, which has often raised concerns about the volume of user data being processed on external servers. By keeping the majority of computation on the device, Apple delivers peace of mind for privacy-conscious users—an area that Google has historically struggled with.

A Broader Reach: Enabling Personal Context

One of the most significant advantages of Apple’s approach is its deep integration into your phone’s personal data. Visual Intelligence doesn’t just analyze what’s in front of the camera; it connects the dots with your past interactions. For example, Siri, now supercharged with Visual Intelligence, can identify the contents of your messages or your calendar appointments and offer contextual suggestions based on what you’re viewing. If you’re looking at a flyer for an event, Visual Intelligence will not only retrieve details about the event but also cross-reference it with your schedule to automatically add it to your calendar—again, without having to lift a finger.

Google Lens, by comparison, lacks this deep personal integration. It’s effective as a standalone visual search tool but hasn’t yet reached the level of intuitive, user-centered design that Apple has mastered.

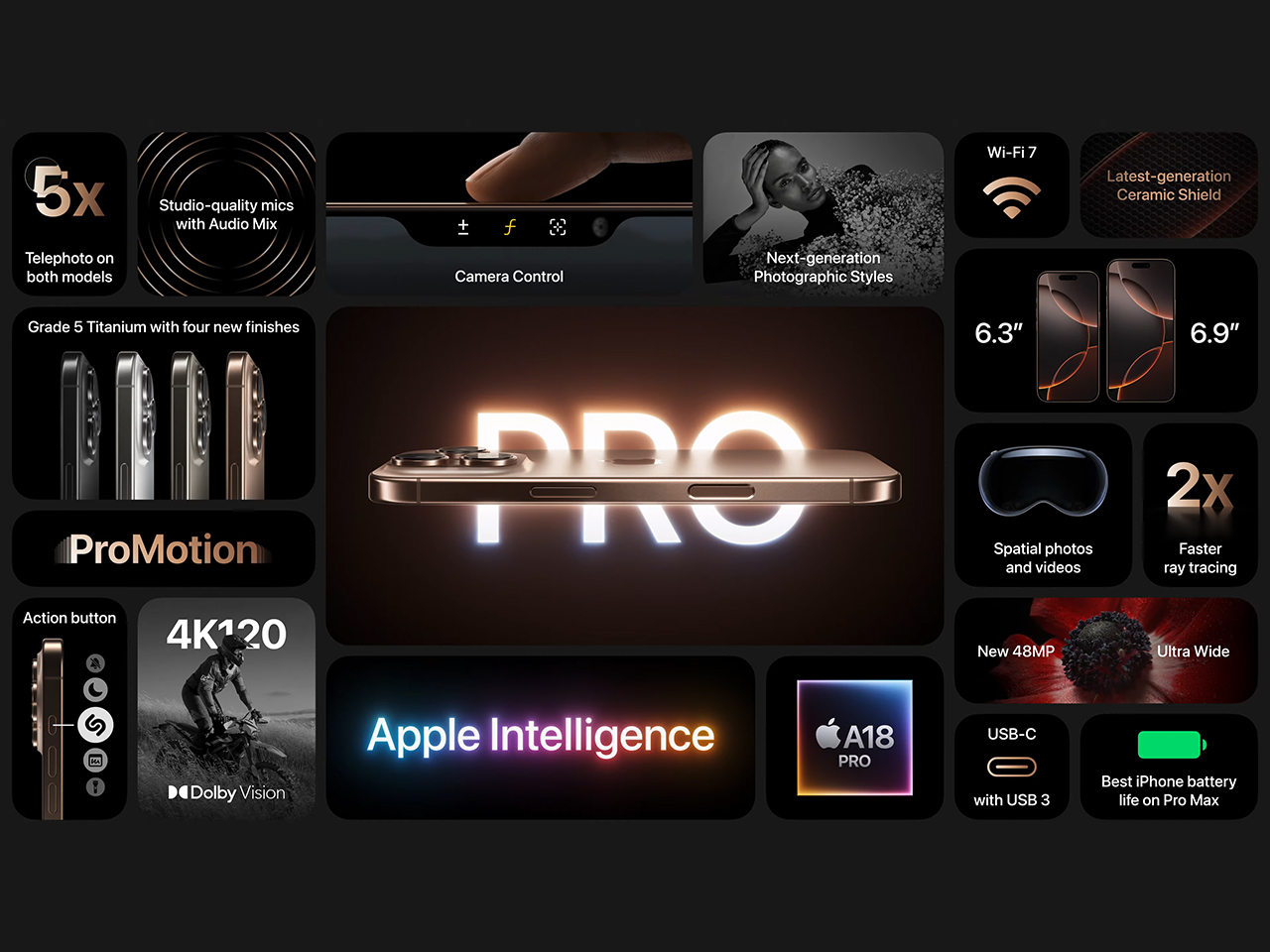

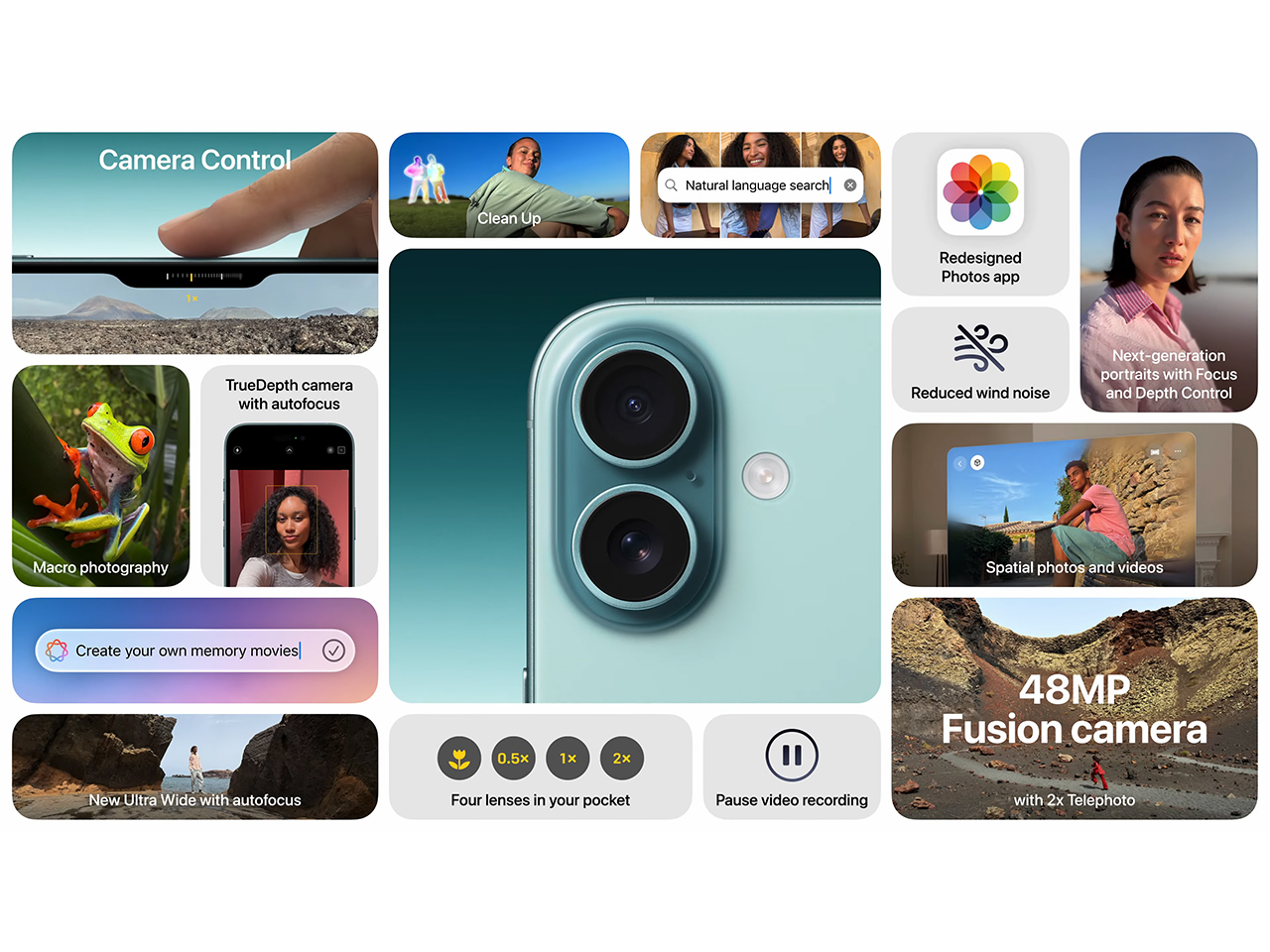

A New Era for Intelligent Photography

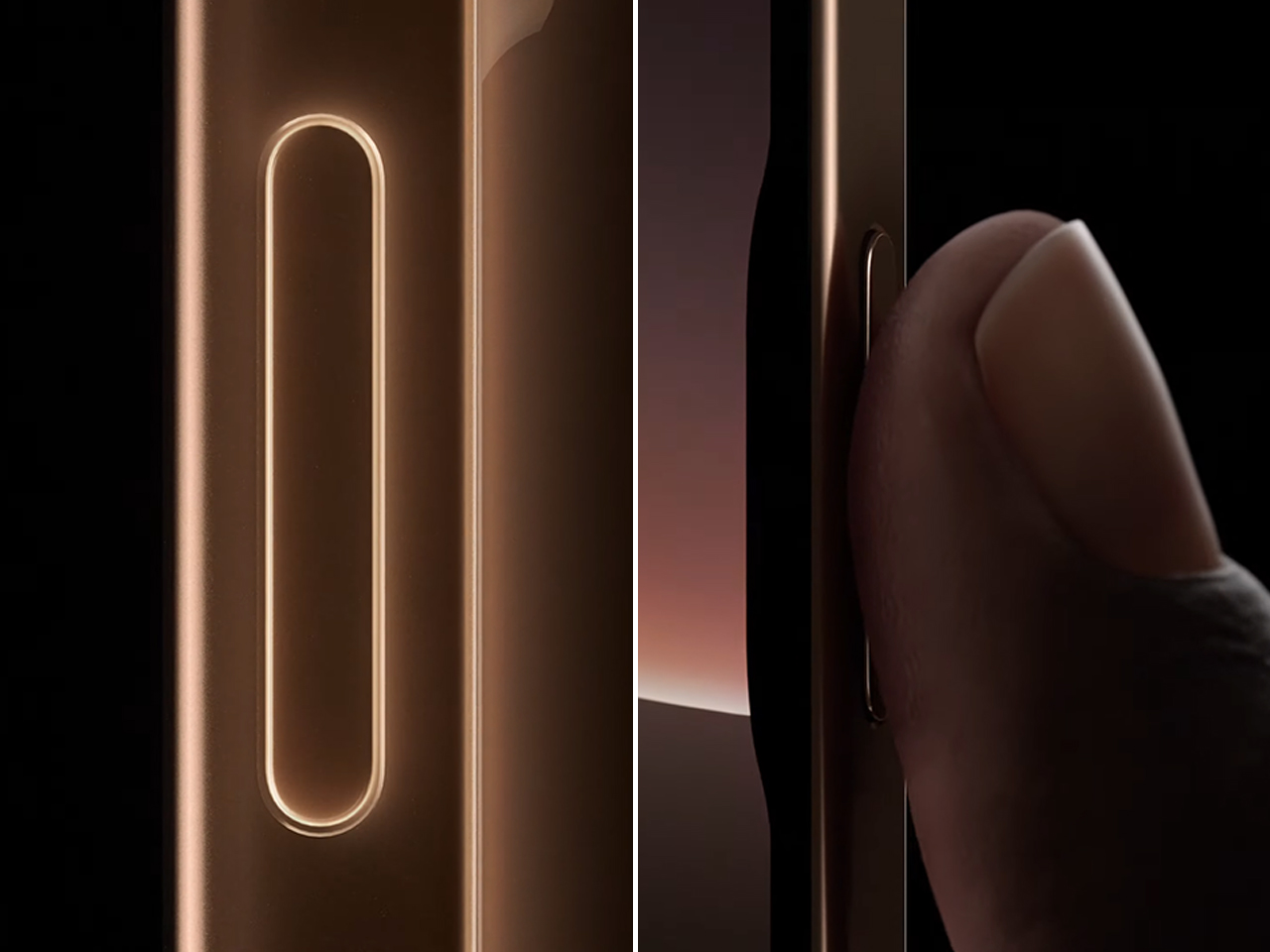

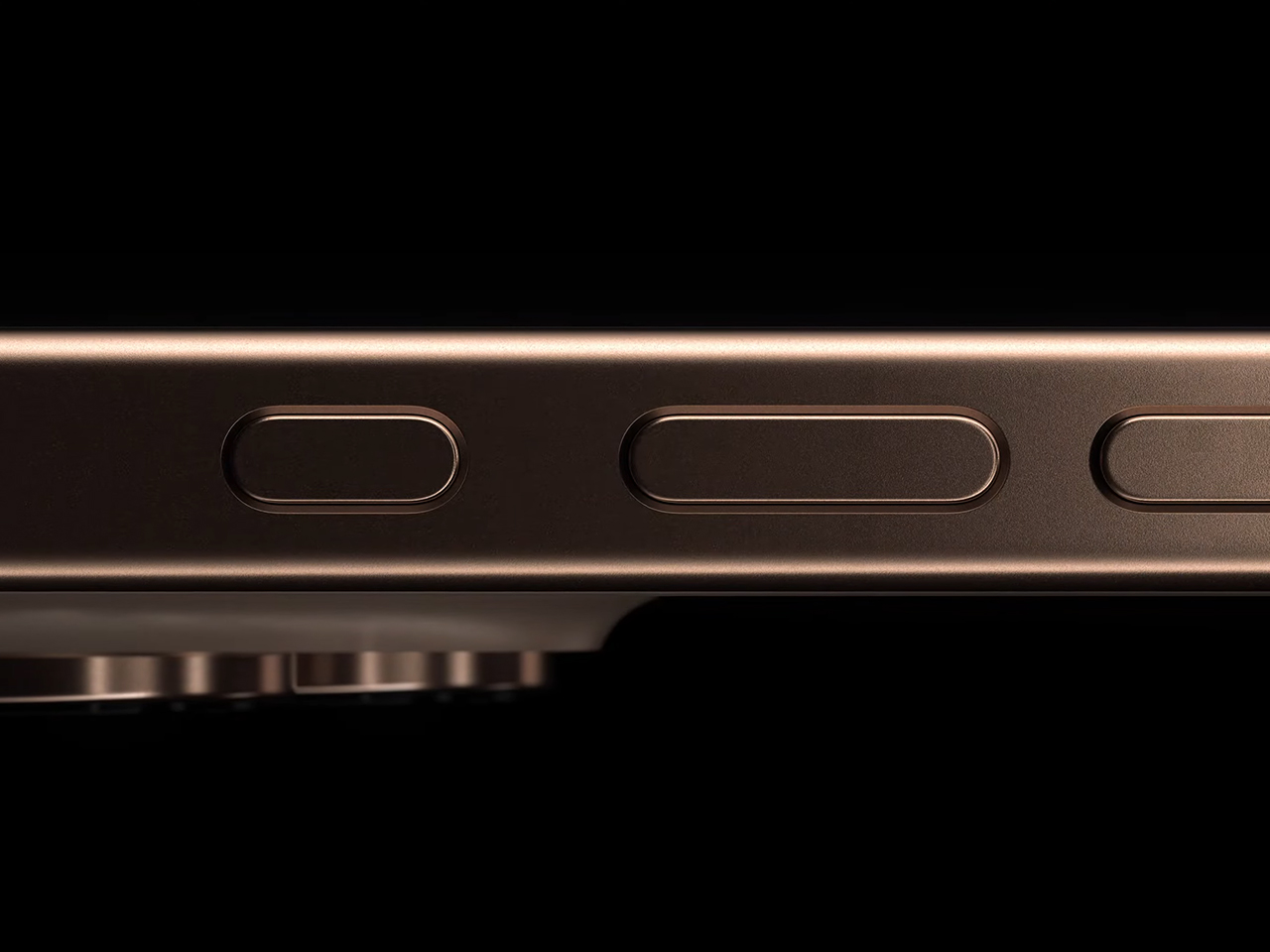

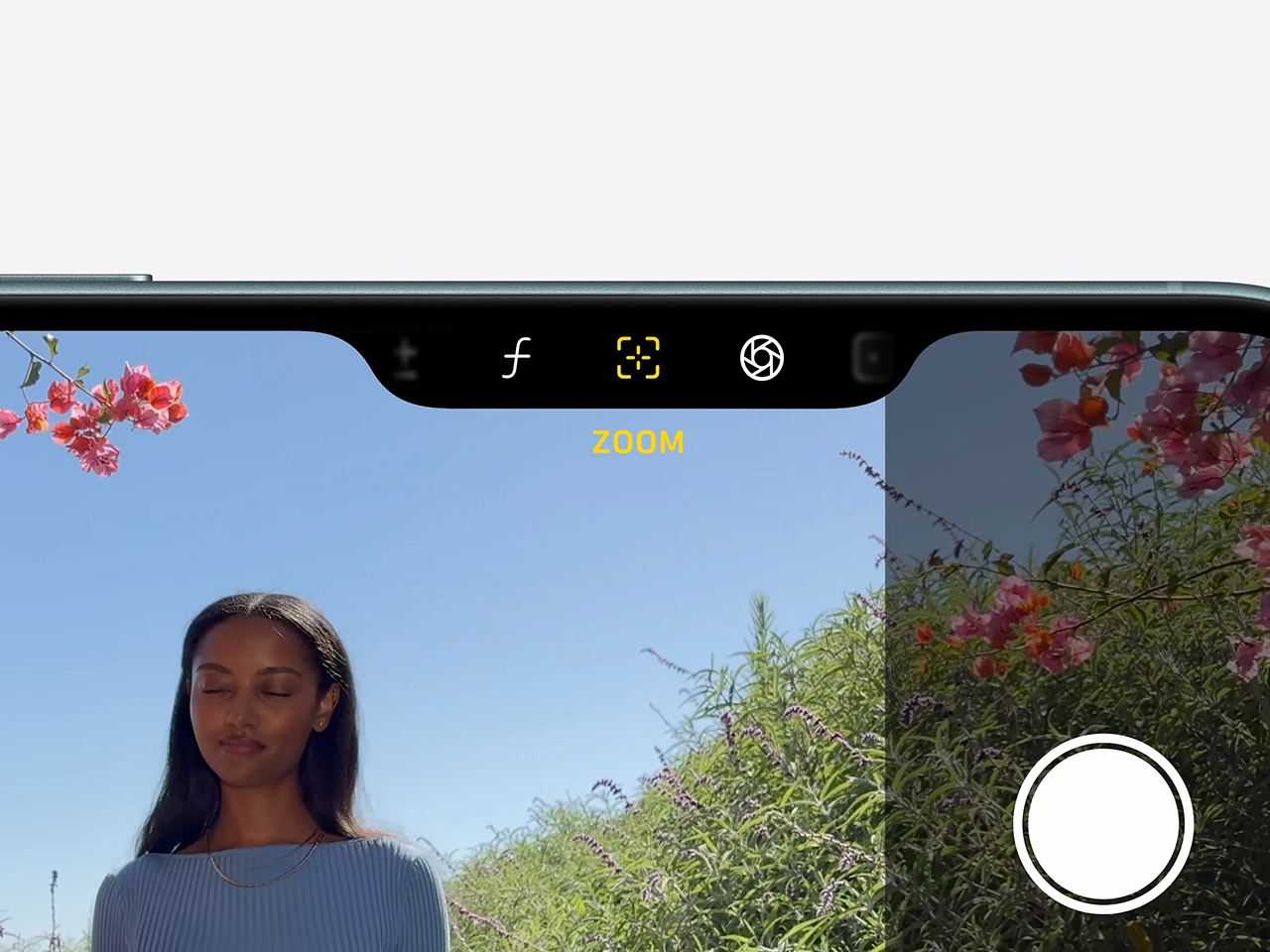

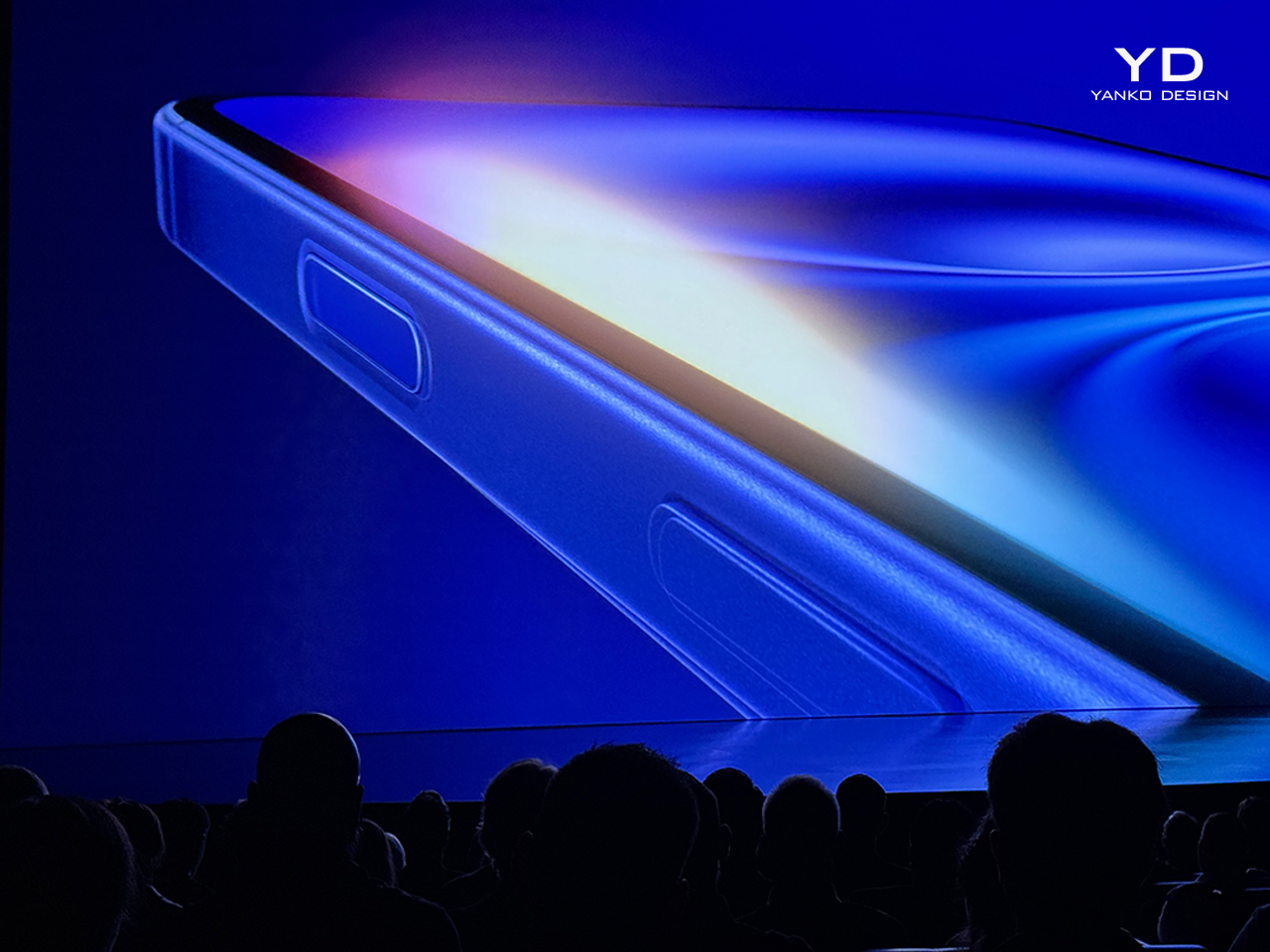

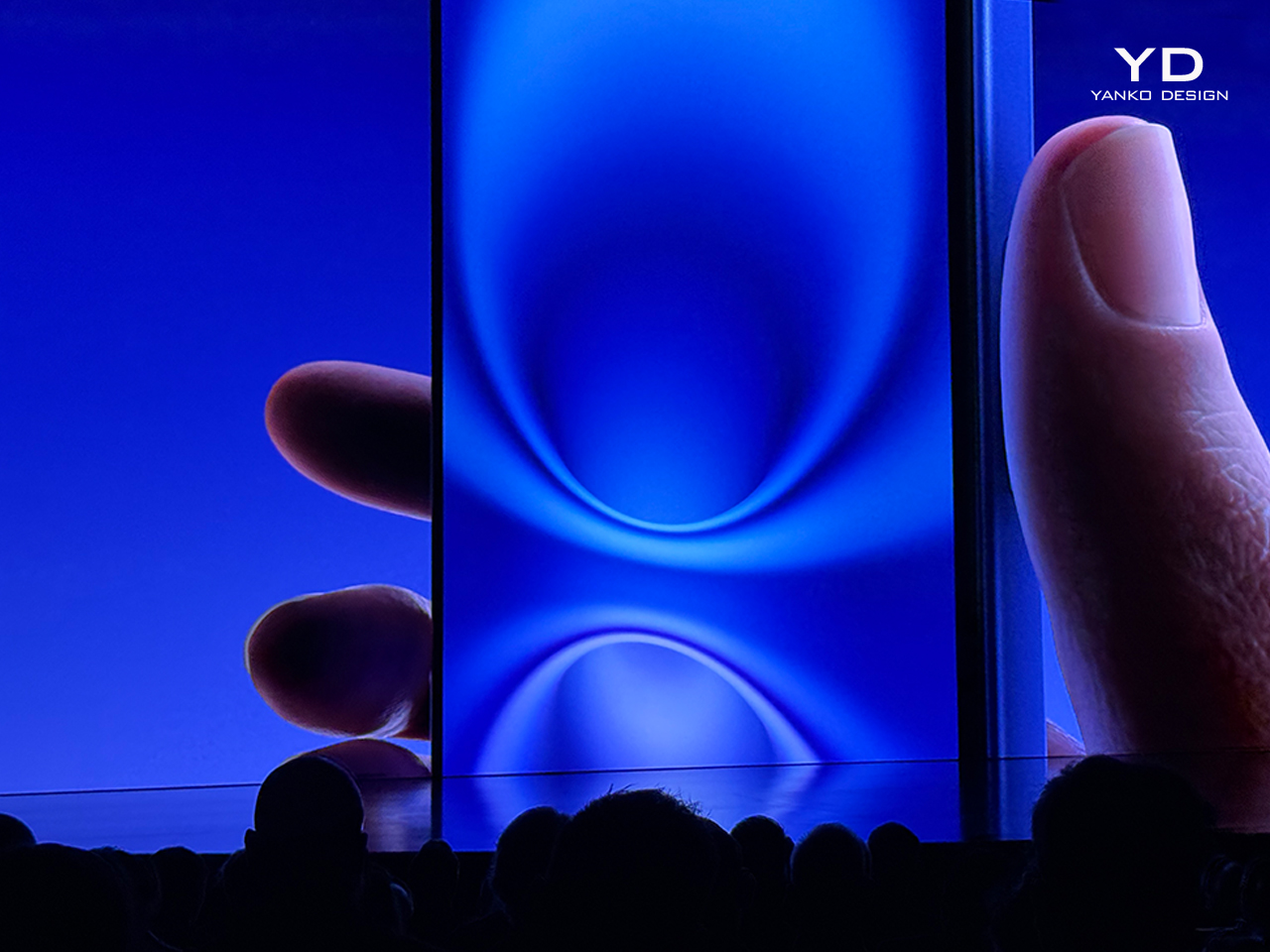

Apple’s innovation also extends into how we interact with our cameras. The new Camera Control on the iPhone 16 doubles as a gateway to Visual Intelligence. This means users can quickly snap a photo and receive actionable insights immediately. With a simple press of the Camera Control, users can tap into features like instant translations, object recognition, or even educational tools like ChatGPT.

Google Lens, while impressive in its object recognition, doesn’t offer this seamless experience. It requires users to jump between apps or tabs to get additional information, while Apple’s integration means the iPhone is one fluid tool—camera, intelligence, and action all in one place.

Apple Executes Where Google Initiated

Google Lens may have launched first, but Apple has undeniably refined and expanded the concept. It’s a tendency that we’ve come to know and love about Apple: they usually don’t believe in being first to market, but rather in executing features so well that people tend to ignore the competition. They did so with the Vision Pro and with Apple Intelligence. Visual Intelligence is a bold step forward, leveraging on-device power and privacy to deliver more meaningful, contextual insights. Where Google Lens excels at basic object recognition, Apple’s approach feels more like a true assistant, offering deeper information, smarter integrations, and a more secure experience.

The post Apple’s New ‘Visual Intelligence’ feature on the iPhone 16 basically makes Google Lens obsolete first appeared on Yanko Design.