Cross-language conversations create a familiar kind of friction. You hold a phone over menus, miss half a sentence while an app catches up, or watch a partner speak fast in a meeting while your translation lags behind. Even people who travel or work globally still juggle apps, hand-held translators, and guesswork just to keep up with what is being said in the room, which pulls attention away from the actual conversation.

Leion Hey2 is translation that lives where your eyes already are, in a pair of glasses that quietly turns speech into subtitles without asking you to look down or pass a device back and forth. The glasses were built for translation first, not as an afterthought on top of entertainment or social features, and they are meant to last through full days of meetings or classes instead of dying halfway through, when you need them most.

Designer: LLVision

Glasses That Care About Conversation, Not Spectacle

Leion Hey2 is a pair of professional AR translation glasses from LLVision, a company that has spent more than a decade deploying AR and AI in industrial and public-sector settings. Hey2 is not trying to be an all-in-one headset; it is engineered from the ground up for real-time translation and captioning, supporting more than 100 languages and dialects with bidirectional translation and latency under 500 ms in typical conditions, plus 6–8 hours of continuous translation on a single charge.

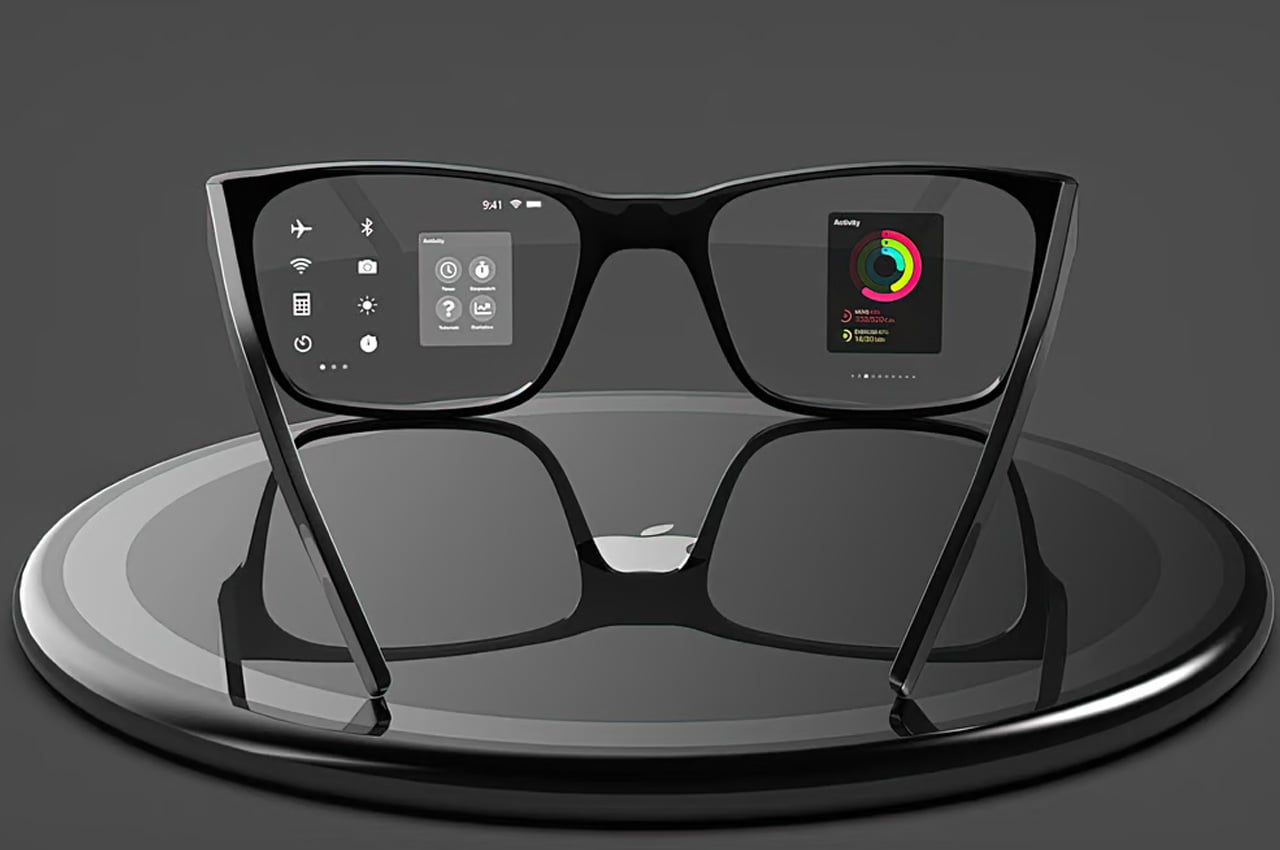

Hey2 is designed to wear like everyday eyewear rather than a gadget. The classic browline frame, 49 g weight, magnesium-lithium alloy structure, and adjustable titanium nose pads are all chosen to make it feel like a normal pair of glasses you forget you are wearing. A stepless spring hinge adapts to different faces. The camera-free, microphone-only design follows GDPR-aligned privacy principles and is supported by a secure cloud infrastructure built on Microsoft Azure, which helps keep both wearers and bystanders more comfortable in sensitive environments.

Subtitles in Your Line of Sight

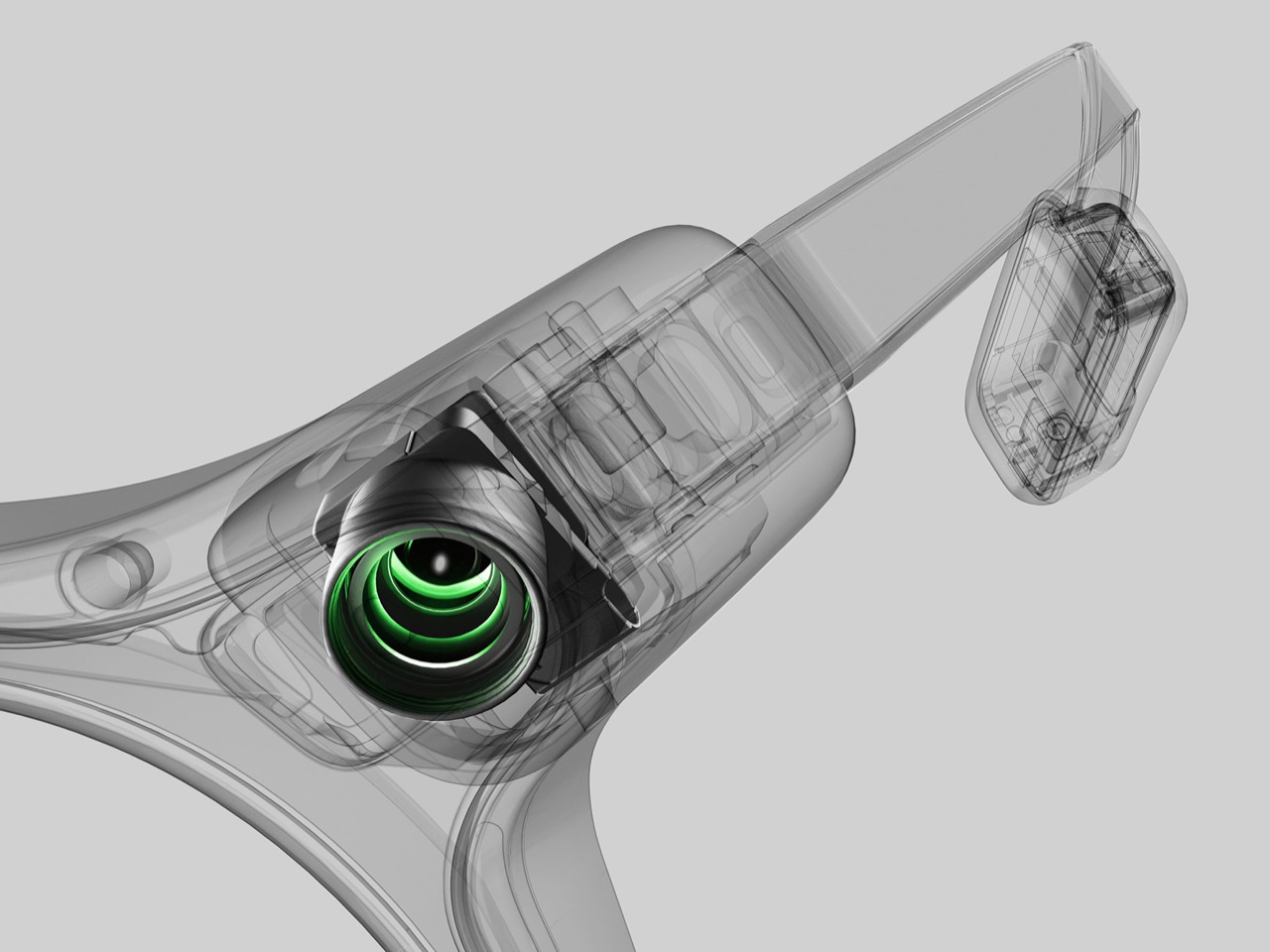

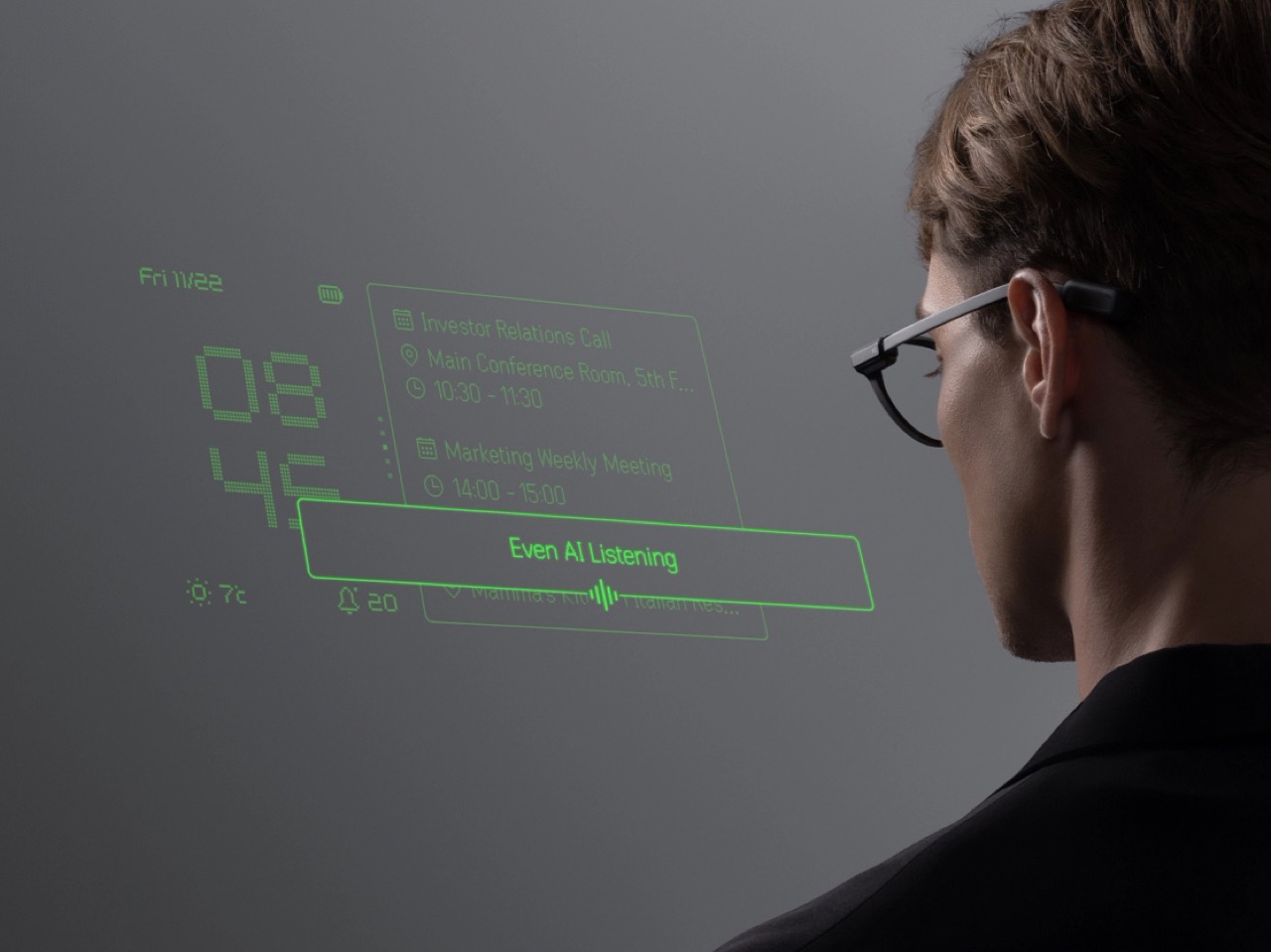

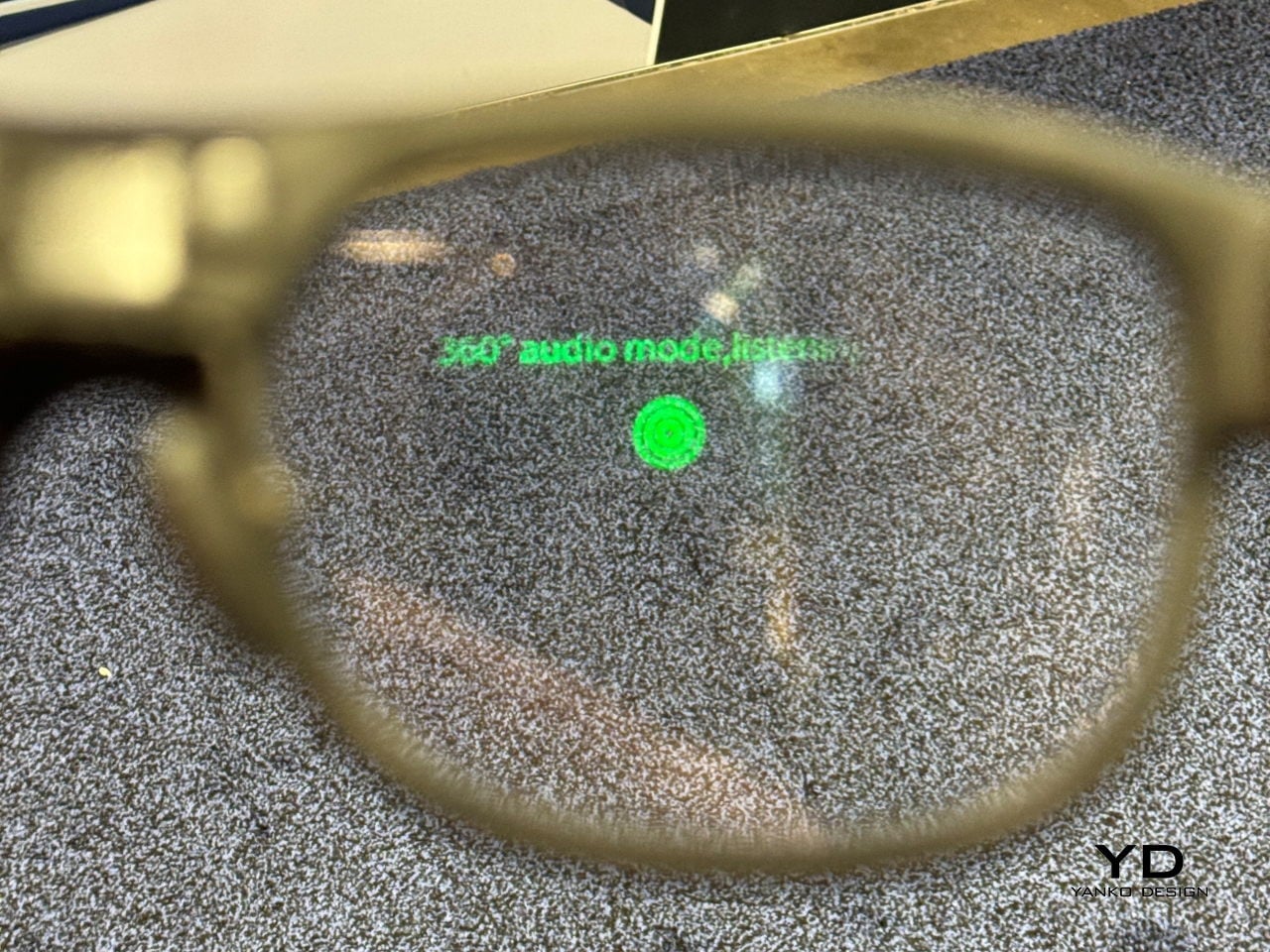

Hey2 uses waveguide optics and a micro-LED engine to project crisp, green subtitles into both eyes, with a 25-degree field of view and more than 90% passthrough so the real world stays bright. The optical engine is tuned to reduce rainbow artifacts by up to 98%, keeping text stable and readable in different lighting conditions, while three levels of subtitle size and position let you decide how prominently captions sit in your forward field of view.

The audio side relies on a four-microphone array that performs 360-degree spatial detection to identify who is speaking, while face-to-face directional pickup prioritizes the person within roughly a 60-degree cone in front of you. A neural noise-reduction algorithm uses beamforming and multi-channel processing to isolate the main voice, which helps in noisy restaurants, busy trade-show floors, or classrooms where questions come from different directions, without forcing you to constantly adjust settings.
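To make the directional-pickup idea concrete, here is a minimal sketch of how a forward cone could be used to choose the primary speaker once the microphone array has estimated where each voice is coming from. This is not LLVision's implementation: the `Source` type, the `pick_primary` function, the loudness field, and the fall-back to the loudest voice overall are all assumptions made for illustration. Only the roughly 60-degree cone comes from the description above.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

# Full width of the forward cone; the article gives "roughly 60 degrees".
CONE_WIDTH_DEG = 60.0


@dataclass(frozen=True)
class Source:
    """A detected voice (hypothetical structure, for illustration only)."""
    label: str
    azimuth_deg: float  # 0 = straight ahead of the wearer, positive = to the right
    level_db: float     # estimated loudness of the voice


def angular_offset(azimuth_deg: float) -> float:
    """Absolute angle between a source and straight ahead, in the range 0..180."""
    wrapped = (azimuth_deg + 180.0) % 360.0 - 180.0
    return abs(wrapped)


def pick_primary(
    sources: Sequence[Source],
    cone_width_deg: float = CONE_WIDTH_DEG,
) -> Optional[Source]:
    """Prefer the loudest voice inside the forward cone.

    Assumption: if nobody is inside the cone, fall back to the loudest
    voice overall rather than returning nothing.
    """
    if not sources:
        return None
    half_width = cone_width_deg / 2.0
    in_cone = [s for s in sources if angular_offset(s.azimuth_deg) <= half_width]
    candidates = in_cone or list(sources)
    return max(candidates, key=lambda s: s.level_db)


if __name__ == "__main__":
    room = [
        Source("partner", azimuth_deg=10.0, level_db=60.0),
        Source("waiter", azimuth_deg=95.0, level_db=72.0),
        Source("table behind", azimuth_deg=180.0, level_db=65.0),
    ]
    primary = pick_primary(room)
    print(primary.label if primary else "no speech detected")
```

In this sketch the conversation partner wins even though the waiter is louder, because only the partner sits within 30 degrees of straight ahead. A real product would combine this kind of selection with beamforming and noise reduction on the raw audio, which the sketch does not attempt.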

Modes That Support Work, Learning, and Accessibility

In Translation and Free Talk modes, foreign speech is converted into your language as subtitles in your line of sight, so you can mix languages freely and still follow long-form speech without constantly checking a screen. In Free Talk, Hey2 provides subtitles for what you hear and spoken translation for what you say, turning a two-language conversation into something that feels more like a normal chat than a tech demo. For longer days, the charging case extends total use to 96 hours across 12 recharges.

Teleprompter mode scrolls your script in your line of sight and advances it automatically as you speak, which is useful for lectures, pitches, or keynotes where you want to keep eye contact without glancing at notes. AI Q&A, triggered by a tap on the temple, draws on ChatGPT-powered answers for discreet look-ups. Captions mode turns fast speech into clean text, helping students, professionals, and Deaf or hard-of-hearing users stay on top of what is being said, even in noisy environments where handheld devices struggle.

A Different Kind of AR Story

When Leion Hey2 steps onto the CES 2026 stage, it represents a quieter kind of AR story. Instead of chasing spectacle, it narrows the brief to something very human, helping people speak, listen, and be understood across languages and hearing abilities. For a show that often celebrates what technology can do, Hey2 is a reminder that sometimes the most interesting innovation is the one that simply lets you keep your head up and stay in the conversation.

The post Leion Hey2 Brings First AR Glasses Built for Translation to CES 2026 first appeared on Yanko Design.