Last week at CES, Lego introduced its new Smart Play system, with a tech-packed Smart Brick that can recognize and interact with sets and minifigures. It was unexpected and delightful to see Lego come up with a way to modernize its bricks without the need for apps, screens or AI.

So I was a little surprised this week when the Lego Education group announced that its latest initiative is the Computer Science and AI Learning Solution. After all, generative AI feels like the antithesis of Lego’s creative values. But Andrew Silwinski, Lego Education’s head of product experience, was quick to defend Lego’s approach. He noted that fluency in the tools behind AI is less about generating sloppy images or music and more about expanding what it means to teach computer science.

“I think most people should probably know that we started working on this before ChatGPT [got big],” Silwinski told Engadget earlier this week. “Some of the ideas that underline AI are really powerful foundational ideas, regardless of the current frontier model that's out this week. Helping children understand probability and statistics, data quality, algorithmic bias, sensors, machine perception. These are really foundational core ideas that go back to the 1970s.”

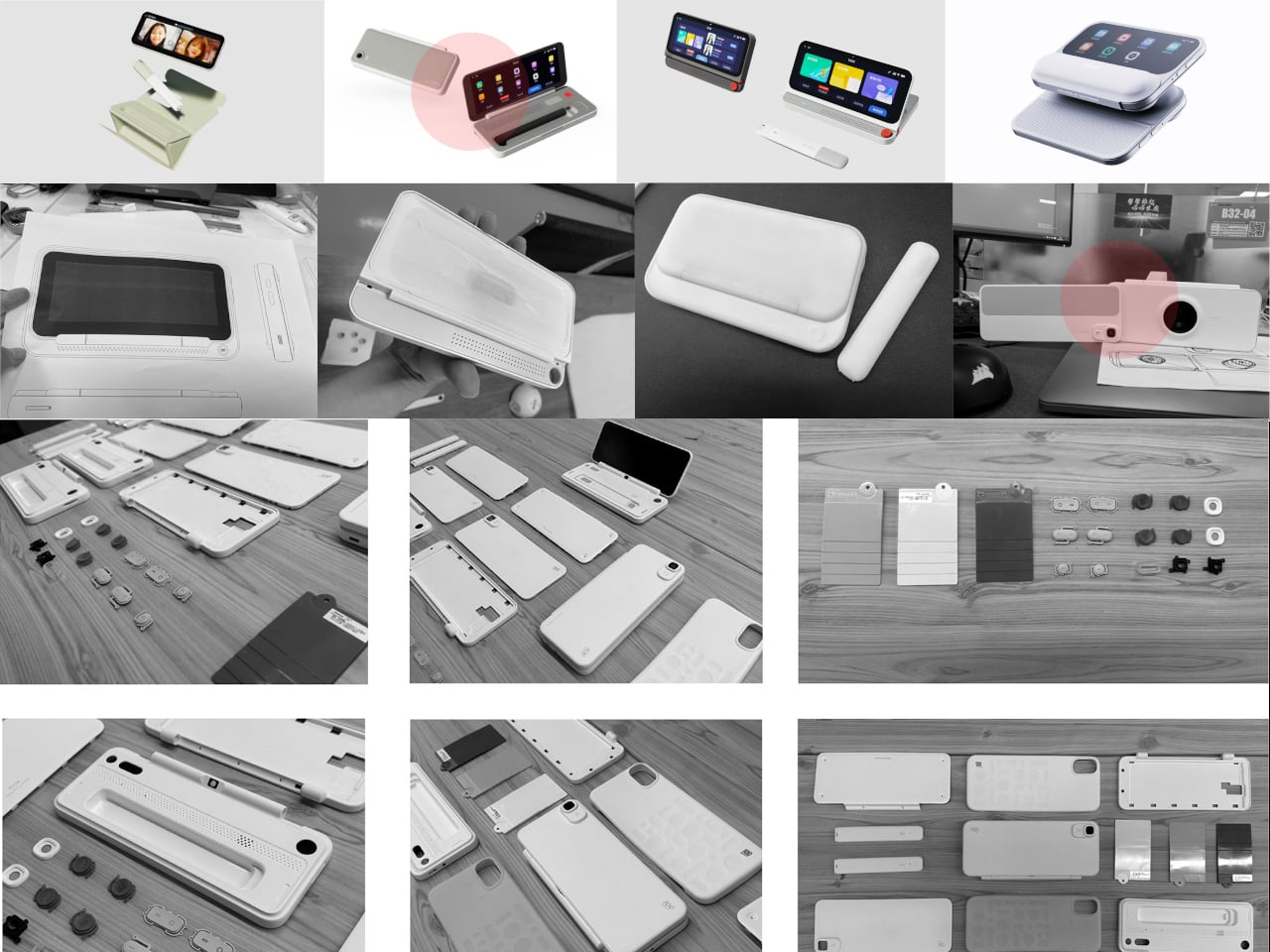

To that end, Lego Education designed courses for grades K-2, 3-5 and 6-8 that combine Lego bricks, additional hardware and lessons that introduce the fundamentals of AI as an extension of existing computer science education. Each kit is designed for four students to work on together, with teacher oversight. Much of this stems from a study Lego commissioned, which found that teachers often lack the right resources to teach these subjects. According to the study, half of teachers globally say “current resources leave students bored,” while nearly half say “computer science isn’t relatable and doesn’t connect to students’ interests or day to day.” Given kids’ familiarity with Lego and Lego Education’s decades of experience building courses like this, pushing in this direction seems like a logical step.

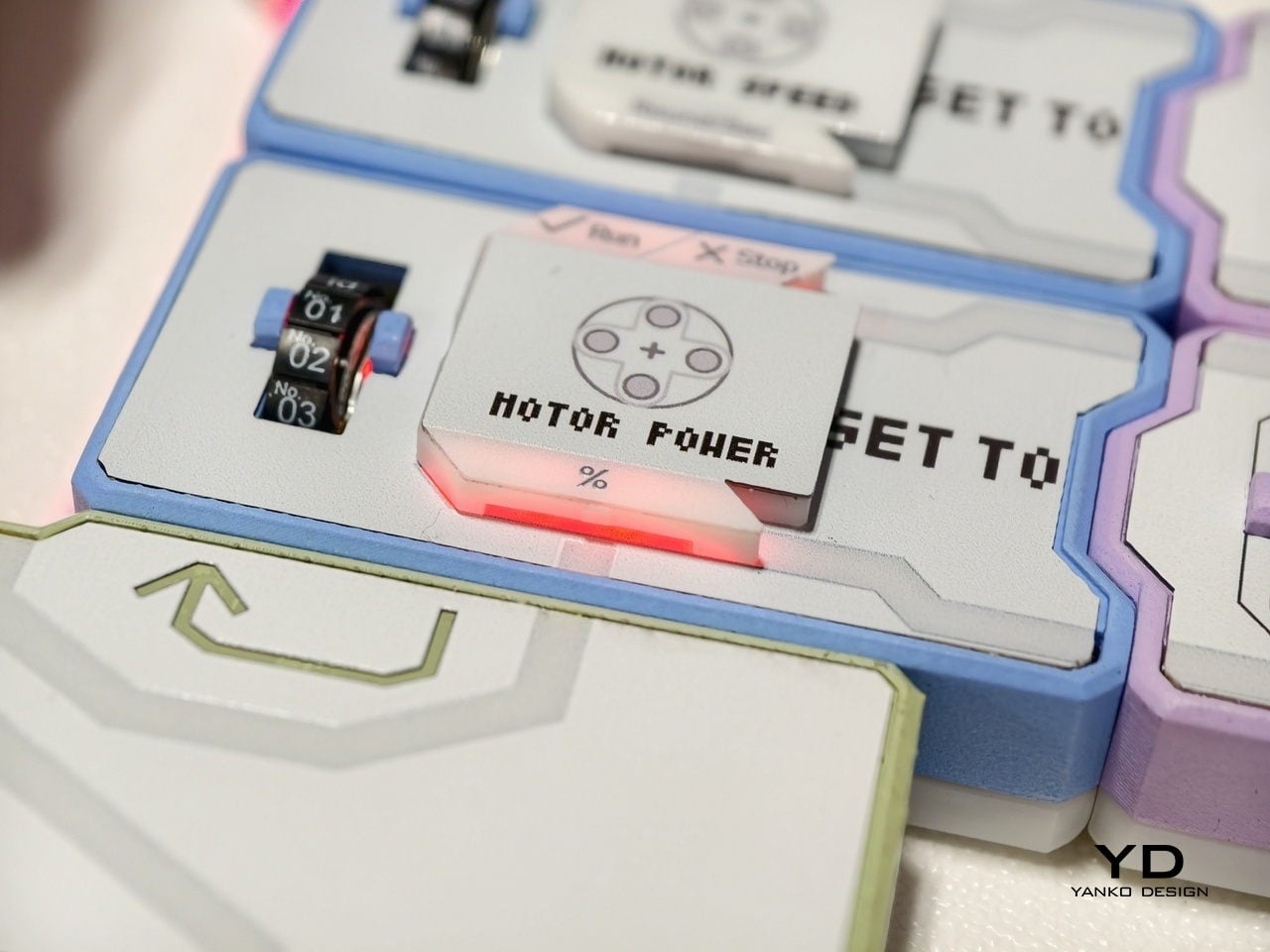

In Lego’s materials about the new courses, AI is far from the only subject covered. Coding concepts such as loops, event triggers, sequences and if/then conditionals are all on display, taught through Lego-built models paired with hardware that motorizes them. It feels more like a computer science course that also introduces AI concepts than something whose end goal is having kids build a chatbot.

In fact, Lego set a number of “red lines” for how it would introduce AI. “No data can ever go across the internet to us or any other third party,” Silwinski said. “And that's a really hard bar if you know anything about AI.” So instead of relying on the cloud, everything had to run local inference on, as Silwinski put it, “the 10-year-old Chromebooks you’ll see in classrooms.” He added that “kids can train their own machine learning models, and all of that is happening locally in the classroom, and none of that data ever leaves the student's device.”

Lego also says its lessons never anthropomorphize AI, something that is common in consumer-facing AI tools like ChatGPT and Gemini. “One of the things we're seeing a lot of with generative AI tools is children have a tendency to see them as somehow human or almost magical,” Silwinski said. “A lot of it's because of the conversational interface, it abstracts all the mechanics away from the child.”

Lego also recognized that it had to build a course that would work regardless of a teacher’s fluency in these subjects. So a big part of developing the course was making sure teachers had the tools they needed to stay on top of whatever lesson they’re teaching. “When we design and we test the products, we're not the ones testing in the classroom,” Silwinski said. “We give it to a teacher and we provide all of the lesson materials, all of the training, all of the notes, all the presentation materials, everything that they need to be able to teach the lesson.” Lego also took into account that some schools might introduce their students to these concepts starting in kindergarten, whereas others might jump straight to the grade 3-5 or 6-8 sets. To smooth out any bumps for students or teachers, Lego Education works with school districts and individual schools to provide an on-ramp for those starting at different levels of fluency.

While the idea of “teaching AI” initially seemed out of character for Lego, the approach it’s taking here actually reminds me a bit of Smart Play. With Smart Play, the technology is essentially invisible: kids can just open a set, start building and get all the benefits of the new system without hooking up to an app or a screen. In the same vein, Silwinski said that much of the work you can do with the Computer Science and AI kit doesn’t need a screen, particularly in the lessons designed for younger kids. The sets themselves also have a mode that acts like a mesh, letting you connect numerous motors and sensors together to build “incredibly complex interactions and behaviors” without even needing a computer.

For educators interested in checking out this latest course, Lego has single kits available for pre-order starting at $339.95, with shipping beginning in April. That price is for the K-2 set; the 3-5 and 6-8 sets cost $429.95 and $529.95, respectively. A single kit covers four students. Lego is also selling six-kit bundles, and school districts can request a quote for larger orders.

This article originally appeared on Engadget at https://www.engadget.com/ai/legos-latest-educational-kit-seeks-to-teach-ai-as-part-of-computer-science-not-to-build-a-chatbot-184636741.html?src=rss