The launch of the Apple Vision Pro has renewed interest in augmented reality and spatial computing, but its price tag, not to mention its limited availability, means very few will be able to see what the fuss is all about, pardon the pun. At the other end of the spectrum, headsets like the Meta Quest, designed primarily for VR and the metaverse, are more accessible but also less comfortable to wear, let alone carry with you everywhere. Fortunately, these aren’t the only options: AR glasses like the XREAL Air series have been trying to give everyone a taste of AR, regardless of what device they own. To make the experience even easier, XREAL is launching a curious new device that looks and acts almost like a smartphone, except that it’s dedicated to letting you not only consume but also create content in full 3D AR.

Designer: XREAL

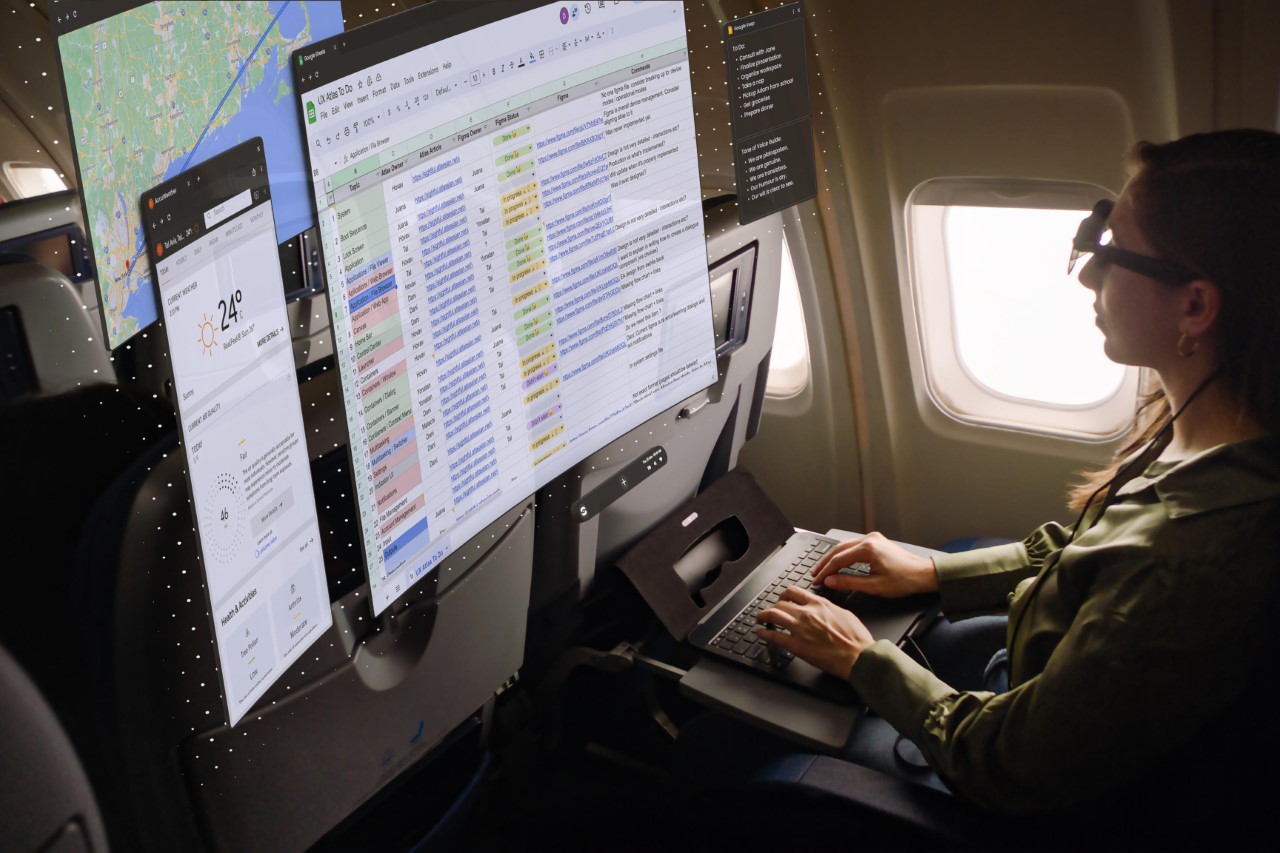

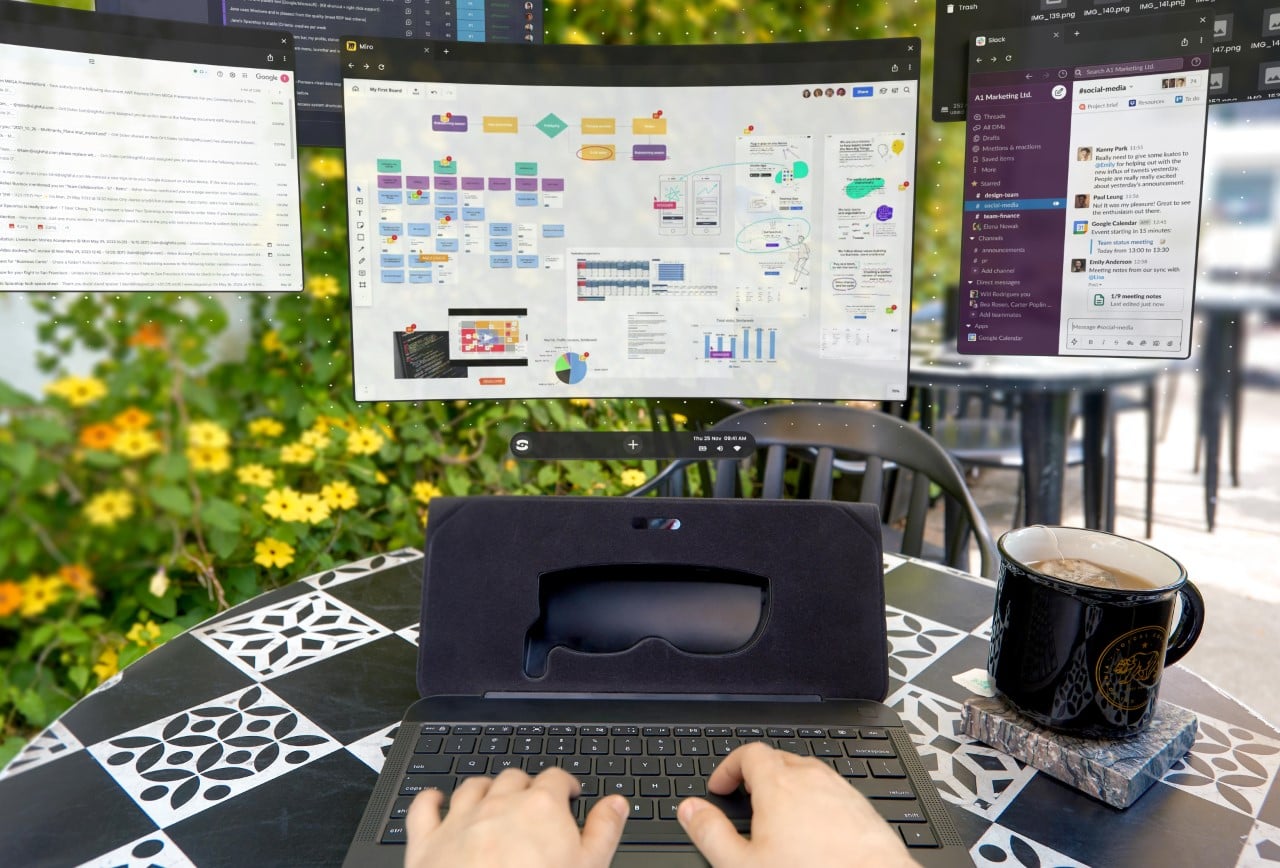

AR glasses practically act like second or external monitors for computers and smartphones, relying on the host device for all the computing, content, and even power. The glasses themselves provide the image projection hardware and sensors, which the host device can then use to do things like pin a screen to a specific location or display a wall of windows that follow your head movements. This design simplifies the setup and saves you from spending too much on powerful hardware that will quickly become outdated, but it also means the experience isn’t exactly optimized for AR.

The new XREAL Beam Pro solves that problem by offering a device and a user experience tailored specifically for augmented reality, especially around the brand’s own line of AR glasses. And it does so in a form that’s all too familiar these days: an Android phone. The device features a 6.5-inch 2400×1080 LCD touchscreen and runs a customized version of Android 14 with Google Play support. Just like a phone, it’s powered by a Snapdragon processor with up to 8GB of RAM and 256GB of internal storage. The similarities with a phone, however, end there.

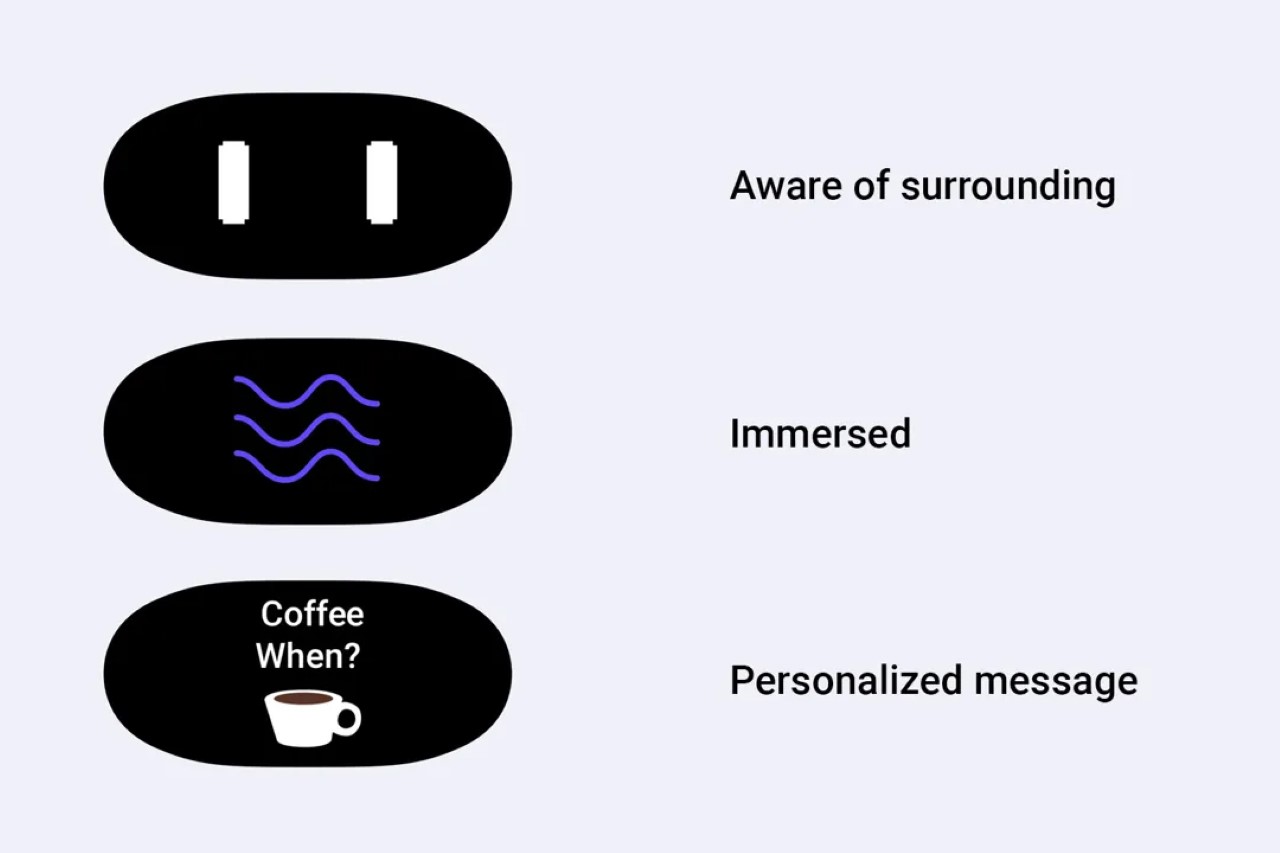

Running on top of Android is XREAL’s NebulaOS, a user interface designed for augmented reality that kicks in once you connect XREAL Air glasses or a later model. This software lets you enjoy “normal” 2D content as if it were made for AR: you can place two windows side by side, have them stay fixed in position “in the air” no matter where you turn your head, or have the display follow your head smoothly. Thanks to the sensors built into the glasses, you get either 3DoF tracking, which follows head rotation (XREAL Air, Air 2, Air 2 Pro), or 6DoF tracking, which also follows your movement through space (XREAL Air 2 Ultra), so you don’t have to manually readjust the screen each time.

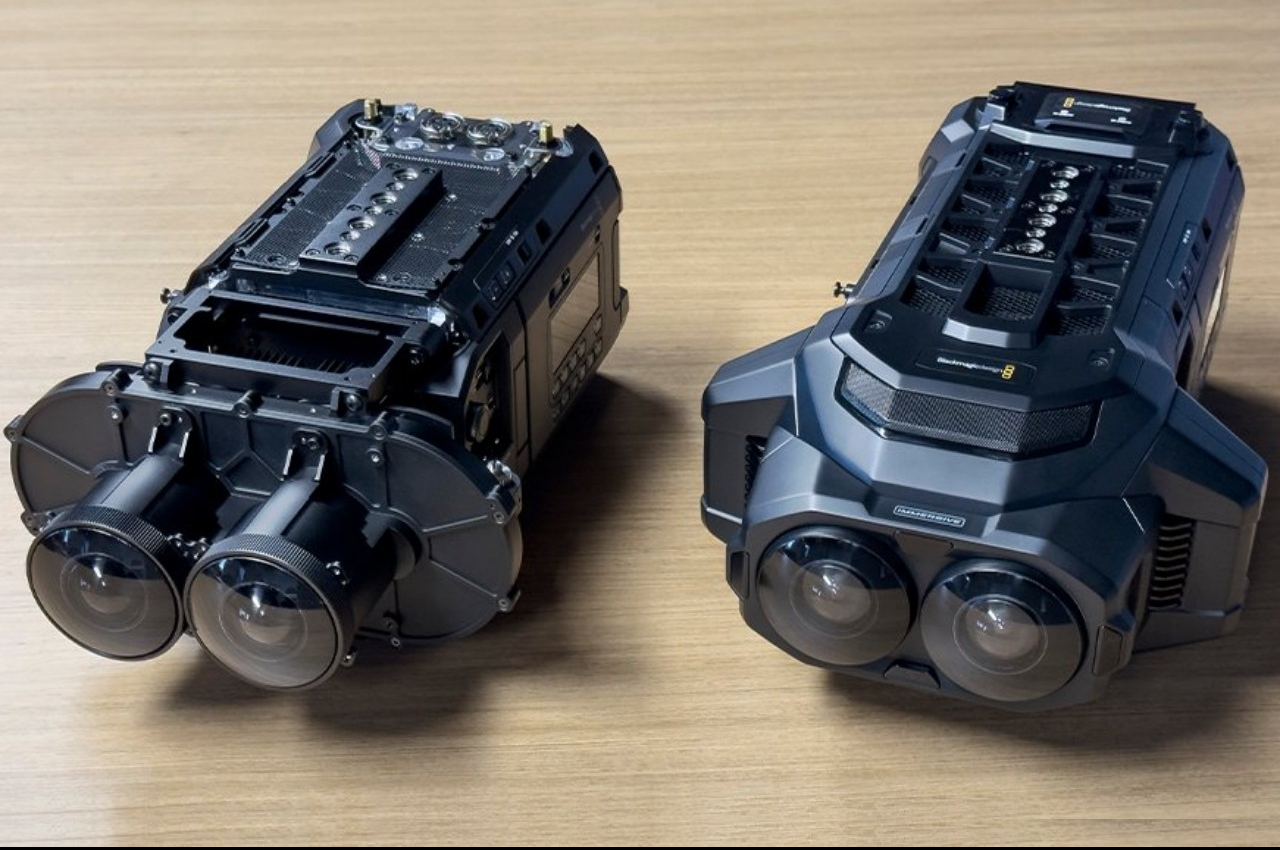

All this means you can enjoy your favorite Android games, streaming video, and even web browsing in an immersive AR environment, anytime, anywhere. Even better, the dual 50MP cameras on the back are intentionally spaced far apart to let you create 3D content as well. XREAL has partnered with many cloud service providers to bring as much content to your hands and eyes as possible, including NVIDIA’s CloudXR platform, the Amazon Luna and Xbox Cloud Gaming streaming services, and more.

That said, some people might be a bit confused by the XREAL Beam Pro’s phone-like design and Android interface. While it does have Wi-Fi and 5G, it doesn’t seem to support phone features like calls and SMS, and it’s unclear whether it even has a built-in mic. It’s still a perfectly usable data-only Android handheld even without XREAL Air glasses, but in that case, you’ll be missing out on what makes the device special. Global pre-orders for the XREAL Beam Pro start today at a surprisingly low $199 for the base 6GB RAM/128GB storage model.

The post XREAL Beam Pro is an Android mobile device for creating and enjoying AR content first appeared on Yanko Design.