Lumus got a major boost in brand recognition when one of its waveguides was selected for use in the Meta Ray-Ban Display glasses. But that already feels like old tech, because at CES 2026 the company showed off some of its latest components, and based on what I saw, they seem poised to seriously elevate the optical quality of the next wave of high-end smartglasses.

When the Meta Ray-Ban Display glasses came out, they wowed users as they were (and still are) one of a handful of smartglasses to feature a full-color in-lens display with at least a 20-degree field of view. But going by the specs on Lumus’ newest waveguides, we’re set for a major upgrade in terms of future capabilities.

The first model I tried featured Lumus’ optimized Z-30 waveguides, which not only offer a much wider 30-degree FOV but are also 30 percent lighter and 40 percent thinner than previous generations. On top of that, Lumus says they are more power efficient, with the waveguides capable of hitting more than 8,000 nits per watt. This is a big deal because smartglasses are currently quite limited by the size of the batteries they can use, especially if you want to make them small and light enough to wear all day. When I tried them on, I was dazzled by both the brightness and sharpness I saw from the Z-30s, despite them being limited to 720 x 720 resolution. Not only did the increase in FOV feel much larger than 10 degrees, but colors were also very rich, including white, which is often one of the most difficult shades to properly reproduce.

However, even after seeing how good that first model was, I was totally unprepared for Lumus’ 70-degree FOV waveguides. I was able to view some videos and a handful of test images, and I was completely blown away by how much area they covered. It was basically the entire center portion of the lens, with only small unused areas around the corners. And while I did notice some pincushion distortion along the sides of the waveguide’s display, a Lumus representative told me that it will be possible to correct for that in final retail units. But make no mistake, these waveguides undoubtedly produced some of the sharpest, brightest and best-looking optics I’ve seen from any smartglasses, from either retail models or prototypes. It almost made me question how much wider FOV these types of gadgets really need, though to be clear, I don’t think we’ve hit the point of diminishing returns yet.

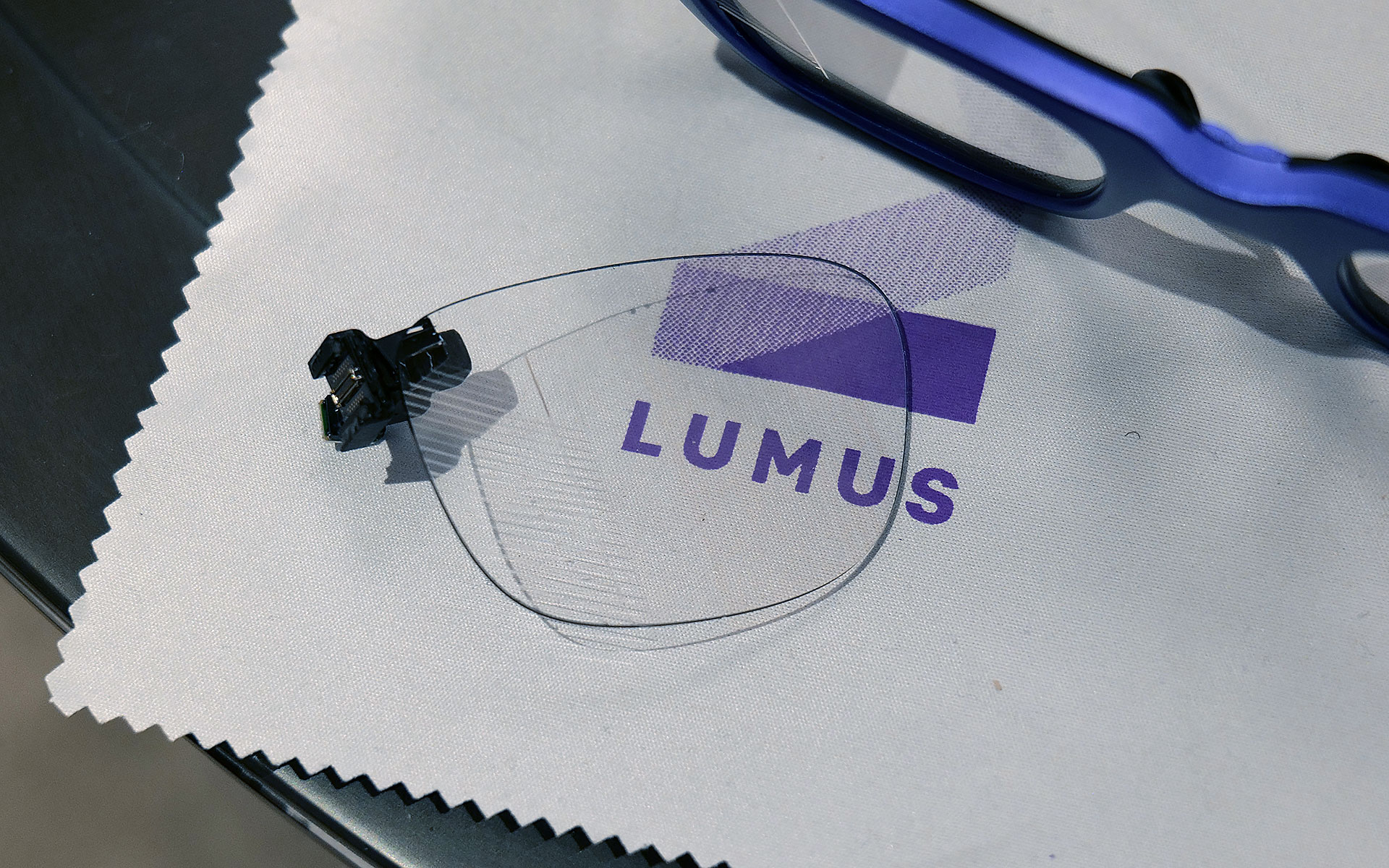

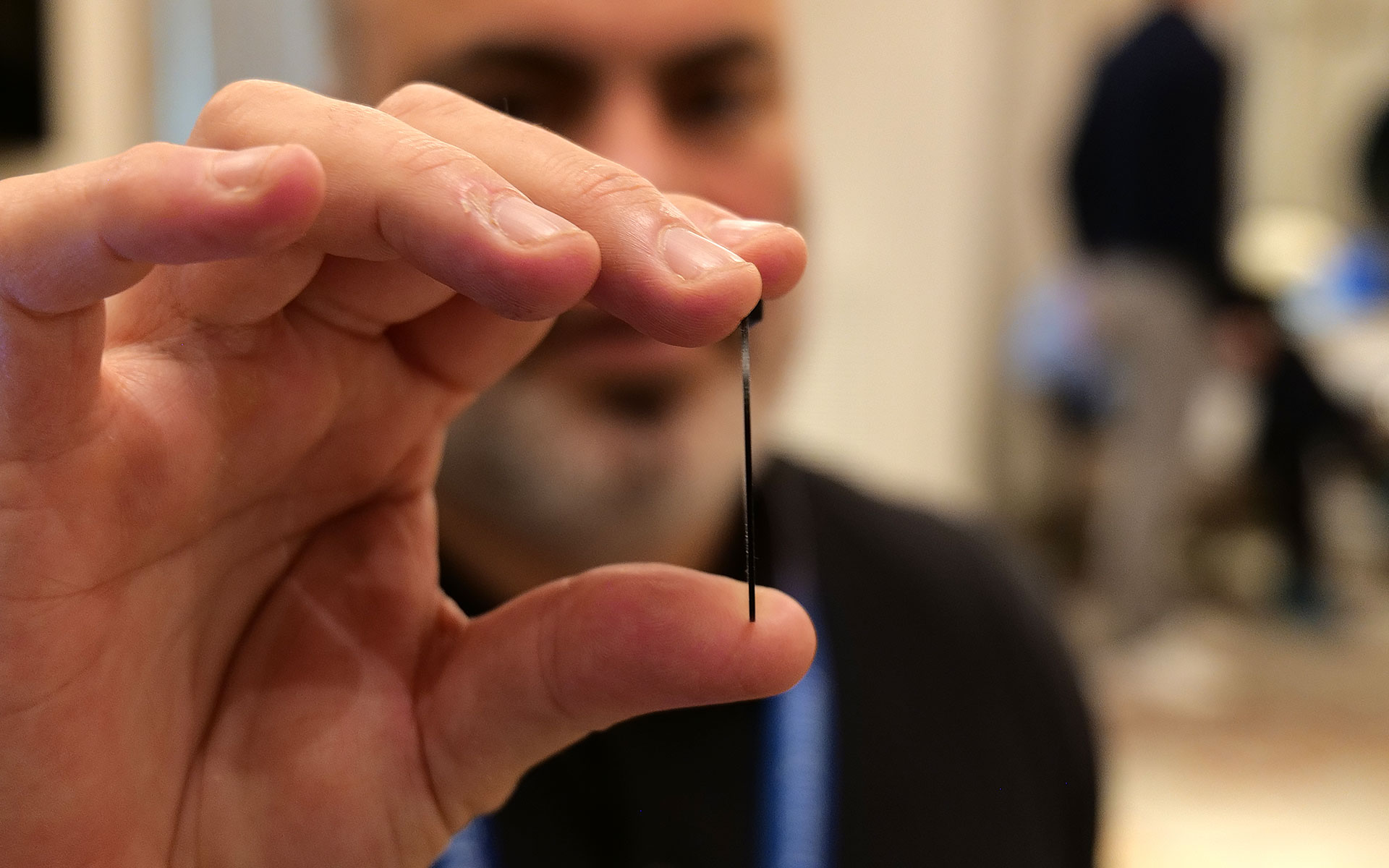

Other advantages of Lumus’ geometric reflective waveguides include better overall efficiency than their refractive counterparts, along with the ability to optically bond the displays to smartglasses lenses. That means that, unlike a lot of rivals, Lumus’ waveguides can be paired with transitions lenses instead of needing to resort to clip-on sunglass attachments when you go outside. Lumus also claims its designs simplify the manufacturing process, resulting in thinner waveguides (as small as 0.8mm) and generally higher yields.

Unfortunately, taking high-quality photos of content from smartglasses displays is incredibly challenging, especially when you’re using extremely delicate prototypes, so you’ll just have to take my word for it for now. But with Lumus in the process of ramping up production of its new waveguides with help from partners including Quanta and SCHOTT, it feels like there will be a ton of smartglasses makers clamoring for these components as momentum continues to build around the industry’s pick for the next “big” thing.