PROS:

- Impressive auto tracking and framing performance

- Good video quality for such a compact camera

- Accessible price tag for a professional tool

CONS:

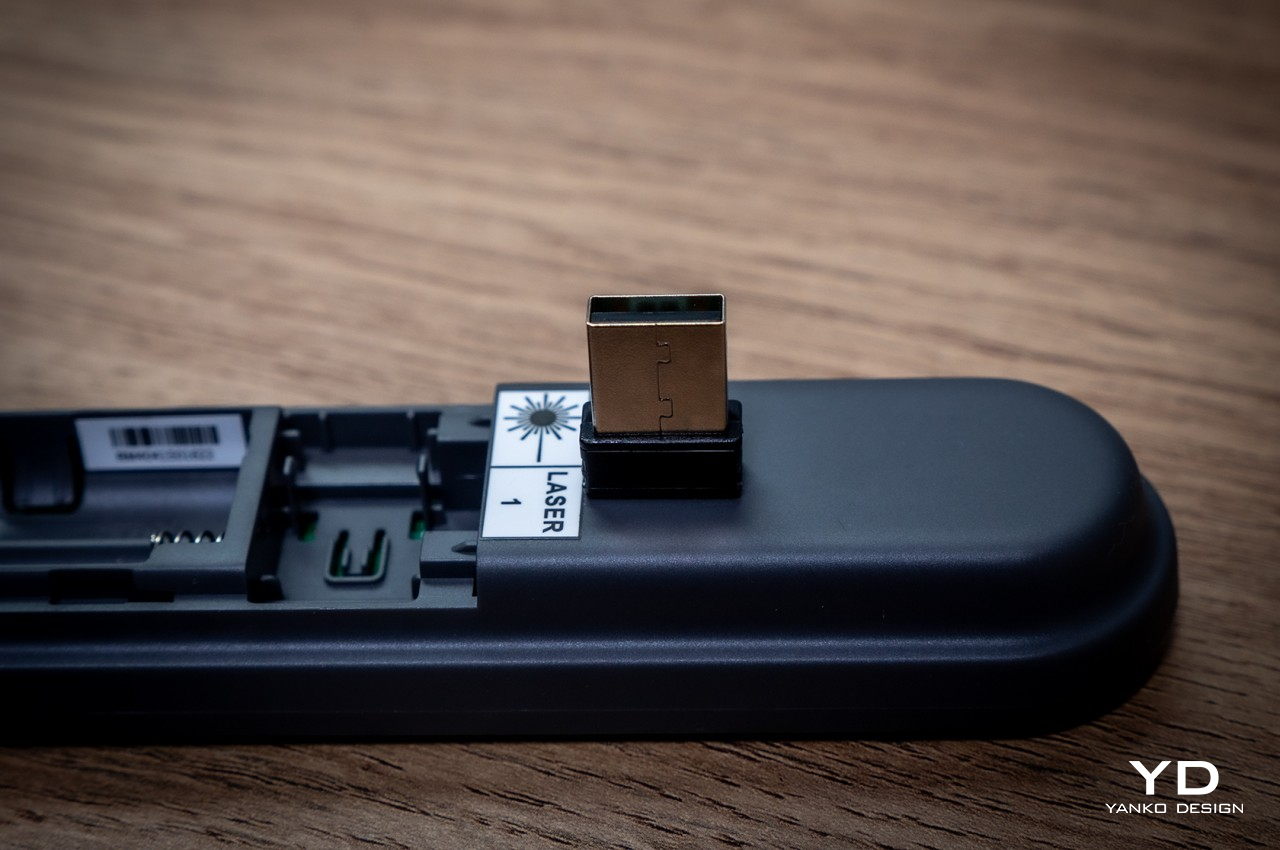

- Slower USB 2.0 connection

Video streaming has become its own entertainment category and industry, allowing almost anyone to reach millions of people around the world and maybe even make some money while doing so. The barrier to entry is quite low, at least when it comes to equipment: a smartphone or a computer with a webcam is enough to get started. As your content and your audience grow, however, you will eventually find yourself looking for tools made to support such activities, like a webcam that can help bring out the best in your videos and presentations. Of course, webcams are a dime a dozen, especially if you count the cheap and unsurprisingly basic options out there. You might think you need to spend big bucks for a really good webcam, but the new OBSBOT Tiny 2 Lite says otherwise. Compact yet packing quite a punch, the 4K PTZ webcam promises AI-powered features at an affordable price, which naturally piqued our curiosity to see if it’s really as good as it sounds.

Designer: OBSBOT

Aesthetics

Webcam designs are many and varied, and most of them are meant to sit on top of computer monitors or laptop lids for video chats. That often means relying on clips or, worse, adhesives, along with shapes designed to blend in with the monitor, which leads to rather uninspiring designs. It might be called a webcam, but the OBSBOT Tiny 2 Lite is really a versatile gimbal camera that can be used for almost any purpose and in almost any setting, as long as it’s connected to a computer, of course.

As such, it’s no surprise that the OBSBOT Tiny 2 Lite looks more like one of those gimbal cameras than a webcam. It’s a PTZ or “pan, tilt, zoom” camera, after all, and its base and arm move the camera as needed. Yes, that means you don’t have to position the camera yourself, but more on that later. As a result, this webcam hardly looks like a webcam at all. Its rounded square base and the square camera hanging from its arm make it resemble a miniature professional video camera, a comparison that goes more than skin deep.

All in all, the OBSBOT Tiny 2 Lite has a compact and minimalist design that is distinctive but not distracting. It’s small enough to take with you anywhere your laptop and your work need to go, while still packing quite a collection of powerful features. The camera itself barely has any physical controls, creating a clean and professional-looking aesthetic. That means you’ll have to rely on indirect methods of control, like the OBSBOT App, hand gestures, or the optional remote control.

Ergonomics

You won’t be holding the OBSBOT Tiny 2 Lite in your hand, not unless you put it on a selfie stick or handle. It’s meant to mount on top of a monitor, stand on a desk, or attach to a tripod, and the camera’s design supports all three. Rather than relying on a separate clip that you might lose, the Tiny 2 Lite features a built-in stand that unfolds from the bottom, forming a simple cantilever-like mechanism that relies on gravity to hold onto the top of a computer screen. It is, however, a very simple mechanism, and it might struggle with older, thicker monitors as well as very slim laptop lids.

When in use, you won’t be touching the camera directly either, since it has no buttons at all; the only physical interaction is tilting the camera down to activate its privacy mode. Your primary control method will be the computer app that configures the camera’s settings, but OBSBOT really wants you to rely on automatic operations powered by its AI. For more precise control from a distance, you might prefer to spend an extra $49 on the optional remote control, which doubles as a presentation clicker. Depending on your workflow, you might find this absence of direct controls liberating or a hassle.

Performance

OBSBOT made a name for itself with 4K webcams packed into tiny designs, and the Tiny 2 Lite is no different. What is different, however, is that it includes only the hardware and features that deliver the best possible experience without asking too much of the consumer’s wallet. For example, the 1/2-inch CMOS sensor is quite capable, enabling 4K 30fps as well as 1080p 60fps video recording with crisp and clear details. It supports HDR, though not the PixGain HDR that the more expensive non-Lite OBSBOT Tiny 2 boasts, and it has only a single ISO for all kinds of lighting conditions.

While the video quality that the Tiny 2 Lite produces is already good, the camera’s real selling point is its intelligent hands-free control. This newer model leverages plenty of AI so that you can leave it to decide what it thinks is the best shot, whether that’s zooming in close or pulling back for a wider shot. The camera tracks you as you move around, making presentations and demonstrations look more dynamic and natural. It also supports auto framing, panning or zooming to adjust to the number of people coming in and out of view. If you need more direct control, you don’t have to reach for the remote; you can simply use hand gestures to adjust the camera to your liking. The camera’s movement is smooth and quick, keeping up with you as if a human operator were behind it.

For all the AI features OBSBOT crammed into such a small and accessible device, it also had to leave out quite a few that you’d find on the OBSBOT Tiny 2. It doesn’t have voice control, for example, which might actually be a good thing for privacy-conscious users. Neither does it have a desk mode, where the camera swings down to capture, rotate, and frame what you’re doing on the desk, such as your notes or instructions for some process. The biggest “downgrade,” however, is its slower USB 2.0 connection, a decision that’s sure to become a bottleneck when you need fast video transfers from camera to computer. Fortunately, most of these features can be considered “extras” from a content creator’s point of view, allowing the OBSBOT Tiny 2 Lite to still deliver solid performance at almost half the price of its older sibling.

Sustainability

One reason webcams are so ubiquitous is how easy and cheap it is to get the materials needed to make them. That usually means a lot of plastic, which is admittedly lighter and more resilient than a premium but hefty aluminum chassis. Unfortunately, that doesn’t bode well for the sustainability of these products, especially the ones that look and feel cheap and are more likely to be thrown out the moment they start malfunctioning.

The OBSBOT Tiny 2 Lite thankfully neither looks cheap nor feels like a throwaway product, but it’s still not something that will last you a long time if you aren’t careful. You won’t want to take it on daring adventures, especially in extreme conditions. This isn’t an action cam anyway, but it could still handle some outdoor streaming if the weather allows it.

Value

OBSBOT launched the Tiny 2 last year to much applause for the wide array of smart features packed into a compact and stylish design. The one complaint has been its rather steep price tag, and the new Tiny 2 Lite finally addresses that. For only $179, it packs in many of those AI features, particularly the core functions that truly define the Tiny 2. Naturally, it had to leave some out, but did OBSBOT cut too much?

That’s hardly the case, as the Tiny 2 Lite delivers a solid PTZ webcam experience. Admittedly, the price tag might still look a bit high, and not all of its features will appeal to everyone who needs a webcam. Those who mainly use webcams for meetings will find little reason to spend more on such a camera, though there are options like sleep mode for those times when you need to briefly step away from a meeting.

Verdict

Being stuck at home doing video meetings and chats made us realize how little webcams had evolved since the early 2000s. A whole new crop of more powerful cameras has grown out of this need, some going beyond just making you look presentable for a meeting. The OBSBOT Tiny 2 Lite is designed for budding creators who need to focus on the content they’re recording instead of fiddling with camera controls. More than just high-quality 4K video, this small yet powerful PTZ webcam leverages AI to do the heavy lifting of framing the perfect shot, so you can captivate your audience, clearly get your point across, or simply have fun. Best of all, you won’t have to break the bank to get your hands on a tool that looks so simple yet packs quite a punch, helping you look professional in any video.

The post OBSBOT Tiny 2 Lite 4K PTZ Webcam Review: Budget-Friendly AI Camera Crew first appeared on Yanko Design.