Merriam-Webster just named “slop” its word of the year, defining it as “digital content of low quality that is produced usually in quantity by means of artificial intelligence.” The choice is blunt, almost mocking, and it captures something that has been building for months: a collective exhaustion with AI hype that promises intelligence but delivers mediocrity. Over the past three months, that exhaustion has started bleeding into Wall Street. Investors, analysts, and even CEOs of AI companies themselves have been openly questioning whether we are living through an AI bubble. OpenAI’s Sam Altman warned in August that investors are “overexcited about AI,” and Google’s Sundar Pichai admitted to “elements of irrationality” in the sector. The tech industry is pouring trillions into AI infrastructure while revenues lag far behind, raising fears of a dot-com-style correction that could rattle the entire economy.

CES 2026 is going to be ground zero for this tension. Every booth will have an “AI-powered” sticker on something, and a lot of those products will be genuine innovations built on real on-device intelligence and agentic workflows. But a lot of them will also be slop: rebranded features, cloud-dependent gimmicks, and shallow marketing plays designed to ride the hype wave before it crashes. If you are walking the show floor or reading coverage from home, knowing how to separate real AI from fake AI is not just a consumer protection issue anymore. It is a survival skill for navigating a market that feeds on confusion and on a general lack of awareness about what artificial intelligence actually is.

1. If it goes offline and stops working, it was never really AI

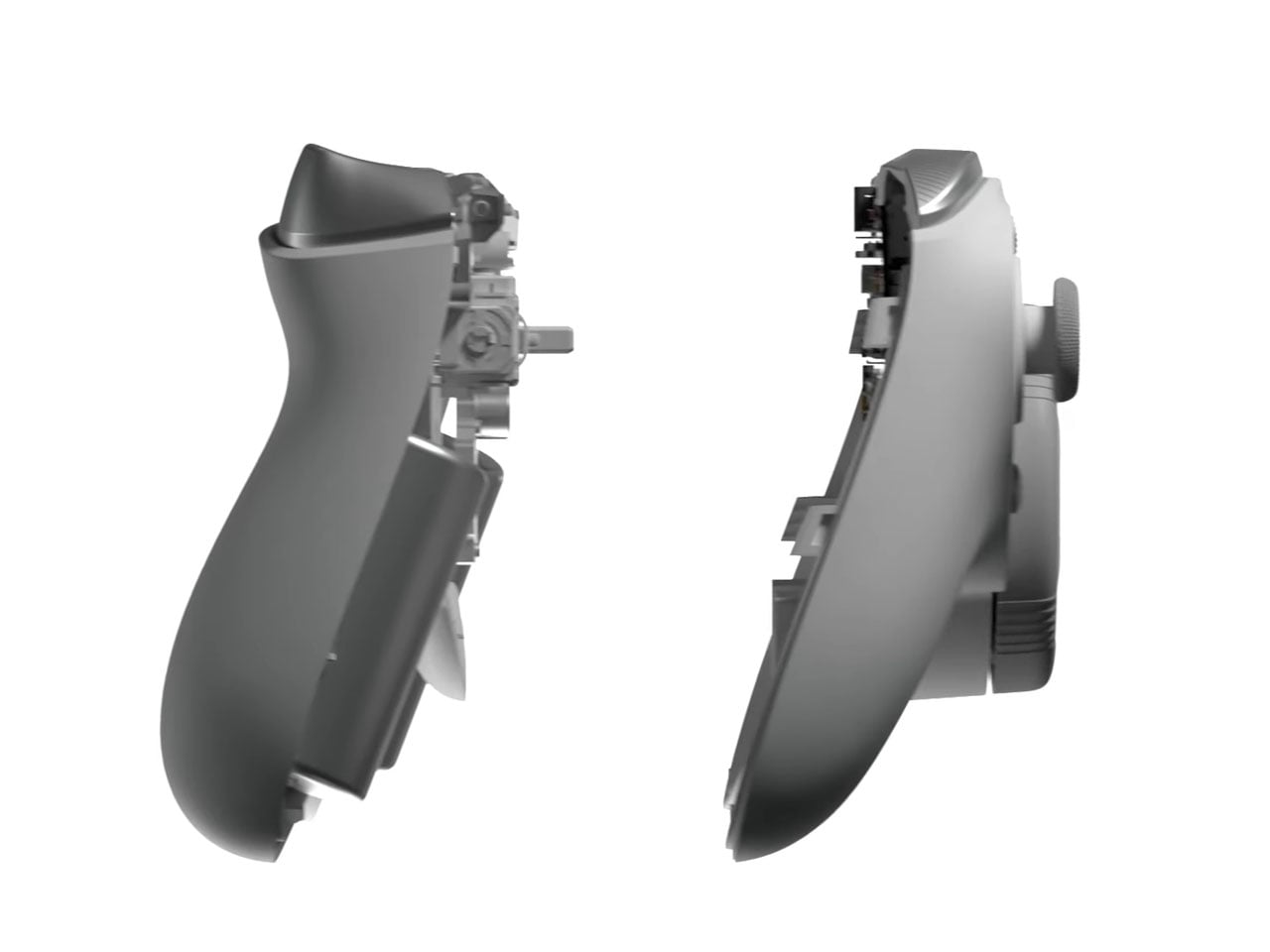

The simplest test for fake AI is also the most reliable: ask what happens when the internet connection drops. Real AI that lives on your device will keep functioning because the processing is happening locally, using dedicated chips and models stored in the gadget itself. Fake AI is just a thin client that calls a cloud API, and the moment your Wi-Fi cuts out, the “intelligence” disappears with it.

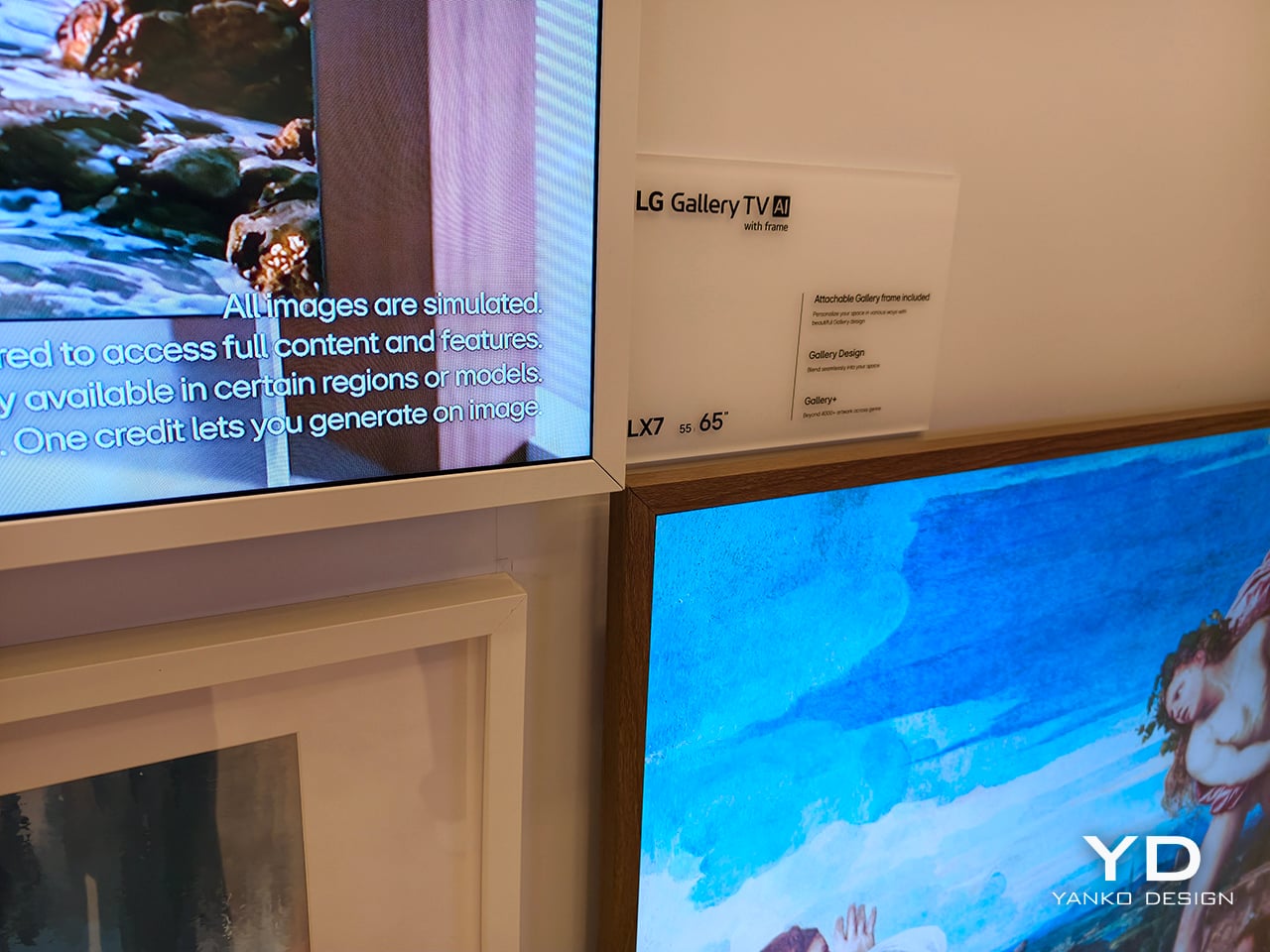

Picture a laptop at CES 2026 that claims to have an AI writing assistant. If that assistant can still summarize documents, rewrite paragraphs, and handle live transcription when you are on a plane with no internet, you are looking at real on-device AI. If it gives you an error message the second you disconnect, it is cloud-dependent marketing wrapped in an “AI PC” label. The same logic applies to TVs, smart home devices, robot vacuums, and wearables. Genuine AI products are designed to think locally, with cloud connectivity as an optional boost rather than a lifeline.
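
For readers who want to see what “cloud as an optional boost rather than a lifeline” might look like in software, here is a minimal Python sketch. It is an illustration under stated assumptions, not any vendor's actual design: the function names (`summarize`, `cloud_only_summarize`) and the two toy “models” are hypothetical stand-ins invented for this example.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Result:
    text: str
    source: str  # "local" or "cloud"


def summarize(
    document: str,
    local_model: Callable[[str], str],
    cloud_model: Optional[Callable[[str], str]] = None,
    online: bool = False,
) -> Result:
    """Local-first design: the cloud is used when available, never required."""
    if online and cloud_model is not None:
        try:
            return Result(cloud_model(document), "cloud")
        except ConnectionError:
            pass  # connection dropped mid-request: fall back to the device
    return Result(local_model(document), "local")


def cloud_only_summarize(
    document: str, cloud_model: Callable[[str], str], online: bool
) -> Result:
    """Thin-client design: no network means no feature."""
    if not online:
        raise ConnectionError("AI features require an internet connection")
    return Result(cloud_model(document), "cloud")


# Hypothetical stand-ins for real models, for illustration only.
def tiny_local_model(doc: str) -> str:
    # Pretend "summary": keep just the first sentence.
    return doc.split(".")[0].strip() + "."


def big_cloud_model(doc: str) -> str:
    return "Cloud summary: " + doc[:40]


if __name__ == "__main__":
    text = "The flight is delayed. Boarding starts at noon."
    print(summarize(text, tiny_local_model, big_cloud_model, online=False))
```

In airplane mode, `summarize` still returns an answer from the on-device model, while `cloud_only_summarize` raises an error. That is the behavior the disconnect test is meant to expose on the show floor.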

The distinction matters because on-device AI is expensive to build. It requires new silicon, tighter integration between hardware and software, and real engineering effort. Companies that invested in that infrastructure will want you to know it works offline because that is their competitive edge. Companies that skipped that step will either avoid the question or bury it in fine print. At CES 2026, press the demo staff on this: disconnect the device from the network and see if the AI features still run. If they do not, you just saved yourself from buying rebranded cloud software in a shiny box.

If your robot vacuum has Microsoft Copilot, run!

2. If it’s just a chatbot, it isn’t AI… it’s GPT Customer Care

The laziest fake AI move at CES 2026 will be products that open a chat window, let you type questions, and call that an AI feature. A chatbot is not product intelligence. It is a wrapper around a generic language model that any company can license from OpenAI, Anthropic, or Google in about a week, slap its logo on, and call innovation. If the only AI interaction your gadget offers is typing into a text box and getting conversational responses, you are not looking at an AI product. You are looking at customer service automation dressed up as a feature.

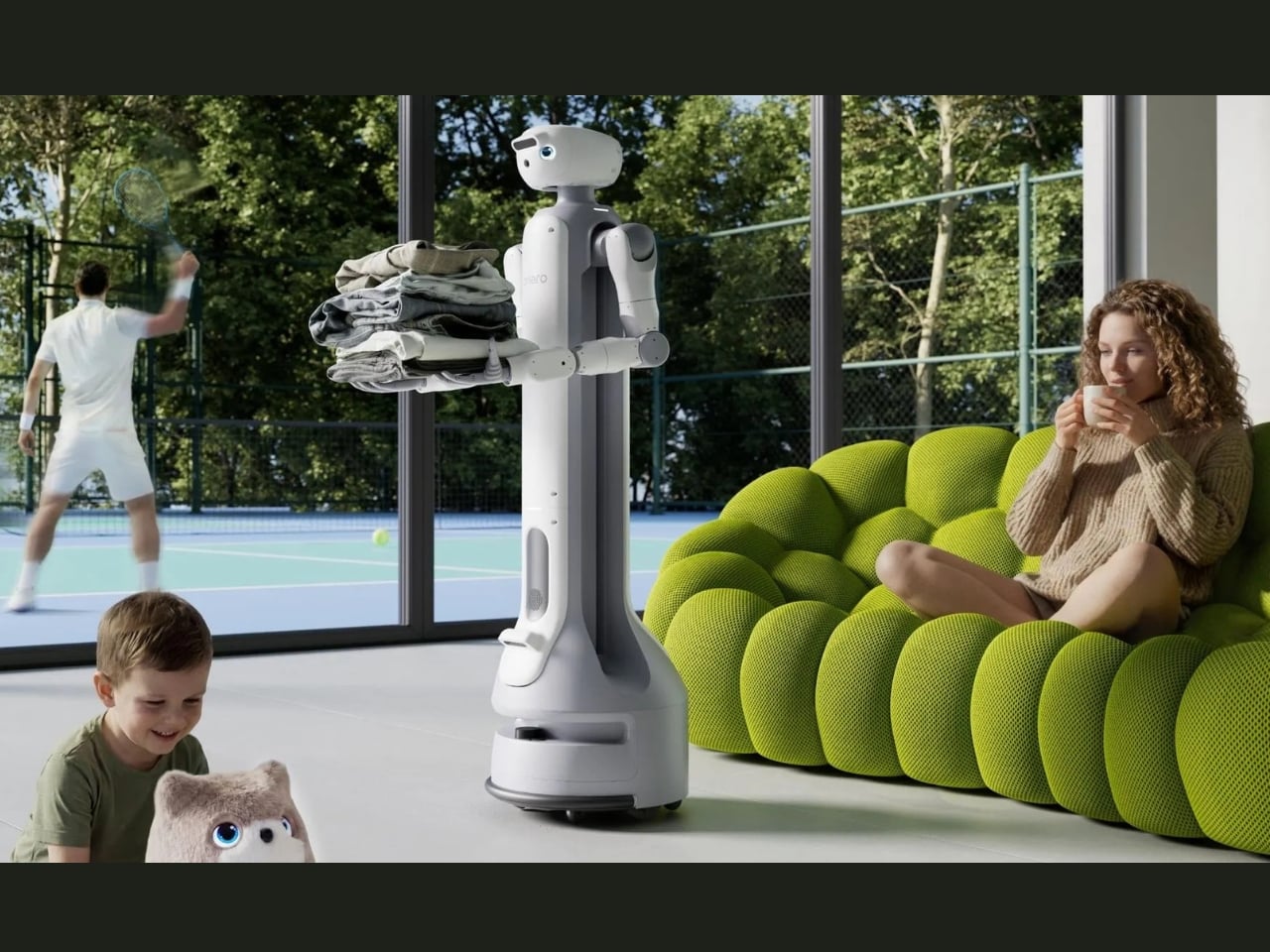

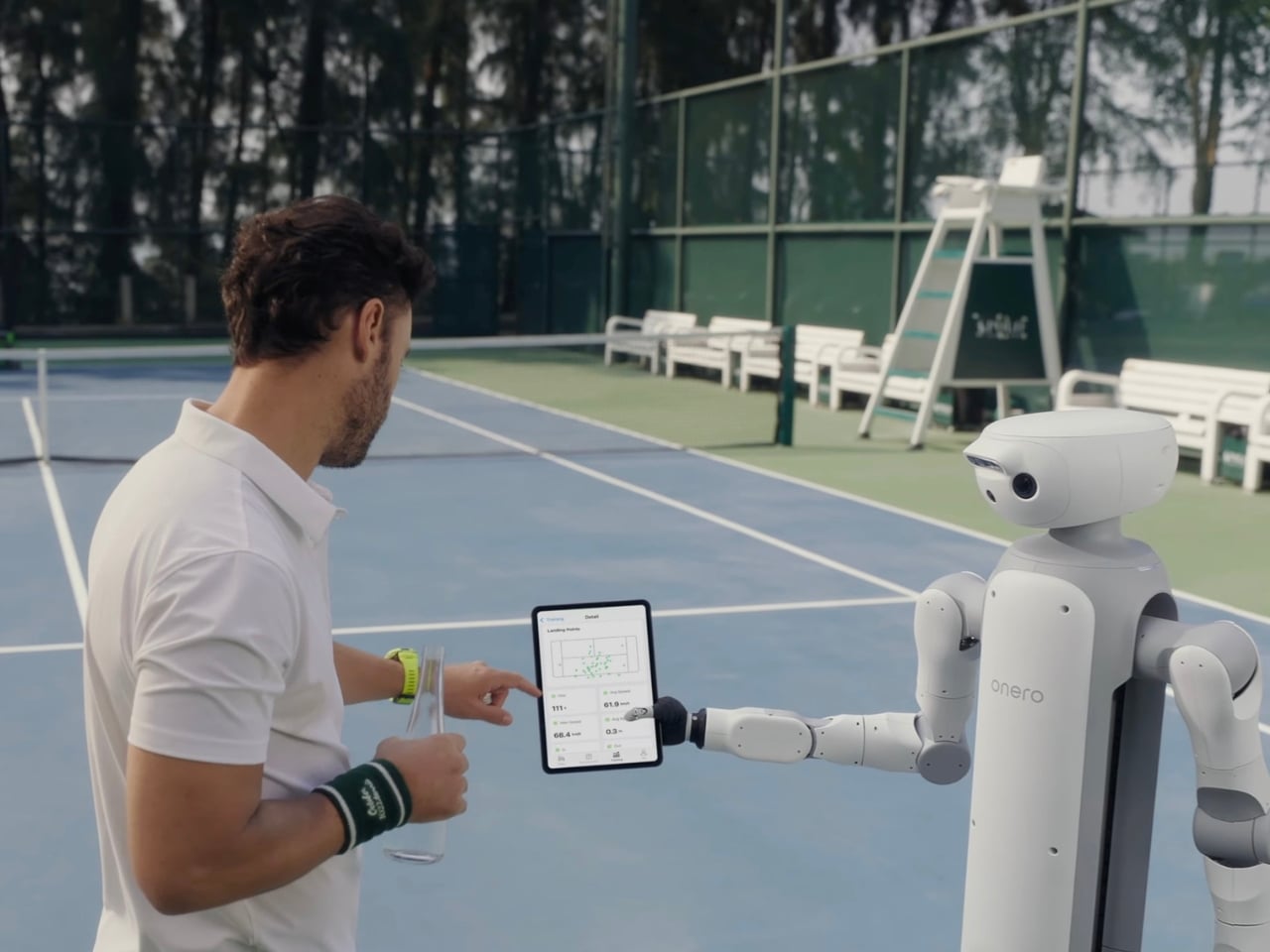

Real AI is embedded in how the product works. It is the robot vacuum that maps your home, decides which rooms need more attention, and schedules itself around your routine without you opening an app. It is the laptop that watches what you do, learns your workflow, and starts suggesting shortcuts or automating repetitive tasks before you ask. It is the TV that notices you always pause shows when your smart doorbell rings and starts doing it automatically. None of that requires a chat interface because the intelligence is baked into the behavior of the device itself, not bolted on as a separate conversation layer.

If a company demo at CES 2026 starts with “just ask it anything,” probe deeper. Can it take actions across the system, or does it just answer questions? Does it learn from how you use the product, or does it give the same canned responses to everyone? Is the chat interface the only way to interact with the AI, or does the product also make smart decisions in the background without prompting? A chatbot can be useful, but it is table stakes now, not a differentiator. If that is the whole AI story, the company did not build AI into its product. It rented a language model and hoped you would not notice.

3. If the AI only does one narrow thing, it is probably just a renamed preset

Another red flag is when a product’s AI feature is weirdly specific and cannot generalize beyond a single task. A TV that has “AI motion smoothing” but no other intelligent behavior is not running a real AI model; it is running the same interpolation algorithm TVs have had for years, now rebranded with an AI label. A camera that has “AI portrait mode” but cannot recognize anything else is likely just using a basic depth sensor and calling it artificial intelligence. Real AI, especially the kind built into modern chips and operating systems, is designed to generalize across tasks: it can recognize objects, understand context, predict user intent, and coordinate with other devices.

Ask yourself: does this product’s AI learn, adapt, or handle multiple scenarios, or does it just trigger a preset when you press a button? If it is the latter, you are looking at a marketing gimmick. Fake AI products love to hide behind phrases like “AI-enhanced” or “AI-optimized,” which sound impressive but are deliberately vague. Real AI products will tell you exactly what the system is doing: “on-device object recognition,” “local natural language processing,” “agentic task coordination.” Specificity is a sign of substance. Vagueness is a sign of slop.

The other giveaway is whether the AI improves over time. Genuine AI systems get smarter as they process more data and learn from user behavior, often through firmware updates that improve the underlying models. Fake AI products ship with a fixed set of presets and never change. At CES 2026, ask demo reps if the product’s AI will improve after launch, how updates work, and whether the intelligence adapts to individual users. If they cannot give you a clear answer, you are looking at a one-time software trick masquerading as artificial intelligence.

Don’t fall for ‘AI Enhancement’ presets or buttons that don’t do anything related to AI.

4. If the company cannot explain what the AI actually does, walk away

Fake AI thrives on ambiguity. Companies that bolt a chatbot onto a product and call it AI-powered know they do not have a real differentiator, so they lean into buzzwords and avoid specifics. Real AI companies, by contrast, will happily explain what their models do, where the processing happens, and what problems the AI solves that the previous generation could not. If a booth rep at CES 2026 gives you vague non-answers like “it uses machine learning to optimize performance” without defining what gets optimized or how, that is a warning sign.

Push for concrete examples. If a smart home hub claims to have AI coordination, ask: what decisions does it make on its own, and what still requires manual setup? If a wearable says it has AI health coaching, ask: is the analysis happening on the device or in the cloud, and can it work offline while hiking in the wilderness? If a laptop advertises an AI assistant, ask: what can it do without an internet connection, and can it take actions in other apps, as an agentic assistant would, or does it just sit in a sidebar? Companies with real AI will have detailed, confident answers because they built the system from the ground up. Companies with fake AI will deflect, generalize, or change the subject.

The other test is whether the AI claim matches the price and the hardware. If a $200 gadget promises the same on-device AI capabilities as a $1,500 laptop with a dedicated neural processing unit, somebody is lying. Real AI requires real silicon, and that silicon costs money. Budget products can absolutely have useful AI features, but they will typically offload more work to the cloud or use simpler models. If the pricing does not line up with the technical claims, it is worth being skeptical. At CES 2026, ask what chip is powering the AI, whether it has a dedicated NPU, and how much of the intelligence is local versus cloud-based. If they cannot or will not tell you, that is your cue to move on.

5. Check if the AI plays well with others, or if it lives in a silo

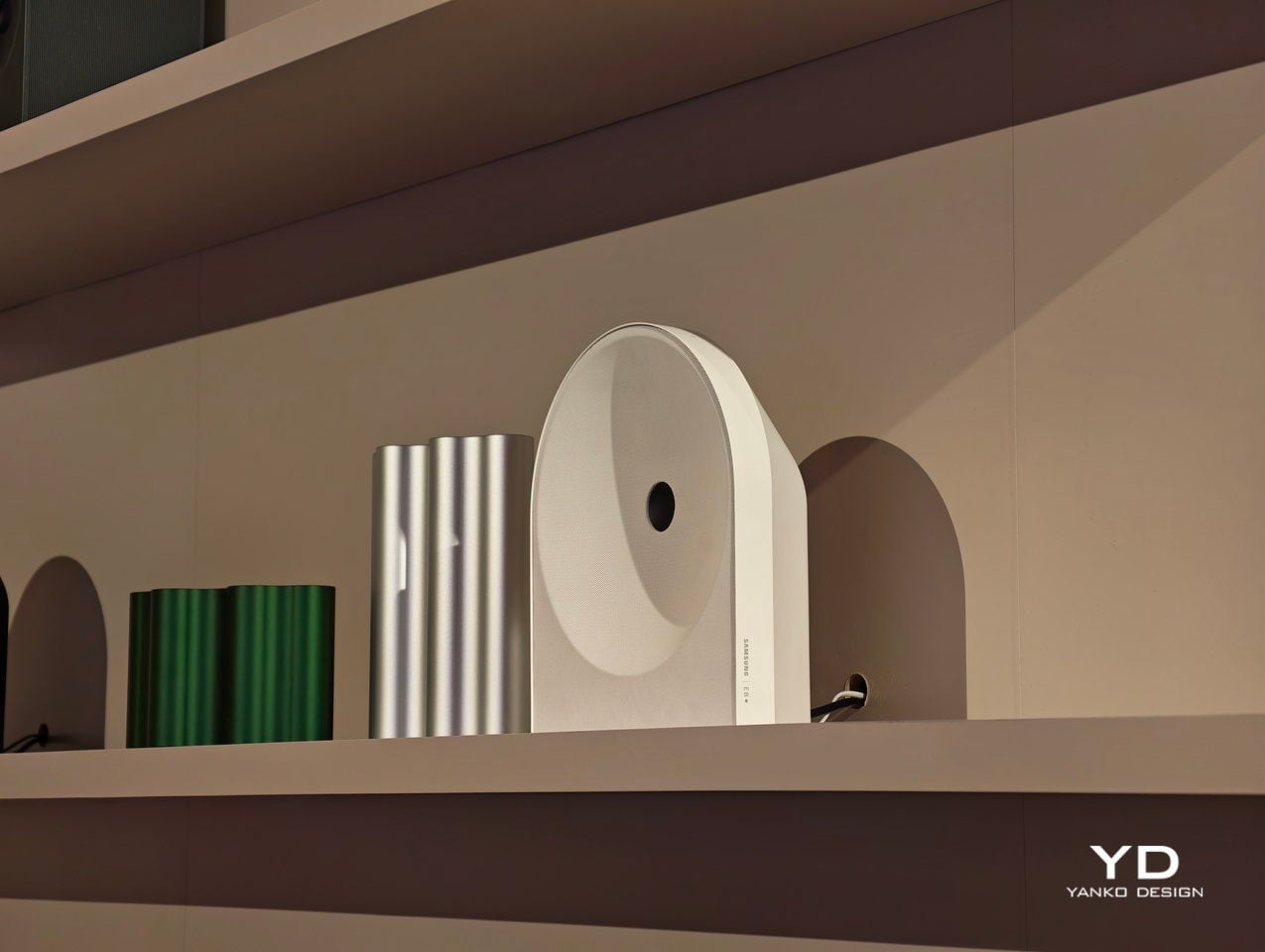

One of the clearest differences between real agentic AI and fake “AI inside” products is interoperability. Genuine AI systems are designed to coordinate with other devices, share context, and act on your behalf across an ecosystem. Fake AI products exist in isolation: they have a chatbot you can talk to, but it does not connect to anything else, and it cannot take actions beyond its own narrow interface. Samsung’s CES 2026 exhibit is explicitly built around AI and interoperability, with appliances, TVs, and smart home products all coordinated by a shared AI layer. That is what real agentic AI looks like: the fridge, washer, vacuum, and thermostat all understand context and can make decisions together without you micromanaging each one. Fake AI, by contrast, gives you five isolated apps with five separate chatbots, none of which talk to each other. If a product at CES 2026 claims to have AI but cannot integrate with the rest of your smart home, car, or workflow, it is not delivering the core promise of agentic systems.

Ask demo reps: does this work with other brands, or only within your ecosystem? Can it trigger actions in other apps or devices, or does it just respond to questions? Does it understand my preferences across multiple products, or does each device start from scratch? Companies that built real AI ecosystems will brag about cross-device coordination because it is hard to pull off and it is the whole point. Companies selling fake AI will either avoid the topic or try to upsell you on buying everything from them, which is a sign they do not have real interoperability.

6. When in doubt, look for the slop

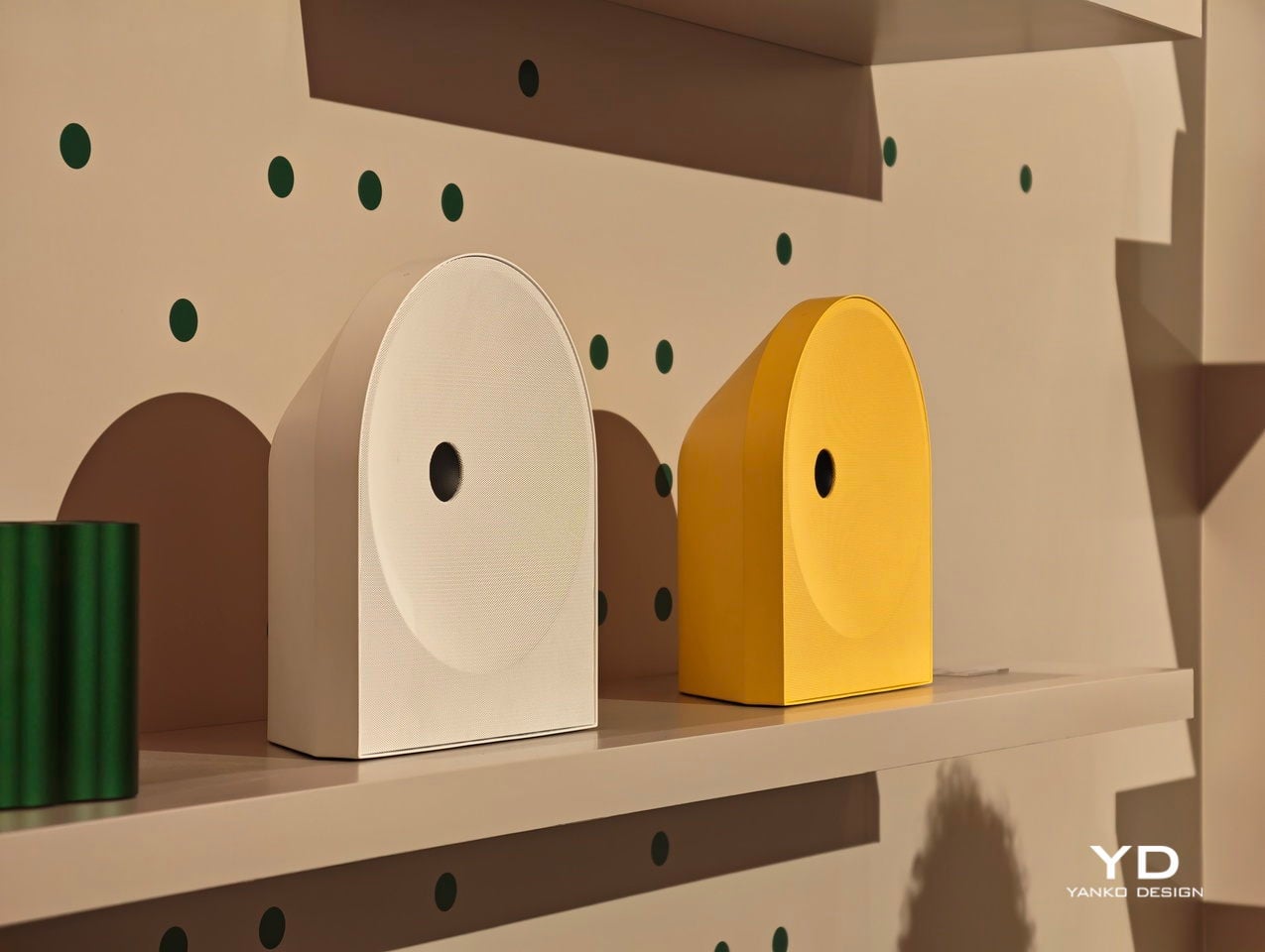

The rise of AI-generated “slop” gives you a shortcut for spotting lazy AI products: if the marketing materials, product images, or demo videos look AI-generated and low-effort, the product itself is probably shallow too. Merriam-Webster defines slop as low-quality digital content produced in quantity by AI, and it has flooded everything from social media to advertising to product launches. Brands that cut corners on their own marketing by using obviously AI-generated visuals are signaling that they also cut corners on the actual product development.

Watch for telltale signs: weird proportions in product photos, uncanny facial expressions in lifestyle shots, text that sounds generic and buzzword-heavy with no real specifics, and claims that are too good to be true with no technical backing. Real AI products are built by companies that care about craft, and that care shows up in how they present the product. Fake AI products are built by companies chasing a trend, and the slop in their marketing is the giveaway. At CES 2026, trust your instincts: if the booth, the video, or the pitch feels hollow and mass-produced, the gadget probably is too.

The post How to Spot Fake AI Products at CES 2026 Before You Buy first appeared on Yanko Design.