Snow White’s stepmother would have a hard time finding out who’s the fairest of them all, as everyone’s reflection in this Kinect-powered mirror looks equally fluffy.

New York artist Daniel Rozin seems to have developed an obsession with mirrors, considering that most of his portfolio focuses on them. His latest creation, simply titled PomPom Mirror, might convince you that Rozin is not a big fan of sharpness. The contraption uses a Kinect sensor, motors and plenty of faux fur pom poms to create fluffy reflections of whoever stands in front of it.

Obviously, the effect couldn’t have been achieved with a small number of faux fur pom poms and motors. With that in mind, Rozin employed 464 motors that turn 928 spherical puffs from beige to black depending on what motion is captured by the Kinect sensor.

People’s reaction to this concept has been largely positive, with some even saying they would like to sleep on the mirror. The surface is far too small for that, but maybe that’s what people should suggest Rozin try in his future projects: a full-length mirror that doubles as a fluffy mattress when not in use. After all, the ladies may want to admire their dresses, and the current PomPom Mirror is not tall enough to show them in full.

The Kinect sensor tracks the people standing in front of the mirror and sends the data to a microcontroller that, in turn, drives the motors to change the colors. The reflection appears in real time, but don’t expect instant reactions. After all, something as fluffy as this mirror shouldn’t make sudden moves, as they would contradict the whole concept. One might argue that the fluffy mirror has a life of its own, and judging by its appearance and motion, you wouldn’t be far from the truth.
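To make the sensor-to-motor pipeline described above a little more concrete, here is a minimal Python sketch of how a silhouette from a depth camera could be turned into one beige/black state per motor. It is not Rozin’s code. The 16 x 29 grid (chosen only because it multiplies out to the article’s 464 motors), the 50% coverage threshold and the per-tick flip limit used to mimic the unhurried motion are all hypothetical assumptions, and the silhouette is assumed to arrive as a plain list of 0/1 rows rather than from any real Kinect API.

```python
# Hypothetical sketch, not the artist's implementation: converts a binary
# silhouette mask into one on/off state per motor (True = black, False = beige).
# Grid size, threshold, and flip limit are illustrative assumptions.
from typing import List

ROWS, COLS = 16, 29  # assumed layout; 16 * 29 = 464 motors, as in the article
THRESHOLD = 0.5      # assumed: a cell turns black if >= 50% of it is "person"


def downsample(mask: List[List[int]], rows: int = ROWS, cols: int = COLS) -> List[List[float]]:
    """Average a 0/1 mask (1 = person, 0 = background) into rows x cols cells."""
    h, w = len(mask), len(mask[0])
    grid = []
    for r in range(rows):
        r0, r1 = r * h // rows, (r + 1) * h // rows
        row = []
        for c in range(cols):
            c0, c1 = c * w // cols, (c + 1) * w // cols
            pixels = [mask[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            # Empty cells (mask smaller than the grid) count as background.
            row.append(sum(pixels) / len(pixels) if pixels else 0.0)
        grid.append(row)
    return grid


def to_targets(mask: List[List[int]]) -> List[List[bool]]:
    """Decide the desired color shown by each motor (True = black)."""
    return [[cov >= THRESHOLD for cov in row] for row in downsample(mask)]


def step_toward(current: List[List[bool]], target: List[List[bool]], max_flips: int) -> List[List[bool]]:
    """Return a copy of current with at most max_flips mismatched motors flipped."""
    new = [row[:] for row in current]
    flips = 0
    for r, target_row in enumerate(target):
        for c, want in enumerate(target_row):
            if flips >= max_flips:
                return new
            if new[r][c] != want:
                new[r][c] = want
                flips += 1
    return new


if __name__ == "__main__":
    # Fake 48 x 87 frame: a "person" occupying roughly the left half of the view.
    frame = [[1 if x < 43 else 0 for x in range(87)] for _ in range(48)]
    target = to_targets(frame)
    state = [[False] * COLS for _ in range(ROWS)]
    ticks = 0
    while state != target:
        state = step_toward(state, target, max_flips=50)
        ticks += 1
    print(f"reached target after {ticks} ticks")
```

Calling `step_toward` once per controller tick lets the pom poms catch up with the target image over several frames instead of all at once, which is one plausible way to get the gradual, non-instant transitions the article describes.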

In case the above video isn’t enough for you and you happen to be in the Big Apple these days, don’t hesitate to visit the Descent with Modification exhibition. Rozin’s PomPom Mirror is currently on display there, and any visitor can try it out. The exhibition runs through July 1, 2015, so don’t miss your chance to witness a truly unique form of art. Unique and fluffy, that is.

Be social! Follow Walyou on Facebook and Twitter, and read more related stories about the depth-sensing cameras on Kinect v2, or how Disney Aireal enhances Kinect gaming with tactile feedback.

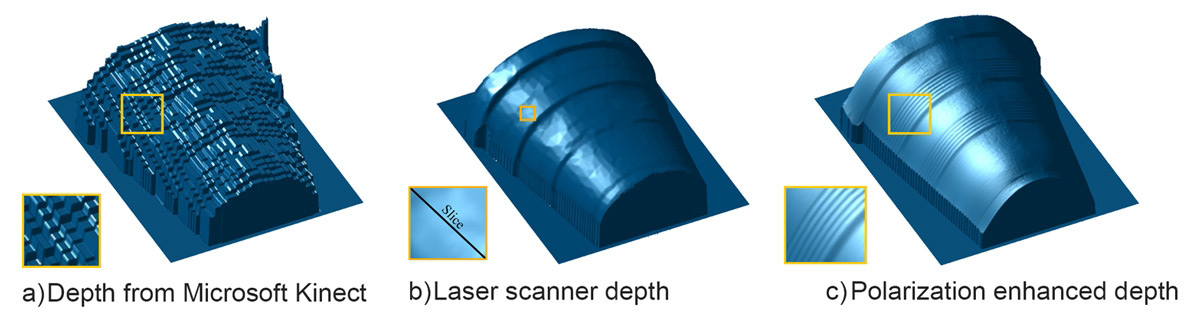

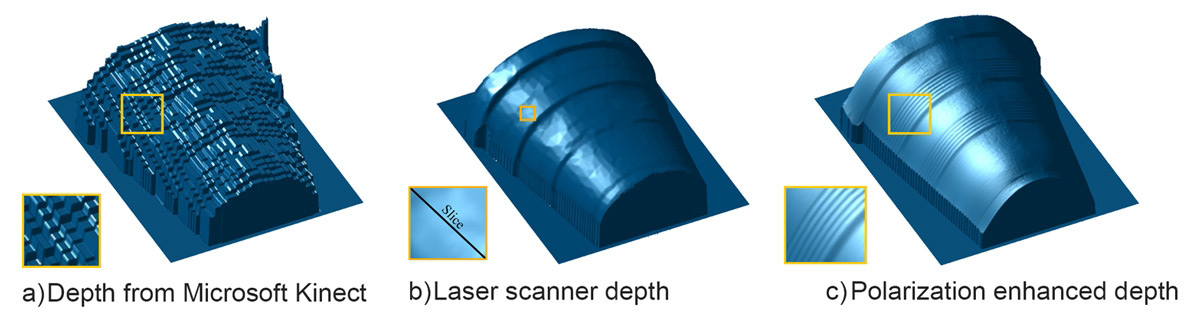

Many 3D cameras and scanners produce rough images, especially as they get smaller and cheaper. You often need a big laser scanner just to get reasonably accurate results. If MIT researchers have their way, though, even your smartphone could capture...

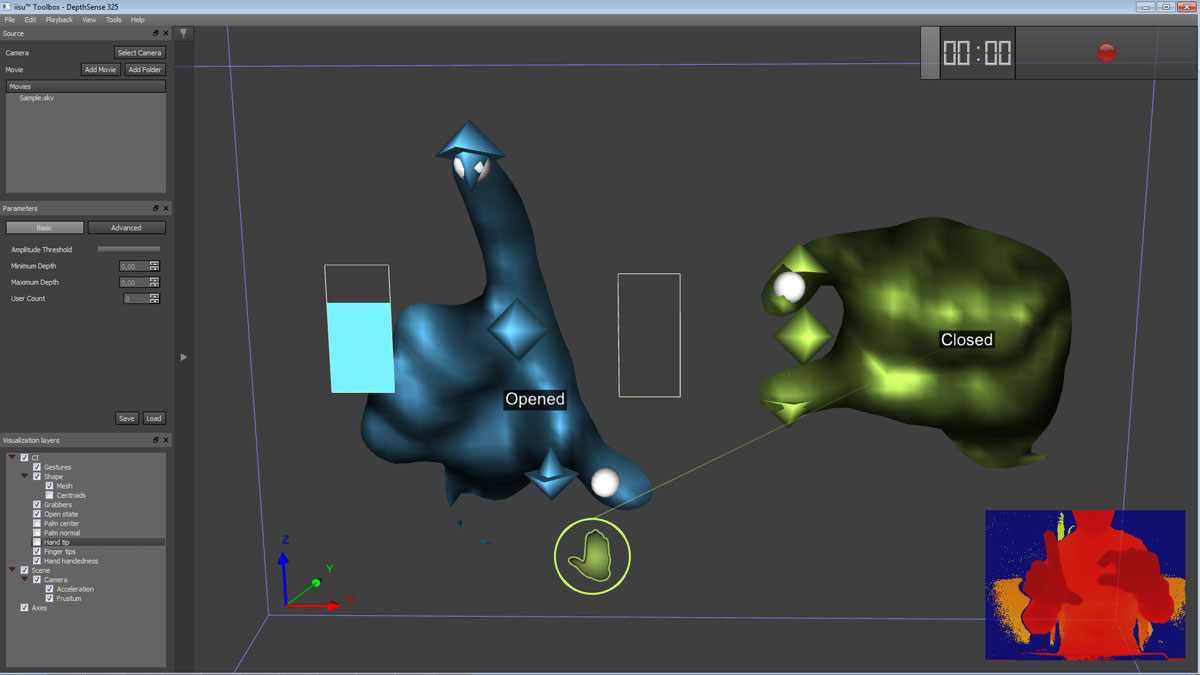

You don't want to stand in front of an X-ray machine for any longer than necessary, and scientists have found a clever way to make that happen: the Kinect sensor you might have picked up with your Xbox. Their technique has the depth-sensing camera m...

After months of teasing, beta testing and announcements, the Xbox One's big update for Windows 10, an all-new UI and backwards compatibility with some Xbox 360 games will arrive tomorrow. According to Major Nelson, the new software will start rolli...

When the New Xbox One Experience hits consoles on November 12th, it will remove Kinect gestures from the dashboard entirely, platform head Mike Ybarra confirmed to Windows Central. The update is poised to be massive, overhauling most of the Xbox On...

With the holiday season just around the corner, Microsoft is looking to capitalize on Xbox One sales over the next couple of months. As such, the company has announced a limited-time deal for the kit that includes its latest console and companion m...

Sony has purchased SoftKinetic, a Belgian startup that's most famous for creating image sensors that can digitally capture objects in 3D. The firm specializes in time of flight, a camera technology that you'll be familiar with if you've ever used t...