Over the last few decades, computer graphics have become an increasingly essential part of industrial design, especially in the automotive industry. Visualizing designs digitally gives designers and engineers the freedom to test and refine concepts and functionality before spending time and money on physical prototypes.

Ford is on the leading edge of using virtual reality to visualize new vehicles. I’ve spent some time checking out the automaker’s virtual reality environments, and they’re truly impressive, letting you walk around and step inside virtual vehicles. Now the company has announced that it’s using a new technology called ICE (Immersive Cinematic Engineering), which goes a step further than the systems I’ve seen.

Developed under the leadership of Elizabeth Baron, Ford’s virtual reality and advanced visualization specialist, ICE renders digital vehicles with truly photorealistic detail, so designers and engineers can see materials behave as they would in reality. That helps with tasks such as reducing distracting reflections, both inside the cabin and for other drivers on the road, and seeing how light interacts with the various materials used in the vehicle.

Cinematic ray tracing and global illumination techniques produce images so realistic that every surface, crease, and crevice interacts with light as it would in the real world. Earlier systems relied on precomputed shadows and reflections, whereas ICE renders them in real time. As a result, everything from how the headlights and interior lights work to how light passes through the windshield can be rendered realistically.
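To make the precomputed-versus-real-time distinction a little more concrete, here is a minimal, generic sketch of two basic ray-tracing operations: casting a shadow ray toward a light and computing a mirror reflection direction. This is not Ford’s ICE implementation, whose internals aren’t described here; it is a textbook-style illustration that assumes spheres as the only occluders, and every function name (`reflect`, `hit_sphere`, `in_shadow`) is hypothetical. The point is that shadows and reflections are answered by tracing rays at render time, so moving a light or an object changes the result immediately, with nothing baked in ahead of time.

```python
import math

# Small 3-vector helpers using plain tuples (hypothetical, for illustration only).
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)

def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

def reflect(d, n):
    """Mirror direction d about unit surface normal n: r = d - 2(d.n)n."""
    return sub(d, scale(n, 2.0 * dot(d, n)))

def hit_sphere(origin, direction, center, radius):
    """Nearest positive hit distance t along a unit-length ray, or None.

    Solves t^2 + 2bt + c = 0 with b = (o - center).d and c = |o - center|^2 - r^2.
    """
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # small epsilon avoids self-intersection at the origin
            return t
    return None

def in_shadow(point, light_pos, occluders):
    """Cast a shadow ray from point toward the light; True if any sphere blocks it."""
    to_light = sub(light_pos, point)
    dist = math.sqrt(dot(to_light, to_light))
    direction = normalize(to_light)
    for center, radius in occluders:
        t = hit_sphere(point, direction, center, radius)
        if t is not None and t < dist:  # only hits between the point and the light count
            return True
    return False

if __name__ == "__main__":
    blocker = [((0.0, 5.0, 0.0), 1.0)]
    print(in_shadow((0.0, 0.0, 0.0), (0.0, 10.0, 0.0), blocker))
    print(reflect((1.0, -1.0, 0.0), (0.0, 1.0, 0.0)))
```

Because `in_shadow` is evaluated per query rather than looked up from a stored shadow map, moving the blocker sideways (say, to `(5, 5, 0)`) flips the answer on the next call. A production renderer would add many more rays per pixel, acceleration structures, and global illumination on top of these building blocks.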

I can only imagine these improvements will give designers even more freedom when creating vehicles, since they’ll be able to refine the tiniest details of how light affects a car long before it goes into production.

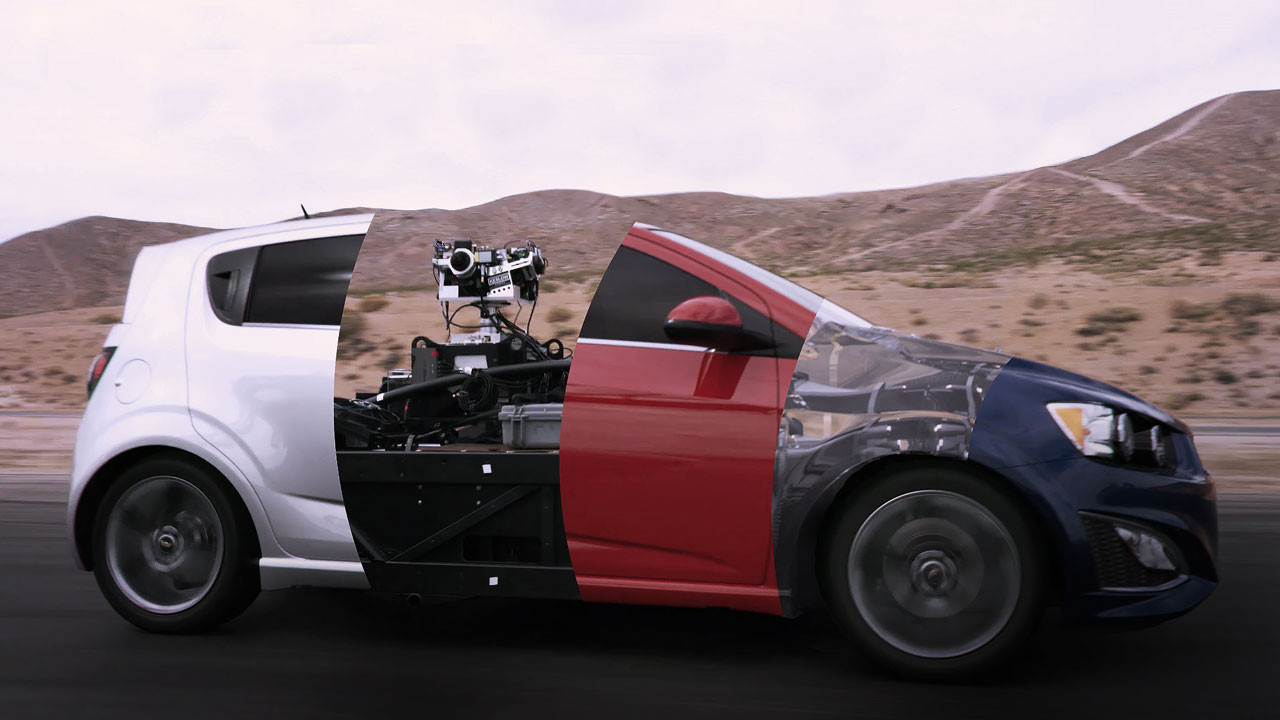

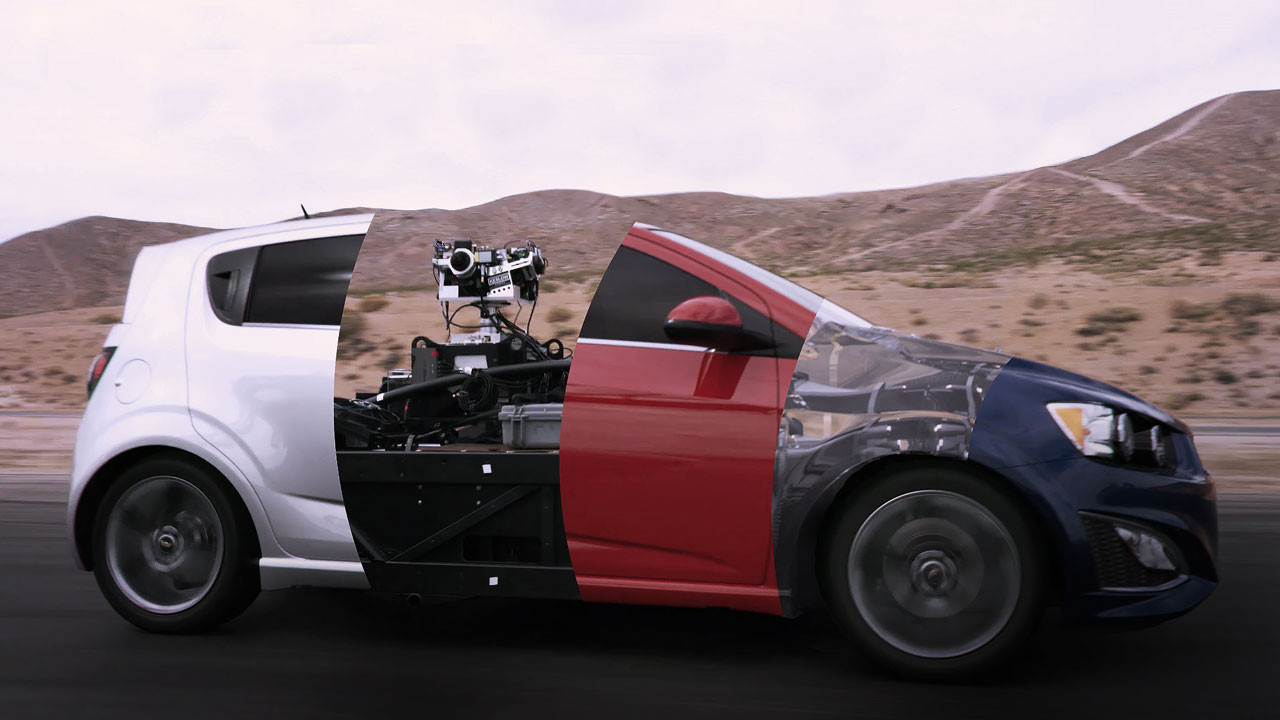
