CGI and animatronics may be getting alarmingly realistic, but the audio that goes with them often still relies on manual recordings. A pair of associate professors and a graduate student from Cornell University, however, have developed a method for synthesizing the sound of moving fabrics, such as rustling clothes, for use in animation and, potentially, film. The process, presented at SIGGRAPH but only reported to the public today, models two components of the natural sound of fabric: cloth moving against cloth, and crumpling. After modeling the energy and pattern of these two components, the system creates an approximation of the sound, which acts as a kind of "road map" for the final audio.
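To make the "road map" idea concrete, here is a minimal sketch. It is not the Cornell team's code. It assumes a cloth simulation already supplies one energy value per frame for each of the two components. The function name `road_map` and its parameters are hypothetical. Cloth-on-cloth motion is approximated as steady noise that follows the energy envelope. Crumpling is approximated as sparse clicks that become more frequent as crumple energy rises.

```python
import numpy as np

def road_map(friction_energy, crumple_energy, samples_per_frame=256, seed=0):
    """Hypothetical rough approximation of fabric sound from per-frame energies.

    friction_energy, crumple_energy: assumed simulation outputs, one value per
    frame, for cloth-on-cloth motion and crumpling respectively.
    """
    rng = np.random.default_rng(seed)
    friction = np.asarray(friction_energy, dtype=float)
    crumple = np.asarray(crumple_energy, dtype=float)
    assert len(friction) == len(crumple) > 0, "need matching, non-empty inputs"
    n = len(friction) * samples_per_frame

    # Cloth-on-cloth: broadband noise whose amplitude (sqrt of energy)
    # is held constant within each frame.
    friction_env = np.repeat(np.sqrt(friction), samples_per_frame)
    friction_part = friction_env * rng.standard_normal(n)

    # Crumpling: sparse random clicks; the per-sample click probability
    # scales with crumple energy relative to its peak (at most 1%).
    crumple_env = np.repeat(crumple, samples_per_frame)
    click_prob = np.clip(crumple_env / (crumple.max() + 1e-12), 0.0, 1.0) * 0.01
    clicks = (rng.random(n) < click_prob) * rng.standard_normal(n)

    return friction_part + clicks
```

For example, `road_map([0.0, 1.0, 0.5], [0.0, 0.0, 2.0], samples_per_frame=100)` returns 300 samples. The first frame is silent, and clicks can appear only in the last frame. A real system would derive these envelopes from the simulated cloth motion itself, not from hand-picked numbers.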

The end result is created by breaking the map down into much smaller fragments, which are then matched against a database of similar snippets of real field-recorded audio. The team even included binaural recordings to give a first-person perspective for headphone wearers. A human sound engineer still oversees the process, selecting the appropriate type of fabric and supervising how sounds are matched, so it's not quite ready for prime time. That's understandable, really, as this is still a proof of concept, with real-time operation and other improvements penciled in for future iterations. What does a virtual sheet being pulled over an imaginary sofa sound like? Head past the break to hear it in action, along with a presentation of the process.
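The matching step can be sketched as a simple form of concatenative synthesis. This is a minimal illustration built on stated assumptions, not the researchers' actual method. It assumes each fragment is compared with recorded clips only by its short-time RMS energy envelope, and that the nearest clip in Euclidean distance wins. The names `rms_envelope` and `match_fragments` are hypothetical, and the "database" is just a list of sample arrays.

```python
import numpy as np

def rms_envelope(x, hop=64):
    """Short-time RMS energy of a signal, one value per hop-sized block."""
    x = np.asarray(x, dtype=float)
    n_blocks = len(x) // hop
    blocks = x[: n_blocks * hop].reshape(n_blocks, hop)
    return np.sqrt(np.mean(blocks ** 2, axis=1))

def match_fragments(road_map, database, frag_len=1024, hop=64):
    """Replace each fragment of a rough road map with the closest recorded clip.

    database: list of (hypothetical) field recordings, each at least
    frag_len samples long. Returns the synthesized signal and the chosen
    database index for every fragment.
    """
    road_map = np.asarray(road_map, dtype=float)
    db_envs = [rms_envelope(clip[:frag_len], hop) for clip in database]
    out, choices = [], []
    for start in range(0, len(road_map) - frag_len + 1, frag_len):
        target = rms_envelope(road_map[start:start + frag_len], hop)
        # Choose the clip whose energy envelope is nearest to the target's.
        dists = [np.linalg.norm(target - env) for env in db_envs]
        best = int(np.argmin(dists))
        choices.append(best)
        out.append(np.asarray(database[best][:frag_len], dtype=float))
    signal = np.concatenate(out) if out else np.zeros(0)
    return signal, choices
```

This sketch butts fragments directly against each other and ignores any trailing partial fragment. A production system would at least crossfade the boundaries, and would likely compare richer features than energy alone. In the article's process, the sound engineer's choices (fabric type, matching supervision) would sit on top of a step like this.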



Fabricated: Scientists develop method to synthesize the sound of clothing for animations (video) originally appeared on Engadget on Wed, 26 Sep 2012 23:40:00 EDT.

PhysOrg | Cornell Chronicle

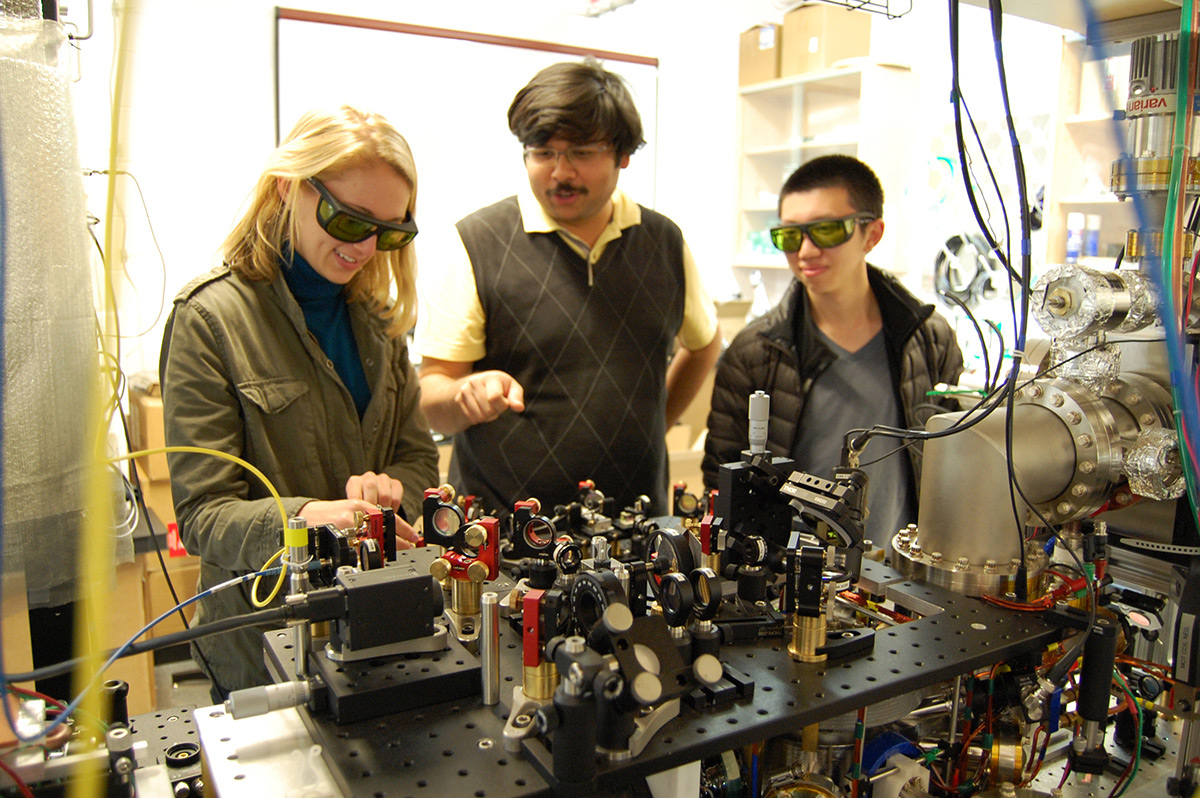

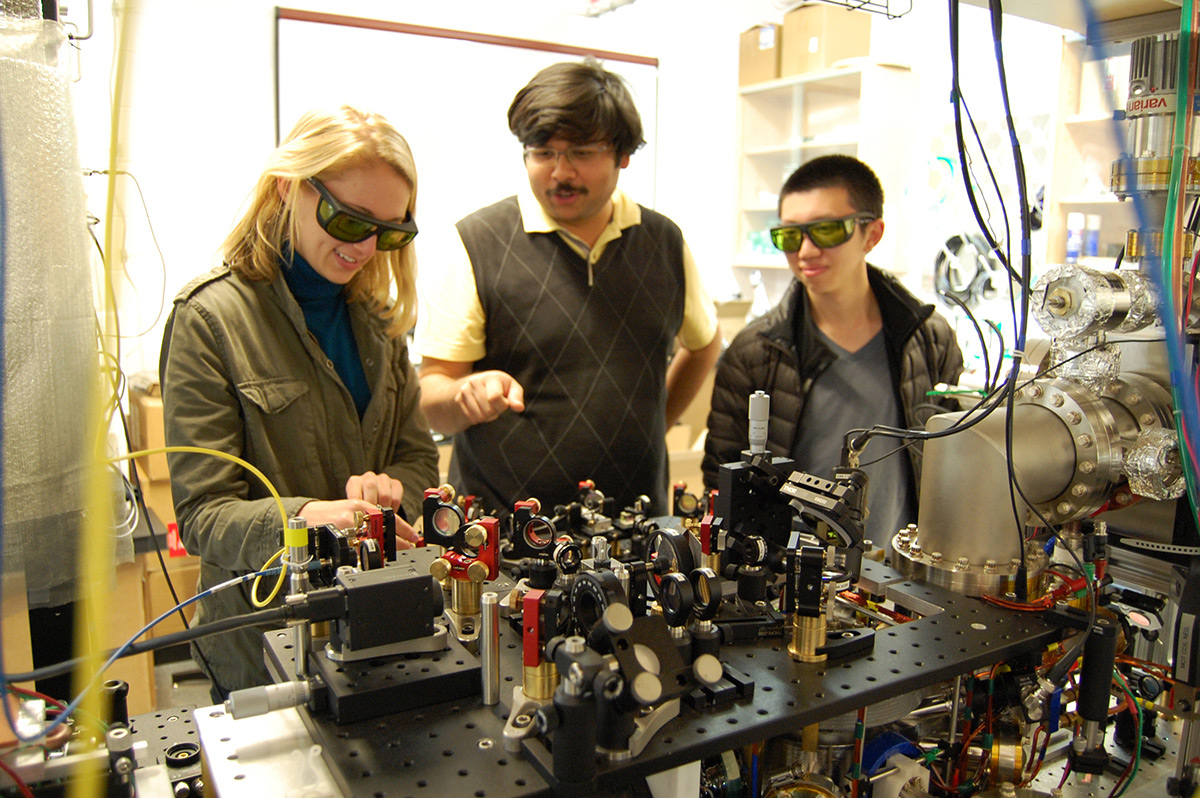

Quantum physics theory has an odd but fundamental quirk: atoms in a quantum state aren't supposed to move as long as you're measuring them. It sounds preposterous, but Cornell University researchers have just demonstrated that it's real. The team...

Quantum physics theory has an odd but fundamental quirk: atoms in a quantum state aren't supposed to move as long as you're measuring them. It sounds preposterous, but Cornell University researchers have just demonstrated that it's real. The team...

Quantum physics theory has an odd but fundamental quirk: atoms in a quantum state aren't supposed to move as long as you're measuring them. It sounds preposterous, but Cornell University researchers have just demonstrated that it's real. The team...

Quantum physics theory has an odd but fundamental quirk: atoms in a quantum state aren't supposed to move as long as you're measuring them. It sounds preposterous, but Cornell University researchers have just demonstrated that it's real. The team...

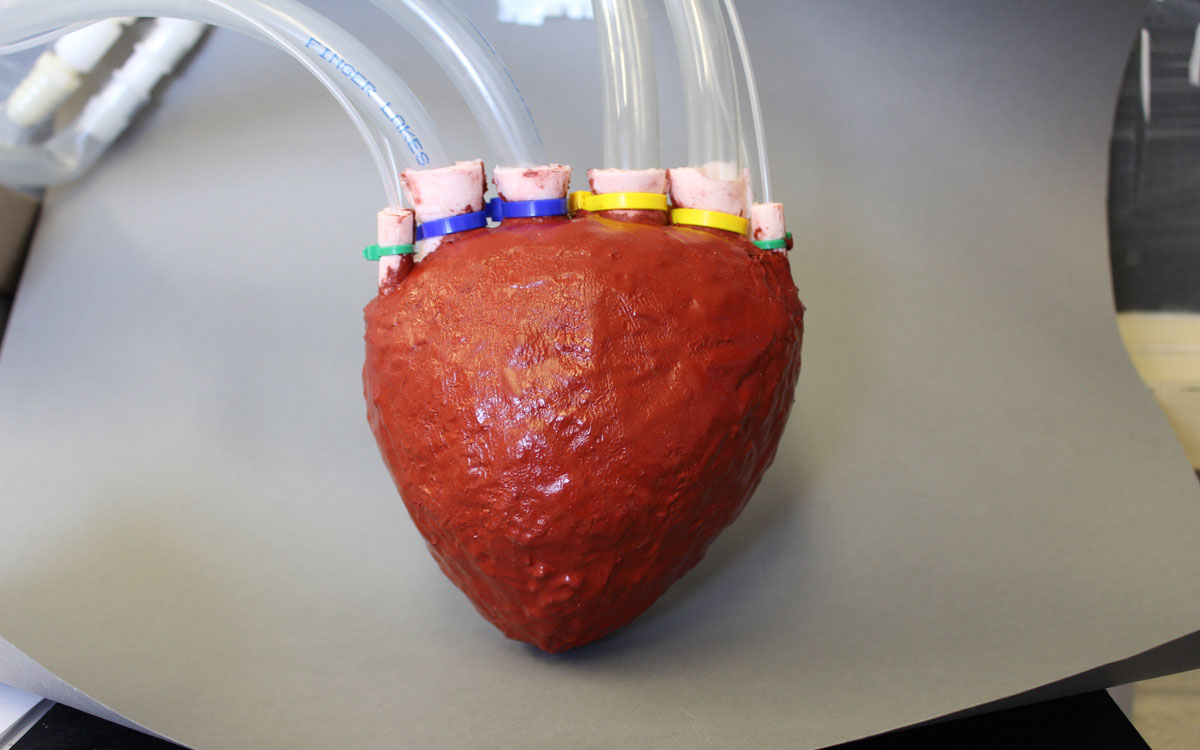

Artificial hearts only kinda-sorta behave like the real thing. They pump blood, sure, but they're typically solid blocks of machinery that are out of place in a squishy human body. Cornell University thinks it can do better, though: its scientist...

Artificial hearts only kinda-sorta behave like the real thing. They pump blood, sure, but they're typically solid blocks of machinery that are out of place in a squishy human body. Cornell University thinks it can do better, though: its scientist...